Generative AI and robotics are moving us ever closer to the day when we can ask for an object and have it created within a few minutes. In fact, MIT researchers have developed a speech-to-reality system, an AI-driven workflow that allows them to provide input to a robotic arm and “speak objects into existence,” creating things like furniture in as little as five minutes.

With the speech-to-reality system, a robotic arm mounted on a table receives spoken input from a human, such as “I want a simple stool,” and then constructs the requested object out of modular components. To date, the researchers have used the system to create stools, shelves, chairs, a small table, and even decorative items such as a dog statue.

“We’re connecting natural language processing, 3D generative AI, and robotic assembly,” says Alexander Htet Kyaw, an MIT graduate student and Morningside Academy for Design (MAD) fellow. “These are rapidly advancing areas of research that haven’t been brought together before in a way that you can actually make physical objects just from a simple speech prompt.”

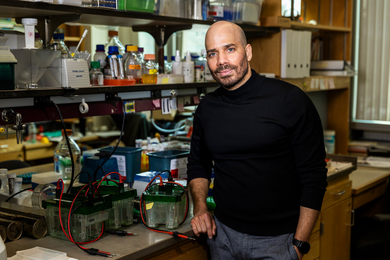

The idea started when Kyaw, who studies in the departments of Architecture and Electrical Engineering and Computer Science, took Professor Neil Gershenfeld’s course, “How to Make Almost Anything.” In that class, he built the speech-to-reality system. He continued working on the project at the MIT Center for Bits and Atoms (CBA), directed by Gershenfeld, collaborating with graduate students Se Hwan Jeon of the Department of Mechanical Engineering and Miana Smith of CBA.

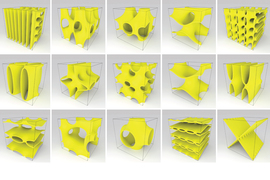

The speech-to-reality system begins with speech recognition that processes the user’s request using a large language model, followed by 3D generative AI that creates a digital mesh representation of the object, and a voxelization algorithm that breaks down the 3D mesh into assembly components.
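To give a feel for the voxelization step, here is a minimal sketch in Python. It is not the team's code: it assumes the shape is available as a simple inside/outside test rather than a real triangle mesh (which would need ray casting or a geometry library), and the names `voxelize` and `stool`, the 10 cm cube size, and the stool dimensions are all hypothetical. The idea is simply to lay a grid over the object and keep every cell whose center falls inside it.

```python
from typing import Callable, Set, Tuple

Voxel = Tuple[int, int, int]  # integer grid coordinates (x, y, z)


def voxelize(inside: Callable[[float, float, float], bool],
             size: Tuple[float, float, float],
             pitch: float) -> Set[Voxel]:
    """Return the grid cells whose centers lie inside the shape.

    `inside` is an assumed stand-in for a mesh containment query;
    `size` is the bounding box in meters, `pitch` the cube edge length.
    """
    nx, ny, nz = (max(1, round(s / pitch)) for s in size)
    cells: Set[Voxel] = set()
    for i in range(nx):
        for j in range(ny):
            for k in range(nz):
                # Sample the center of each grid cell.
                cx = (i + 0.5) * pitch
                cy = (j + 0.5) * pitch
                cz = (k + 0.5) * pitch
                if inside(cx, cy, cz):
                    cells.add((i, j, k))
    return cells


def stool(x: float, y: float, z: float) -> bool:
    """Hypothetical 30 cm stool: a seat slab resting on four corner legs."""
    if z >= 0.2:
        return True  # the top 10 cm is a solid seat
    on_x_edge = x < 0.1 or x >= 0.2
    on_y_edge = y < 0.1 or y >= 0.2
    return on_x_edge and on_y_edge  # legs sit only at the four corners


voxels = voxelize(stool, size=(0.3, 0.3, 0.3), pitch=0.1)
print(len(voxels), "cubes needed")
```

Each entry in `voxels` corresponds to one modular cube the robot would have to place, which is why the number of components becomes a constraint in the next stage.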

After that, geometric processing modifies the AI-generated assembly to account for fabrication and physical constraints associated with the real world, such as the number of components, overhangs, and connectivity of the geometry. This is followed by creation of a feasible assembly sequence and automated path planning for the robotic arm to assemble physical objects from user prompts.
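The connectivity check and assembly sequencing described above can be illustrated with a short Python sketch. This is one simple way to do it under stated assumptions, not the researchers' published algorithm: it assumes cubes attach face to face, that anything resting on the table (z = 0) is stable, and that a cube can be placed once it touches the table or a cube already in place. The function name `assembly_sequence` and the sample stool are hypothetical.

```python
import heapq
from typing import List, Set, Tuple

Voxel = Tuple[int, int, int]  # integer grid coordinates (x, y, z)

# The six face-adjacent directions; diagonal contact is assumed not to count.
FACE_NEIGHBORS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0),
                  (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def assembly_sequence(voxels: Set[Voxel]) -> List[Voxel]:
    """Order cubes so each one touches the table or an earlier cube.

    Raises ValueError if some cubes can never be reached from the table,
    meaning the structure is disconnected and cannot be built as given.
    """
    # Start from cubes on the table; heap keys are (z, y, x) so the
    # lowest reachable cube is always placed next.
    frontier = [(z, y, x) for (x, y, z) in voxels if z == 0]
    heapq.heapify(frontier)
    queued = {(x, y, z) for (z, y, x) in frontier}
    order: List[Voxel] = []
    while frontier:
        z, y, x = heapq.heappop(frontier)
        order.append((x, y, z))
        for dx, dy, dz in FACE_NEIGHBORS:
            n = (x + dx, y + dy, z + dz)
            if n in voxels and n not in queued:
                queued.add(n)
                heapq.heappush(frontier, (n[2], n[1], n[0]))
    if len(order) != len(voxels):
        raise ValueError("structure has cubes not connected to the table")
    return order


# Hypothetical stool: four two-cube legs under a 3x3 seat.
legs = {(x, y, z) for x in (0, 2) for y in (0, 2) for z in (0, 1)}
seat = {(x, y, 2) for x in range(3) for y in range(3)}
stool = legs | seat

order = assembly_sequence(stool)
print(order[:4])  # the four table-level leg cubes are placed first
```

A real planner would also have to account for overhang limits, the strength of the connections, and whether the arm can physically reach each position without colliding with cubes already placed.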

By leveraging natural language, the system makes design and manufacturing more accessible to people without expertise in 3D modeling or robotic programming. And, unlike 3D printing, which can take hours or days, this system builds within minutes.

“This project is an interface between humans, AI, and robots to co-create the world around us,” Kyaw says. “Imagine a scenario where you say ‘I want a chair,’ and within five minutes a physical chair materializes in front of you.”

The team has immediate plans to improve the weight-bearing capability of the furniture by changing the means of connecting the cubes from magnets to more robust connections.

“We’ve also developed pipelines for converting voxel structures into feasible assembly sequences for small, distributed mobile robots, which could help translate this work to structures at any size scale,” Smith says.

The purpose of using modular components is to eliminate the waste that goes into making physical objects: a finished piece can be disassembled and its parts reassembled into something different, for instance turning a sofa into a bed when you no longer need the sofa.

Because Kyaw also has experience using gesture recognition and augmented reality to interact with robots in the fabrication process, he is currently working on incorporating both speech and gestural control into the speech-to-reality system.

Leaning into his memories of the replicator in the “Star Trek” franchise and the robots in the animated film “Big Hero 6,” Kyaw explains his vision.

“I want to increase access for people to make physical objects in a fast, accessible, and sustainable manner,” he says. “I’m working toward a future where the very essence of matter is truly in your control. One where reality can be generated on demand.”

The team presented their paper “Speech to Reality: On-Demand Production using Natural Language, 3D Generative AI, and Discrete Robotic Assembly” at the Association for Computing Machinery (ACM) Symposium on Computational Fabrication (SCF ’25) held at MIT on Nov. 21.