The emergence of generative artificial intelligence has ignited a deep philosophical exploration into the nature of consciousness, creativity, and authorship. As we bear witness to new advances in the field, it’s increasingly apparent that these synthetic agents possess a remarkable capacity to create, iterate, and challenge our traditional notions of intelligence. But what does it really mean for an AI system to be “generative,” now that the boundaries of creative expression between humans and machines have blurred?

To some, “generative artificial intelligence,” a type of AI that can cook up new and original data or content similar to what it's been trained on, seemed to cascade into existence like an overnight sensation. While the new capabilities have indeed surprised many, the underlying technology has been in the making for some time.

But understanding the true capacity of these models can be as indistinct as some of the generative content they produce. To that end, researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) convened for discussions on the capabilities and limitations of generative AI, as well as its potential impacts on society and industries, with regard to language, images, and code.

There are various models of generative AI, each with its own approach and techniques. These include generative adversarial networks (GANs), variational autoencoders (VAEs), and diffusion models, which have all shown exceptional power in various industries and fields, from art to music and medicine. With that has also come a slew of ethical and social conundrums, such as the potential for generating fake news, deepfakes, and misinformation. Weighing these considerations is critical, the researchers say, as work continues on studying the capabilities and limitations of generative AI and on ensuring its ethical and responsible use.

During opening remarks, to illustrate the visual prowess of these models, MIT professor of electrical engineering and computer science (EECS) and CSAIL Director Daniela Rus pulled out a special gift her students recently bestowed upon her: a collage of AI portraits rife with smiling shots of Rus, running a spectrum of mirror-like reflections. Yet, there was no commissioned artist in sight.

The machine was to thank.

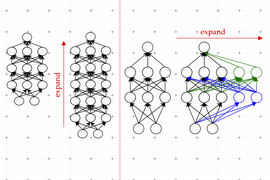

Generative models learn to make imagery by training on many photos collected from the internet and trying to make the output image look like the sample training data. There are many ways to train a neural network generator, and diffusion models are just one popular way. These models, explained by MIT associate professor of EECS and CSAIL principal investigator Phillip Isola, map from random noise to imagery. Using a process called diffusion, the model converts structured objects like images into random noise, and the process is inverted by training a neural net to remove the noise step by step until a noiseless image is obtained. If you’ve ever tried your hand at using DALL-E 2, where a sentence and random noise are input, and the noise congeals into images, you’ve used a diffusion model.
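To make the noising and denoising idea concrete, here is a minimal sketch in Python. It is not the panel's code or any particular product's implementation. It assumes a linear noise schedule and a made-up four-pixel "image," and every function name in it is hypothetical. The trained neural net is replaced by the true noise, so the sketch only shows that an accurate noise estimate is enough to recover the image. A real diffusion model learns that estimate and removes the noise over many small steps.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances that grow linearly (an assumed, common choice)."""
    if num_steps < 2:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_signal(betas):
    """Fraction of the original signal variance that survives after each step."""
    kept, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        kept.append(running)
    return kept


def add_noise(x0, alpha_bar, rng):
    """Forward process: blend the clean values with Gaussian noise."""
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    signal_scale = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    xt = [signal_scale * x + noise_scale * n for x, n in zip(x0, noise)]
    return xt, noise


def remove_noise(xt, noise_estimate, alpha_bar):
    """Invert add_noise given a noise estimate (a trained network would supply it)."""
    signal_scale = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    return [(x - noise_scale * n) / signal_scale for x, n in zip(xt, noise_estimate)]


rng = random.Random(0)
image = [0.2, 0.5, 0.9, 0.4]  # hypothetical four-pixel "image"
alpha_bars = cumulative_signal(linear_beta_schedule(1000))

# After the final step almost none of the original signal is left.
noisy, true_noise = add_noise(image, alpha_bars[-1], rng)

# Stand-in for the neural net: pretend it predicted the noise perfectly.
restored = remove_noise(noisy, true_noise, alpha_bars[-1])
```

In this sketch `noisy` is almost pure noise, while `restored` matches `image` up to floating-point error, which is the inversion the paragraph above describes.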

“To me, the most thrilling aspect of generative data is not its ability to create photorealistic images, but rather the unprecedented level of control it affords us. It offers us new knobs to turn and dials to adjust, giving rise to exciting possibilities. Language has emerged as a particularly powerful interface for image generation, allowing us to input a description such as ‘Van Gogh style’ and have the model produce an image that matches that description,” says Isola. “Yet, language is not all-encompassing; some things are difficult to convey solely through words. For instance, it might be challenging to communicate the precise location of a mountain in the background of a portrait. In such cases, alternative techniques like sketching can be used to provide more specific input to the model and achieve the desired output.”

Isola then used an image of a bird to show how the factors that control various aspects of a computer-generated image are like “dice rolls.” By changing these factors, such as the color or shape of the bird, the computer can generate many different variations of the image.

And if you haven’t used an image generator, there’s a chance you might have used similar models for text. Jacob Andreas, MIT assistant professor of EECS and CSAIL principal investigator, brought the audience from images into the world of generated words, acknowledging the impressive nature of models that can write poetry, have conversations, and do targeted generation of specific documents all in the same hour.

How do these models seem to express things that look like desires and beliefs? They leverage the power of word embeddings, Andreas explains, where words with similar meanings are assigned numerical values (vectors) and are placed in a space with many different dimensions. When these values are plotted, words that have similar meanings end up close to each other in this space. The proximity of those values shows how closely related the words are in meaning. (For example, perhaps “Romeo” is usually close to “Juliet,” and so on.) Transformer models, in particular, use something called an “attention mechanism” that selectively focuses on specific parts of the input sequence, allowing for multiple rounds of dynamic interactions between different elements. This iterative process can be likened to a series of “wiggles” or fluctuations between the different points, leading to the predicted next word in the sequence.
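The two ideas in this explanation, closeness in embedding space and attention, can be sketched in a few lines of Python. The three-dimensional vectors below are invented purely for illustration. Real models learn embeddings with hundreds or thousands of dimensions, and real transformers apply learned query, key, and value projections that this sketch leaves out. The function names are hypothetical.

```python
import math

# Made-up toy embeddings; the values are assumptions, not learned weights.
EMBEDDINGS = {
    "romeo": [0.90, 0.80, 0.10],
    "juliet": [0.85, 0.90, 0.05],
    "banana": [0.10, 0.00, 0.95],
}


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cosine_similarity(a, b):
    """Closeness of two word vectors, ignoring their lengths."""
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, k) / scale for k in keys])
    mixed = [sum(w * v[i] for w, v in zip(weights, values))
             for i in range(len(values[0]))]
    return weights, mixed


words = ["romeo", "juliet", "banana"]
vectors = [EMBEDDINGS[w] for w in words]

near = cosine_similarity(EMBEDDINGS["romeo"], EMBEDDINGS["juliet"])
far = cosine_similarity(EMBEDDINGS["romeo"], EMBEDDINGS["banana"])

# Let "romeo" attend over all three words, using the raw vectors as keys and values.
weights, context = attend(EMBEDDINGS["romeo"], vectors, vectors)
```

With these toy values, `near` is larger than `far`, and the attention weight that “romeo” places on “juliet” is larger than the weight it places on “banana.” Stacking many such attention rounds is what the paragraph above describes as repeated interactions between elements of the sequence.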

“Imagine being in your text editor and having a magical button in the top right corner that you could press to transform your sentences into beautiful and accurate English. We have had grammar and spell checking for a while, sure, but we can now explore many other ways to incorporate these magical features into our apps,” says Andreas. “For instance, we can shorten a lengthy passage, just like how we shrink an image in our image editor, and have the words appear as we desire. We can even push the boundaries further by helping users find sources and citations as they're developing an argument. However, we must keep in mind that even the best models today are far from being able to do this in a reliable or trustworthy way, and there's a huge amount of work left to do to make these sources reliable and unbiased. Nonetheless, there’s a massive space of possibilities where we can explore and create with this technology.”

Another feat of large language models, which can at times feel quite “meta,” was also explored: models that write code. They are sort of like little magic wands, except instead of spells, they conjure up lines of code, bringing (some) software developer dreams to life. MIT professor of EECS and CSAIL principal investigator Armando Solar-Lezama recalls some history from 2014, explaining how, at the time, there was a significant advancement in using “long short-term memory (LSTM),” a technology for language translation that could be used to correct programming assignments for predictable text with a well-defined task. Three years later, everyone’s favorite basic human need came on the scene: attention, ushered in by the 2017 Google paper introducing the mechanism, “Attention Is All You Need.” Shortly thereafter, a former CSAILer, Rishabh Singh, was part of a team that used attention to construct whole programs for relatively simple tasks in an automated way. Soon after, transformers emerged, leading to an explosion of research on using text-to-text mapping to generate code.

“Code can be run, tested, and analyzed for vulnerabilities, making it very powerful. However, code is also very brittle and small errors can have a significant impact on its functionality or security,” says Solar-Lezama. “Another challenge is the sheer size and complexity of commercial software, which can be difficult for even the largest models to handle. Additionally, the diversity of coding styles and libraries used by different companies means that the bar for accuracy when working with code can be very high.”

In the ensuing question-and-answer-based discussion, Rus opened with one on content: How can we make the output of generative AI more powerful, by incorporating domain-specific knowledge and constraints into the models? “Models for processing complex visual data such as 3-D models, videos, and light fields, which resemble the holodeck in Star Trek, still heavily rely on domain knowledge to function efficiently,” says Isola. “These models incorporate equations of projection and optics into their objective functions and optimization routines. However, with the increasing availability of data, it’s possible that some of the domain knowledge could be replaced by the data itself, which will provide sufficient constraints for learning. While we cannot predict the future, it’s plausible that as we move forward, we might need less structured data. Even so, for now, domain knowledge remains a crucial aspect of working with structured data.”

The panel also discussed the crucial nature of assessing the validity of generative content. Many benchmarks have been constructed to show that models are capable of achieving human-level accuracy in certain tests or tasks that require advanced linguistic abilities. However, upon closer inspection, simply paraphrasing the examples can cause the models to fail completely. Identifying modes of failure has become just as crucial as, if not more crucial than, training the models themselves.

Acknowledging the stage for the conversation — academia — Solar-Lezama talked about progress in developing large language models against the deep and mighty pockets of industry. Models in academia, he says, “need really big computers” to create desired technologies that don’t rely too heavily on industry support.

Beyond technical capabilities, limitations, and how it’s all evolving, Rus also brought up the moral stakes around living in an AI-generated world, in relation to deepfakes, misinformation, and bias. Isola mentioned newer technical solutions focused on watermarking, which could help users subtly tell whether an image or a piece of text was generated by a machine. “One of the things to watch out for here, is that this is a problem that’s not going to be solved purely with technical solutions. We can provide the space of solutions and also raise awareness about the capabilities of these models, but it is very important for the broader public to be aware of what these models can actually do,” says Solar-Lezama. “At the end of the day, this has to be a broader conversation. This should not be limited to technologists, because it is a pretty big social problem that goes beyond the technology itself.”

Another inclination around chatbots, robots, and a favored trope in many dystopian pop culture settings was discussed: the seduction of anthropomorphization. Why, for many, is there a natural tendency to project human-like qualities onto nonhuman entities? Andreas explained the opposing schools of thought around these large language models and their seemingly superhuman capabilities.

"Some believe that models like ChatGPT have already achieved human-level intelligence and may even be conscious," Andreas said, "but in reality these models still lack the true human-like capabilities to comprehend not only nuance, but sometimes they behave in extremely conspicuous, weird, nonhuman-like ways. On the other hand, some argue that these models are just shallow pattern recognition tools that can’t learn the true meaning of language. But this view also underestimates the level of understanding they can acquire from text. While we should be cautious of overstating their capabilities, we should also not overlook the potential harms of underestimating their impact. In the end, we should approach these models with humility and recognize that there is still much to learn about what they can and can’t do.”