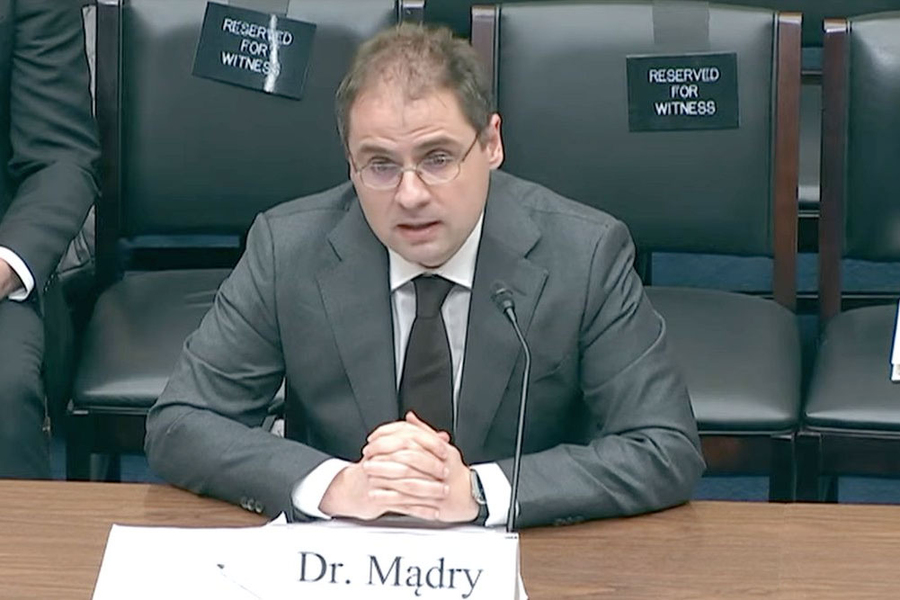

Government should not “abdicate” its responsibilities and leave the future path of artificial intelligence solely to Big Tech, Aleksander Mądry, the Cadence Design Systems Professor of Computing at MIT and director of the MIT Center for Deployable Machine Learning, told a Congressional panel on Wednesday.

Rather, Mądry said, government should be asking questions about the purpose and explainability of the algorithms corporations are using, as a precursor to regulation, which he described as “an important tool” in ensuring that AI is consistent with society’s goals. If the government doesn’t start asking questions, then “I am extremely worried” about the future of AI, Mądry said in response to a question from Rep. Gerald Connolly.

Mądry, a leading expert on explainability and AI, was testifying at a hearing titled “Advances in AI: Are We Ready for a Tech Revolution?” before the House Subcommittee on Cybersecurity, Information Technology, and Government Innovation, a panel of the House Committee on Oversight and Accountability. The other witnesses at the hearing were former Google CEO Eric Schmidt, IBM Vice President Scott Crowder, and Center for AI and Digital Policy Senior Research Director Merve Hickok.

In her opening remarks, Subcommittee Chair Rep. Nancy Mace cited the book “The Age of AI: And Our Human Future” by Schmidt, Henry Kissinger, and Dan Huttenlocher, the dean of the MIT Schwarzman College of Computing. She also called attention to a March 3 op-ed in The Wall Street Journal by the three authors that summarized the book while discussing ChatGPT. Mace said her formal opening remarks had been entirely written by ChatGPT.

In his prepared remarks, Mądry raised three overarching points. First, he noted that AI is “no longer a matter of science fiction” or confined to research labs. It is out in the world, where it can bring enormous benefits but also poses risks.

Second, he said AI exposes us to “interactions that go against our intuition.” Because AI tools like ChatGPT mimic human communication, he said, people are too likely to believe unquestioningly what such large language models produce. In the worst case, Mądry warned, human analytical skills will atrophy. He also said it would be a mistake to regulate AI as if it were human, for example by asking AI to explain its reasoning and assuming that the resulting answers are credible.

Finally, he said too little attention has been paid to problems that will result from the nature of the AI “supply chain” — the way AI systems are built on top of each other. At the base are general systems like ChatGPT, which can be developed by only a few companies because they are so expensive and complex to build. Layered on top of such systems are many AI systems designed to handle a particular task, like figuring out whom a company should hire.

Mądry said this layering raises several “policy-relevant” concerns. First, the entire system of AI is subject to whatever vulnerabilities or biases are in the large system at its base, and is dependent on the work of a few large companies. Second, the interaction of AI systems is not well understood from a technical standpoint, making the results of AI even more difficult to predict or explain, and making the tools difficult to “audit.” Finally, the mix of AI tools makes it difficult to know whom to hold responsible when a problem results: who should be legally liable and who should address the concern.

In the written material submitted to the subcommittee, Mądry concluded, “AI technology is not particularly well-suited for deployment through complex supply chains,” even though that is exactly how it is being deployed.

Mądry ended his testimony by calling on Congress to probe AI issues and to be prepared to act. “We are at an inflection point in terms of what future AI will bring. Seizing this opportunity means discussing the role of AI, what exactly we want it to do for us, and how to ensure it benefits us all. This will be a difficult conversation but we do need to have it, and have it now,” he told the subcommittee.

The testimony of all the hearing witnesses and a video of the hearing, which lasted about two hours, are available online.