Mary Ellen Zurko remembers the feeling of disappointment. Not long after earning her bachelor’s degree from MIT, she was working at her first job, evaluating secure computer systems for the U.S. government. The goal was to determine whether systems were compliant with the “Orange Book,” the government’s authoritative manual on cybersecurity at the time. Were the systems technically secure? Yes. In practice? Not so much.

“There was no concern whatsoever for whether the security demands on end users were at all realistic,” says Zurko. “The notion of a secure system was about the technology, and it assumed perfect, obedient humans.”

That discomfort set Zurko on a track that would define her career. In 1996, after returning to MIT for a master’s in computer science, she published an influential paper introducing the term “user-centered security.” It grew into a field of its own, concerned with making sure that cybersecurity is balanced with usability; otherwise, humans might circumvent security protocols and give attackers a foot in the door. Lessons from usable security now surround us, influencing the design of the phishing warnings we see when we visit an insecure site and the invention of the “strength” bar that appears when we type a desired password.

Now a cybersecurity researcher at MIT Lincoln Laboratory, Zurko is still enmeshed in humans’ relationship with computers. Her focus has shifted toward technology to counter influence operations, or attempts by foreign adversaries to deliberately spread false information (disinformation) on social media, with the intent of disrupting U.S. ideals.

In a recent editorial published in IEEE Security & Privacy, Zurko argues that many of the “human problems” within the usable security field resemble the problems of tackling disinformation. To some extent, she faces an undertaking similar to the one from her early career: convincing peers that such human issues are cybersecurity issues, too.

“In cybersecurity, attackers use humans as one means to subvert a technical system. Disinformation campaigns are meant to impact human decision-making; they’re sort of the ultimate use of cyber technology to subvert humans,” she says. “Both use computer technology and humans to get to a goal. It's only the goal that's different.”

Getting ahead of influence operations

Research on counteracting online influence operations is still young. Three years ago, Lincoln Laboratory initiated a study on the topic to understand its implications for national security. The field has since ballooned, particularly after dangerous, misleading Covid-19 claims spread online, promoted in some cases by China and Russia, as one RAND study found. There is now dedicated funding through the laboratory’s Technology Office toward developing influence operations countermeasures.

“It's important for us to strengthen our democracy and make all our citizens resilient to the kinds of disinformation campaigns targeted at them by international adversaries, who seek to disrupt our internal processes,” Zurko says.

Like cyberattacks, influence operations often follow a multistep path, called a kill chain, to exploit predictable weaknesses. Studying and reinforcing those weak points can help counter influence operations, just as it does in cyber defense. Lincoln Laboratory’s efforts focus on developing technology to support “source tending,” or reinforcing the early stages of the kill chain, when adversaries begin to find opportunities for a divisive or misleading narrative and build accounts to amplify it. Source tending helps alert U.S. information-operations personnel to a brewing disinformation campaign.

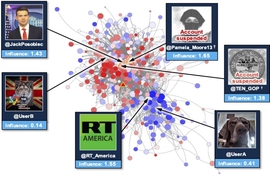

Several approaches at the laboratory are aimed at source tending. One uses machine learning to study digital personas, with the intent of identifying when the same person is behind multiple malicious accounts. Another focuses on building computational models that can identify deepfakes, or AI-generated videos and photos created to mislead viewers. Researchers are also developing tools to automatically identify which accounts hold the most influence over a narrative. First, the tools identify a narrative (in one paper, the researchers studied the disinformation campaign against French presidential candidate Emmanuel Macron) and gather data related to that narrative, such as keywords, retweets, and likes. Then, they use an analytical technique called causal network analysis to define and rank the influence of specific accounts: which accounts often generate posts that go viral?
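The laboratory’s causal network analysis is not described in detail here, so what follows is only a minimal Python sketch of the simpler question it is used to answer: which accounts often generate posts that go viral? It swaps in a plain frequency heuristic (the share of an account’s posts whose retweets plus likes cross a threshold) instead of a causal method. The `Post` record, the `rank_accounts_by_virality` function, and the 1,000-engagement threshold are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Post:
    """Hypothetical record of one post tied to the narrative under study."""
    account: str
    retweets: int
    likes: int


def rank_accounts_by_virality(posts, viral_threshold=1000):
    """Rank accounts by the fraction of their posts that went "viral".

    A post counts as viral here if retweets + likes meets a hypothetical
    threshold. This is a frequency heuristic, NOT causal network analysis:
    it does not establish that an account caused a narrative to spread.
    """
    total = defaultdict(int)  # posts per account
    viral = defaultdict(int)  # viral posts per account
    for post in posts:
        total[post.account] += 1
        if post.retweets + post.likes >= viral_threshold:
            viral[post.account] += 1

    scores = {acct: viral[acct] / total[acct] for acct in total}
    # Highest viral fraction first; break ties by raw number of viral posts.
    ranked = sorted(total, key=lambda acct: (scores[acct], viral[acct]), reverse=True)
    return [(acct, scores[acct]) for acct in ranked]


if __name__ == "__main__":
    sample = [
        Post("a", 900, 200),
        Post("a", 10, 5),
        Post("b", 2000, 50),
        Post("b", 3000, 1),
        Post("c", 1, 1),
    ]
    print(rank_accounts_by_virality(sample))
```

With the sample data, account "b" ranks first (both posts cross the threshold), then "a" (one of two), then "c" (none). A causal approach would go further, asking whether an account’s posts actually drive later activity rather than simply coinciding with it.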

These technologies are feeding into the work that Zurko is leading to develop a counter-influence operations test bed. The goal is to create a safe space to simulate social media environments and test counter-technologies. Most importantly, the test bed will allow human operators to be put into the loop to see how well new technologies help them do their jobs.

“Our military’s information-operations personnel are lacking a way to measure impact. By standing up a test bed, we can use multiple different technologies, in a repeatable fashion, to grow metrics that let us see if these technologies actually make operators more effective in identifying a disinformation campaign and the actors behind it.”

This vision is still aspirational as the team builds up the test bed environment. Simulating social media users and what Zurko calls the “grey cell,” the unwitting participants in online influence, is one of the greatest challenges to emulating real-world conditions. Reconstructing social media platforms is also a challenge; each platform has its own policies for dealing with disinformation and proprietary algorithms that influence disinformation’s reach. For example, The Washington Post reported that Facebook’s algorithm gave “extra value” to posts that received anger reactions, weighting such reactions five times as heavily as a “like” when ranking a user’s news feed, and such content is disproportionately likely to include misinformation. These often-hidden dynamics are important to replicate in a test bed, both to study the spread of fake news and to understand the impact of interventions.
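To make the idea of replicating such ranking dynamics concrete, here is a minimal Python sketch of how a test bed might model a reaction-weighted feed and then test an intervention against it. Everything in it is an assumption: the `SimPost` record, the `engagement_score` and `rank_feed` functions, and the weights, with the anger weight of 5 chosen only to echo the five-fold figure in the Washington Post report. It does not reproduce any real platform’s algorithm.

```python
from dataclasses import dataclass


@dataclass
class SimPost:
    """A post in a hypothetical simulated feed."""
    post_id: str
    likes: int = 0
    angry: int = 0


def engagement_score(post, like_weight=1.0, angry_weight=5.0):
    """Weighted engagement score; both weights are hypothetical test-bed knobs."""
    return like_weight * post.likes + angry_weight * post.angry


def rank_feed(posts, **weights):
    """Return post IDs in descending score order, as a simulated feed might show them."""
    ordered = sorted(posts, key=lambda p: engagement_score(p, **weights), reverse=True)
    return [p.post_id for p in ordered]


if __name__ == "__main__":
    calm = SimPost("calm", likes=100, angry=0)      # score 100 under default weights
    outrage = SimPost("outrage", likes=10, angry=30)  # score 10 + 5 * 30 = 160
    print(rank_feed([calm, outrage]))                  # baseline ranking
    print(rank_feed([calm, outrage], angry_weight=1.0))  # "intervention": equal weights
```

Under the default weights the outrage post outranks the calm one; setting `angry_weight=1.0` flips the order. Comparing runs like these is the kind of controlled, repeatable experiment a test bed could support when measuring how an intervention changes what simulated users see.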

Taking a full-system approach

In addition to building a test bed to combine new ideas, Zurko is also advocating for a unified space that disinformation researchers can call their own. Such a space would allow researchers in sociology, psychology, policy, and law to come together and share cross-cutting aspects of their work alongside cybersecurity experts. The best defenses against disinformation will require this diversity of expertise, Zurko says, and “a full-system approach of both human-centered and technical defenses.”

Though this space doesn’t yet exist, it’s likely on the horizon as the field continues to grow. Influence operations research is gaining traction in the cybersecurity world. “Just recently, the top conferences have begun putting disinformation research in their call for papers, which is a real indicator of where things are going,” Zurko says. “But, some people still hold on to the old-school idea that messy humans don’t have anything to do with cybersecurity.”

Despite those sentiments, Zurko still trusts her early observation as a researcher — what cyber technology can do effectively is moderated by how people use it. She wants to continue to design technology, and approach problem-solving, in a way that places humans center-frame. “From the very start, what I loved about cybersecurity is that it’s partly mathematical rigor and partly sitting around the ‘campfire’ telling stories and learning from one another,” Zurko reflects. Disinformation gets its power from humans’ ability to influence each other; that ability may also just be the most powerful defense we have.