Are schools that feature strong test scores highly effective, or do they mostly enroll students who are already well-prepared for success? A study co-authored by MIT scholars concludes that widely disseminated school quality ratings reflect the preparation and family background of their students as much as, or more than, a school’s contribution to learning gains.

Indeed, the study finds that many schools that receive relatively low ratings perform better than these ratings would imply. Conventional ratings, the research makes clear, are highly correlated with race. Specifically, many published school ratings are highly positively correlated with the share of the student body that is white.

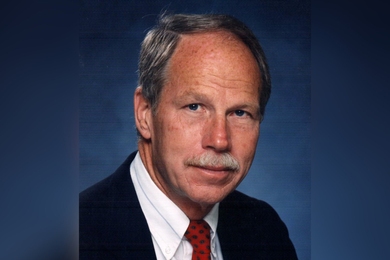

“A school’s average outcomes reflect, to some extent, the demographic mix of the population it serves,” says MIT economist Josh Angrist, a Nobel Prize winner who has long analyzed education outcomes. Angrist is co-author of a newly published paper detailing the study’s results.

The study, which examines the Denver and New York City school districts, has the potential to significantly improve the way school quality is measured. Instead of raw aggregate measures like test scores, the study uses changes in test scores and a statistical adjustment for racial composition to compute more accurate measures of the causal effects that attending a particular school has on students’ learning gains. This methodologically sophisticated research builds on the fact that Denver and New York City both assign students to schools in ways that allow the researchers to mimic the conditions of a randomized trial.
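To make the idea of adjusting for student composition concrete, here is a minimal sketch in Python. It is not the authors' regression; it swaps in a simpler technique, direct standardization, in which each school's average score gain is computed within each student group and then re-weighted to the district-wide group mix. The school names, group labels, and scores are all hypothetical, and the sketch assumes every school enrolls at least one student from every group.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical student records: (school, group, prior_score, final_score).
RECORDS = [
    ("North", "g1", 70, 74), ("North", "g1", 72, 76), ("North", "g1", 68, 72),
    ("North", "g2", 50, 52),
    ("South", "g1", 69, 75),
    ("South", "g2", 48, 54), ("South", "g2", 52, 58), ("South", "g2", 50, 56),
]

def raw_average(records):
    """Conventional rating: each school's mean final score."""
    scores = defaultdict(list)
    for school, _group, _prior, final in records:
        scores[school].append(final)
    return {school: mean(vals) for school, vals in sorted(scores.items())}

def balanced_gain(records):
    """Mean score gain per school, re-weighted to the district-wide group mix.

    Assumes every school has at least one student in every group.
    """
    group_counts = defaultdict(int)
    gains = defaultdict(list)  # (school, group) -> list of score gains
    for school, group, prior, final in records:
        group_counts[group] += 1
        gains[(school, group)].append(final - prior)
    total = sum(group_counts.values())
    schools = sorted({school for school, _ in gains})
    return {
        school: sum(
            group_counts[g] / total * mean(gains[(school, g)])
            for g in group_counts
        )
        for school in schools
    }

raw = raw_average(RECORDS)        # {'North': 68.5, 'South': 60.75}
balanced = balanced_gain(RECORDS)  # {'North': 3.0, 'South': 6.0}
```

In this toy data, North looks better on raw averages, while South produces larger gains once both schools are compared on the same student mix.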

In documenting a strong correlation between currently used rating systems and race, the study finds that white and Asian students tend to attend higher-rated schools, while Black and Hispanic students tend to be clustered at lower-rated schools.

“Simple measures of school quality, which are based on the average statistics for the school, are invariably highly correlated with race, and those measures tend to be a misleading guide of what you can expect by sending your child to that school,” Angrist says.

The paper, “Race and the Mismeasure of School Quality,” appears in the latest issue of the American Economic Review: Insights. The authors are Angrist, the Ford Professor of Economics at MIT; Peter Hull PhD ’17, a professor of economics at Brown University; Parag Pathak, the Class of 1922 Professor of Economics at MIT; and Christopher Walters PhD ’13, an associate professor of economics at the University of California at Berkeley. Angrist and Pathak are both professors in the MIT Department of Economics and co-founders of MIT’s Blueprint Labs, a research group that often examines school performance.

The study uses data provided by the Denver and New York City public school districts, where 6th-graders apply for seats at certain middle schools, and the districts use a centralized school-assignment system. In these districts, students can opt for any school in the district, but some schools are oversubscribed. For those schools, the district uses random lottery numbers to determine who gets a seat.

By virtue of the lottery inside the seat-assignment algorithm, otherwise-similar sets of students randomly attend an array of different schools. This facilitates comparisons that reveal causal effects of school attendance on learning gains, as in a randomized clinical trial of the sort used in medical research. Using math and English test scores, the researchers evaluated student progress in Denver from the 2012-2013 through the 2018-2019 school years, and in New York City from the 2016-2017 through the 2018-2019 school years.
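The logic of a lottery-based comparison can be sketched with a small simulation. This is an illustration under simplifying assumptions, not the study's estimator: there is a single oversubscribed school, every student who wins a seat attends, and the function name, sample sizes, and the "true" effect of 5 points are all hypothetical.

```python
import random
import statistics

def simulate_lottery(n_applicants=20000, n_seats=10000, true_effect=5.0, seed=0):
    """Estimate a school's effect by comparing lottery winners with losers."""
    rng = random.Random(seed)
    # Each applicant has a preparation level that also drives test scores.
    prep = [rng.gauss(0, 10) for _ in range(n_applicants)]
    # Random lottery numbers: the lowest n_seats tickets receive a seat.
    tickets = [rng.random() for _ in range(n_applicants)]
    cutoff = sorted(tickets)[n_seats - 1]
    winners, losers = [], []
    for p, ticket in zip(prep, tickets):
        offered = ticket <= cutoff
        # Simplification: everyone who is offered a seat attends the school.
        score = 50 + p + (true_effect if offered else 0.0) + rng.gauss(0, 5)
        (winners if offered else losers).append(score)
    # Winners and losers are alike on average, so the gap reflects the school.
    return statistics.mean(winners) - statistics.mean(losers)

estimate = simulate_lottery()
```

Because the tickets are unrelated to preparation, the winner-loser gap lands close to the assumed effect, even though preparation itself is never used in the comparison.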

Those school-assignment systems, it happens, are mechanisms some of the researchers have helped construct, allowing them to better grasp and measure the effects of school assignment.

“An unexpected dividend of our work designing Denver and New York City’s centralized choice systems is that we see how students are rationed from [distributed among] schools,” says Pathak. “This leads to a research design that can isolate cause and effect.”

Ultimately, the study shows that much of the school-to-school variation in raw aggregate test scores stems from the types of students at any given school. This is a case of what researchers call “selection bias.” In this case, selection bias arises from the fact that more-advantaged families tend to prefer the same sets of schools.
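A short simulation shows how selection bias can create a ratings gap between two schools that are equally effective. All names and numbers here are hypothetical: both schools add the same 4 points to every student's score, but school A enrolls students with higher prior scores.

```python
import random
from statistics import mean

def simulate_school(rng, n_students, mean_prior, value_added):
    """Return (prior, final) score lists for one hypothetical school."""
    priors = [rng.gauss(mean_prior, 10) for _ in range(n_students)]
    finals = [p + value_added + rng.gauss(0, 3) for p in priors]
    return priors, finals

rng = random.Random(1)
# Same value added at both schools; only the incoming students differ.
a_prior, a_final = simulate_school(rng, 5000, mean_prior=70, value_added=4)
b_prior, b_final = simulate_school(rng, 5000, mean_prior=55, value_added=4)

# Gap in raw average scores, the basis of many conventional ratings.
raw_gap = mean(a_final) - mean(b_final)

# Gap in average score gains, which nets out where students started.
gain_a = mean(f - p for p, f in zip(a_prior, a_final))
gain_b = mean(f - p for p, f in zip(b_prior, b_final))
gain_gap = gain_a - gain_b
```

Here `raw_gap` is large and driven entirely by who enrolls, while `gain_gap` is close to zero because the two schools contribute equally to learning.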

“The fundamental problem here is selection bias,” Angrist says. “In the case of schools, selection bias is very consequential and a big part of American life. A lot of decision-makers, whether they’re families or policymakers, are being misled by a kind of naïve interpretation of the data.”

Indeed, Pathak notes, the preponderance of more simplistic school ratings today (found on many popular websites) not only creates a deceptive picture of how much value schools add for students, but also has a self-reinforcing effect, since well-prepared and better-off families bid up housing costs near highly rated schools. As the scholars write in the paper, “Biased rating schemes direct households to low-minority rather than high-quality schools, while penalizing schools that improve achievement for disadvantaged groups.”

The research team hopes their study will lead districts to examine and improve the way they measure and report on school quality. To that end, Blueprint Labs is working with the New York City Department of Education to pilot a new ratings system later this year. They also plan additional work examining the way families respond to different sorts of information about school quality.

The researchers believe their proposed improvement to ratings is straightforward: account for student preparation and improvement. For that reason, they think more officials and districts may be interested in updating their measurement practices.

“We’re hopeful that the simple regression adjustment we propose makes it relatively easy for school districts to use our measure in practice,” Pathak says.

The research received support from the Walton Foundation and the National Science Foundation.