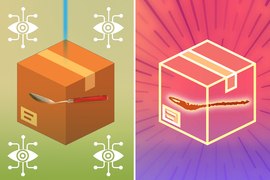

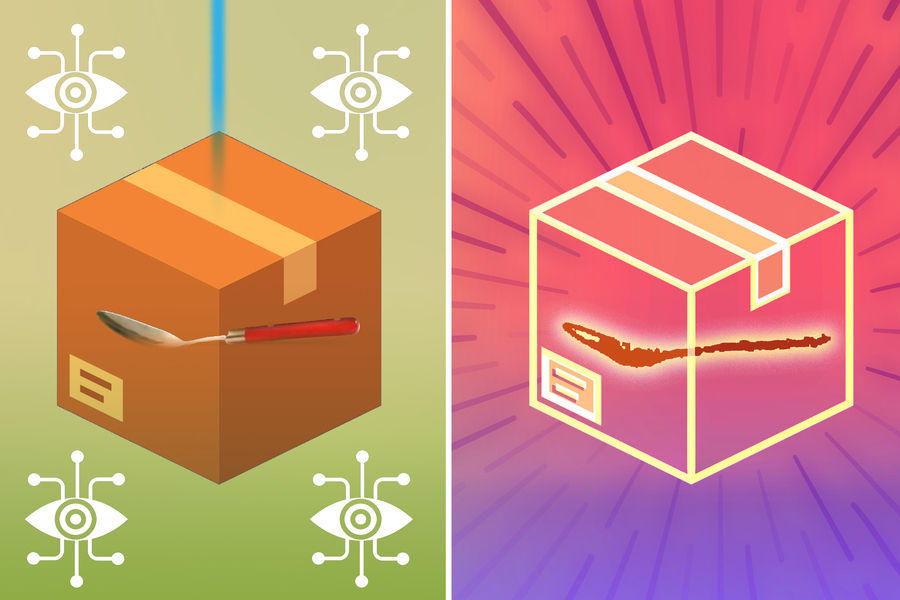

A new imaging technique developed by MIT researchers could enable quality-control robots in a warehouse to peer through a cardboard shipping box and see that the handle of a mug buried under packing peanuts is broken.

Their approach leverages millimeter wave (mmWave) signals, the same type of signals used in some high-frequency Wi-Fi systems, to create accurate 3D reconstructions of objects that are blocked from view.

The waves can travel through common obstacles like plastic containers or interior walls, and reflect off hidden objects. The system, called mmNorm, collects those reflections and feeds them into an algorithm that estimates the shape of the object’s surface.

This new approach achieved 96 percent reconstruction accuracy on a range of everyday objects with complex, curvy shapes, like silverware and a power drill. State-of-the-art baseline methods achieved only 78 percent accuracy.

In addition, mmNorm does not require additional bandwidth to achieve such high accuracy. This efficiency could allow the method to be used in a wide range of settings, from factories to assisted living facilities.

For instance, mmNorm could enable robots working in a factory or home to distinguish between tools hidden in a drawer and identify their handles, so they could more efficiently grasp and manipulate the objects without causing damage.

“We’ve been interested in this problem for quite a while, but we’ve been hitting a wall because past methods, while they were mathematically elegant, weren’t getting us where we needed to go. We needed to come up with a very different way of using these signals than what has been used for more than half a century to unlock new types of applications,” says Fadel Adib, associate professor in the Department of Electrical Engineering and Computer Science, director of the Signal Kinetics group in the MIT Media Lab, and senior author of a paper on mmNorm.

Adib is joined on the paper by research assistants Laura Dodds, the lead author, and Tara Boroushaki, and former postdoc Kaichen Zhou. The research was recently presented at the Annual International Conference on Mobile Systems, Applications and Services.

Reflecting on reflections

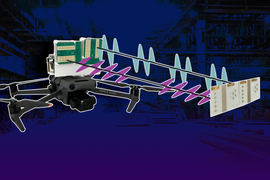

Traditional radar techniques send mmWave signals and receive reflections from the environment to detect hidden or distant objects, a technique called back projection.

This method works well for large objects, like an airplane obscured by clouds, but the image resolution is too coarse for small items like kitchen gadgets that a robot might need to identify.
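
In its simplest form, back projection can be sketched in a few lines of Python. The example below is a toy illustration, not the researchers' code: the 5 mm wavelength, the antenna layout, the single ideal point reflector, and the function names are all assumptions chosen for clarity.

```python
import cmath
import math

WAVELENGTH = 0.005  # metres; assumed value (roughly a 60 GHz signal), not from the article


def simulate_echoes(antennas, target):
    """Ideal round-trip echo at each antenna from one point reflector (assumed model)."""
    return [
        cmath.exp(-1j * 4 * math.pi * math.dist(a, target) / WAVELENGTH)
        for a in antennas
    ]


def back_project(antennas, echoes, point):
    """Undo the round-trip phase expected for `point`, then sum across antennas.

    If a reflector really sits at `point`, the terms line up and the sum is large.
    """
    total = sum(
        s * cmath.exp(1j * 4 * math.pi * math.dist(a, point) / WAVELENGTH)
        for a, s in zip(antennas, echoes)
    )
    return abs(total)


# 41 antenna positions along a 10 cm line, with a reflector 20 cm away (hypothetical setup)
antennas = [(-0.05 + 0.0025 * i, 0.0) for i in range(41)]
target = (0.01, 0.20)
echoes = simulate_echoes(antennas, target)

# Score a coarse grid of candidate points; the brightest one is the location estimate
grid = [(x / 100, y / 100) for x in range(-3, 4) for y in range(17, 24)]
brightest = max(grid, key=lambda p: back_project(antennas, echoes, p))
```

In this idealized setup, the brightest candidate coincides with the simulated reflector. Note that the score says only where a reflection came from, not which way the surface at that point is facing.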

In studying this problem, the MIT researchers realized that existing back projection techniques ignore an important property known as specularity. When a radar system transmits mmWaves, almost every surface the waves strike acts like a mirror, generating specular reflections.

If a surface is pointed toward the antenna, the signal will reflect off the object to the antenna, but if the surface is pointed in a different direction, the reflection will travel away from the radar and won’t be received.
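
The mirror-like behavior described above follows the standard law of reflection, which can be sketched as follows. This is a geometric illustration only: the function names and the 5-degree tolerance (a stand-in for how wide an angle the antenna can "hear") are assumptions, not values from the research.

```python
import math


def unit(v):
    """Scale a vector to length 1."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def reflect(direction, normal):
    """Mirror-reflect a direction about a unit surface normal: r = d - 2(d.n)n."""
    dot = sum(d * n for d, n in zip(direction, normal))
    return tuple(d - 2 * dot * n for d, n in zip(direction, normal))


def returns_to_antenna(antenna, surface_point, normal, tolerance_deg=5.0):
    """True if the specular reflection heads back toward the antenna.

    `tolerance_deg` is an assumed acceptance angle, not a measured quantity.
    """
    incoming = unit(tuple(p - a for a, p in zip(antenna, surface_point)))
    outgoing = reflect(incoming, unit(normal))
    back = tuple(-c for c in incoming)
    cos_angle = max(-1.0, min(1.0, sum(o * b for o, b in zip(outgoing, back))))
    return math.degrees(math.acos(cos_angle)) <= tolerance_deg


antenna = (0.0, 0.0, 1.0)
facing = returns_to_antenna(antenna, (0.0, 0.0, 0.0), (0.0, 0.0, 1.0))  # surface faces the antenna
tilted = returns_to_antenna(antenna, (0.0, 0.0, 0.0), (1.0, 0.0, 1.0))  # surface tilted 45 degrees
```

Here `facing` is `True` and `tilted` is `False`: the tilted surface sends its reflection off to the side, so the antenna receives nothing from it.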

“Relying on specularity, our idea is to try to estimate not just the location of a reflection in the environment, but also the direction of the surface at that point,” Dodds says.

They developed mmNorm to estimate what is called a surface normal, which is the direction perpendicular to a surface at a particular point in space, and to use these estimates to reconstruct the curvature of the surface at that point.

Combining surface normal estimations at each point in space, mmNorm uses a special mathematical formulation to reconstruct the 3D object.
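
The article does not spell out mmNorm's formulation, but the general idea of recovering a shape from surface normals can be illustrated with a much simpler, textbook-style technique: integrating slopes along a 2D cross-section. In the sketch below, the function name, the 2D simplification, and the trapezoid-rule integration are all assumptions made for illustration, not the researchers' method.

```python
def heights_from_normals(xs, normals, z0=0.0):
    """Recover a height profile z(x) from 2D surface normals (nx, nz).

    For a curve z = f(x), the normal is proportional to (-f'(x), 1),
    so the local slope is -nx / nz. Slopes are then integrated with
    the trapezoid rule, starting from an assumed height z0.
    """
    slopes = [-nx / nz for nx, nz in normals]
    heights = [z0]
    for i in range(1, len(xs)):
        step = xs[i] - xs[i - 1]
        heights.append(heights[-1] + 0.5 * (slopes[i] + slopes[i - 1]) * step)
    return heights


# Normals sampled from the parabola z = x^2, whose slope is 2x
xs = [0.0, 0.5, 1.0]
normals = [(0.0, 1.0), (-1.0, 1.0), (-2.0, 1.0)]
profile = heights_from_normals(xs, normals)
```

For this example the recovered `profile` is `[0.0, 0.25, 1.0]`, matching the parabola the normals were sampled from.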

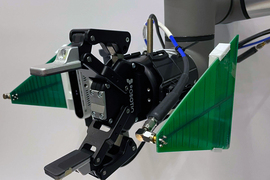

The researchers created an mmNorm prototype by attaching a radar to a robotic arm, which continually takes measurements as it moves around a hidden item. The system compares the strength of the signals it receives at different locations to estimate the curvature of the object’s surface.

For instance, the antenna will receive the strongest reflections from a surface pointed directly at it and weaker signals from surfaces that don’t directly face the antenna.

Because multiple antennas on the radar receive some amount of reflection, each antenna “votes” on the direction of the surface normal based on the strength of the signal it received.

“Some antennas might have a very strong vote, some might have a very weak vote, and we can combine all votes together to produce one surface normal that is agreed upon by all antenna locations,” Dodds says.
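
One simple way to picture this voting is as a weighted average: each antenna location votes for the direction pointing from the surface toward itself, and the vote counts in proportion to the signal strength received there. The sketch below is a simplified illustration under that assumption. The article does not give mmNorm's exact voting rule, and the function name and inputs are hypothetical.

```python
import math


def estimate_normal(point, antenna_positions, strengths):
    """Combine per-antenna votes into one unit surface normal (assumed weighting)."""
    vote = [0.0, 0.0, 0.0]
    for antenna, strength in zip(antenna_positions, strengths):
        # Direction from the surface point toward this antenna location
        to_antenna = [a - p for a, p in zip(antenna, point)]
        length = math.sqrt(sum(c * c for c in to_antenna))
        for i in range(3):
            vote[i] += strength * to_antenna[i] / length
    norm = math.sqrt(sum(c * c for c in vote))
    if norm == 0.0:
        raise ValueError("votes cancel out; no direction can be estimated")
    return tuple(c / norm for c in vote)


point = (0.0, 0.0, 0.0)
antennas = [(1.0, 0.0, 1.0), (-1.0, 0.0, 1.0), (0.0, 0.0, 1.0)]

# Strongest echo straight overhead: the estimate points straight up
upward = estimate_normal(point, antennas, [0.2, 0.2, 1.0])

# Strongest echo from the +x side: the estimate tilts toward +x
tilted = estimate_normal(point, antennas, [1.0, 0.1, 0.1])
```

Weak votes still contribute, which is why a surface seen faintly from many positions can still yield a usable normal estimate in this simplified picture.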

In addition, because mmNorm estimates the surface normal at every point in space, it generates many possible surfaces. To zero in on the right one, the researchers borrowed techniques from computer graphics, creating a 3D function that chooses the surface most representative of the signals received. They use this to generate a final 3D reconstruction.

Finer details

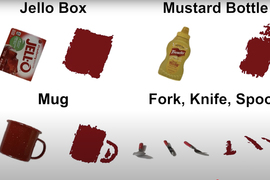

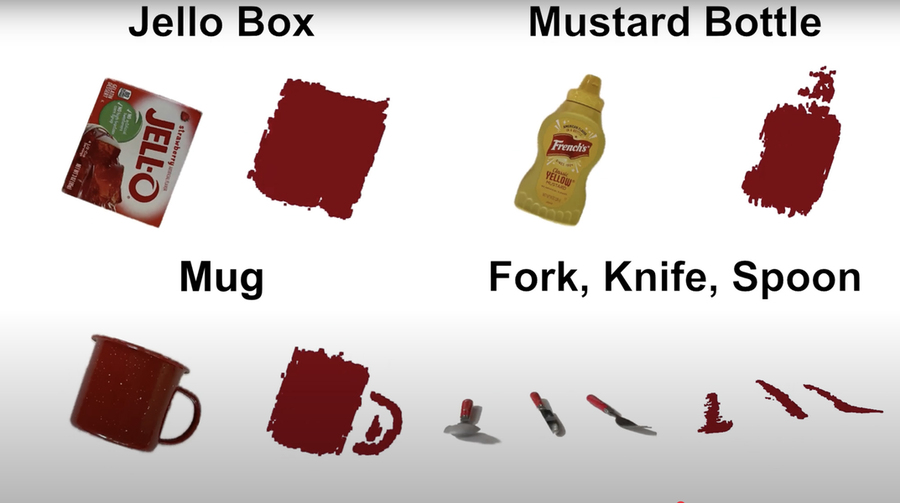

The team tested mmNorm’s ability to reconstruct more than 60 objects with complex shapes, like the handle and curve of a mug. It generated reconstructions with about 40 percent less error than state-of-the-art approaches, while also estimating the position of an object more accurately.

Their new technique can also distinguish between multiple objects, like a fork, knife, and spoon hidden in the same box. It also performed well for objects made from a range of materials, including wood, metal, plastic, rubber, and glass, as well as combinations of materials, but it does not work for objects hidden behind metal or very thick walls.

“Our qualitative results really speak for themselves. And the amount of improvement you see makes it easier to develop applications that use these high-resolution 3D reconstructions for new tasks,” Boroushaki says.

For instance, a robot can distinguish between multiple tools in a box, determine the precise shape and location of a hammer’s handle, and then plan to pick it up and use it for a task. One could also use mmNorm with an augmented reality headset, enabling a factory worker to see lifelike images of fully occluded objects.

It could also be incorporated into existing security and defense applications, generating more accurate reconstructions of concealed objects in airport security scanners or during military reconnaissance.

The researchers want to explore these and other potential applications in future work. They also want to improve the resolution of their technique, boost its performance for less reflective objects, and enable the mmWaves to effectively image through thicker occlusions.

“This work really represents a paradigm shift in the way we are thinking about these signals and this 3D reconstruction process. We’re excited to see how the insights that we’ve gained here can have a broad impact,” Dodds says.

This work is supported, in part, by the National Science Foundation, the MIT Media Lab, and Microsoft.