New England Patriots quarterback Tom Brady has often credited his success to spending countless hours studying his opponents’ movements on film. Understanding movement matters to all living species, whether that means figuring out the best angle for throwing a ball or perceiving the motion of predators and prey. But simple videos can’t give us the full picture.

That’s because traditional videos and photos for studying motion are two-dimensional, and don’t show us the underlying 3-D structure of the person or subject of interest. Without the full geometry, we can’t inspect the small and subtle movements that help us move faster or make sense of the precision needed to perfect our athletic form.

Recently, though, researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have come up with a way to get a better handle on this understanding of complex motion.

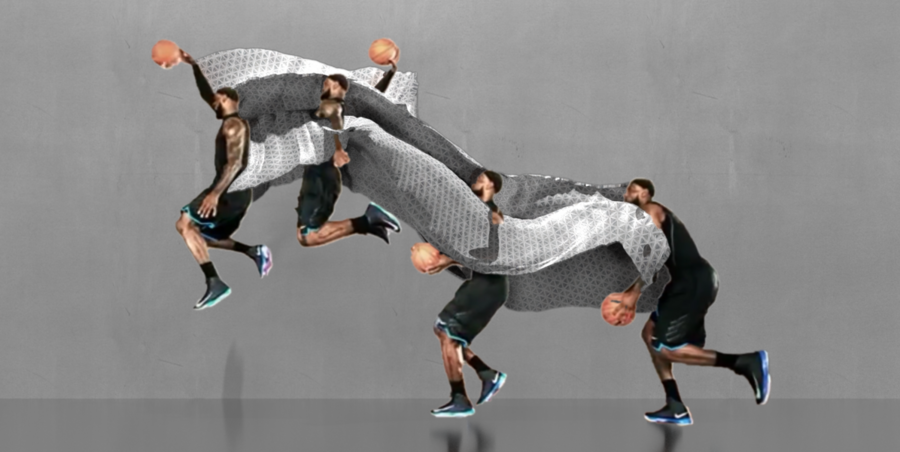

The new system uses an algorithm that can take 2-D videos and turn them into 3-D-printed “motion sculptures” that show how a human body moves through space.

In addition to being an intriguing aesthetic visualization of shape and time, the “MoSculp” system could enable a much more detailed study of motion for professional athletes, dancers, or anyone who wants to improve their physical skills.

“Imagine you have a video of Roger Federer serving a ball in a tennis match, and a video of yourself learning tennis,” says PhD student Xiuming Zhang, lead author of a new paper about the system. “You could then build motion sculptures of both scenarios to compare them and more comprehensively study where you need to improve.”

Because motion sculptures are 3-D, users can navigate around the structures in a computer interface and see them from different viewpoints, revealing motion-related information that is inaccessible from the original viewpoint.

Zhang wrote the paper alongside MIT professors of electrical engineering and computer science William Freeman and Stefanie Mueller, PhD student Jiajun Wu, Google researchers Qiurui He and Tali Dekel, as well as former CSAIL PhD students Andrew Owens and Tianfan Xue.

Bodies in motion

Artists and scientists have long struggled to gain better insight into movement, limited by what their camera lenses could capture.

Previous work has mostly used so-called “stroboscopic” photography techniques, which produce images that look a lot like the pages of a flip book stitched together. But since these photos only show snapshots of movement, viewers can’t see as much of the trajectory of a person’s arm when they’re hitting a golf ball, for example.

What’s more, these photographs also require laborious preshoot setup, such as using a clean background and specialized depth cameras and lighting equipment. All MoSculp needs is a video sequence.

Given an input video, the system first automatically detects 2-D key points on the subject’s body, such as the hip, knee, and ankle of a ballerina while she’s doing a complex dance sequence. Then, it selects the best possible poses from those points and turns them into 3-D “skeletons.”

After stitching these skeletons together, the system generates a motion sculpture that can be 3-D-printed, showing the smooth, continuous path of movement traced out by the subject. Users can customize their figures to focus on different body parts, assign different materials to distinguish among parts, and even customize lighting.
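To make the idea of stitching skeletons together more concrete, here is a minimal sketch in Python. It is an illustration only, not the researchers’ implementation: it assumes the 3-D joint positions for each frame are already available as an array, and it connects one “bone” (a pair of joints) across consecutive frames to form a triangulated ribbon, the kind of swept surface a 3-D printer could work from. The function name `sweep_bone` and the toy data are hypothetical.

```python
import numpy as np

def sweep_bone(joints, bone):
    """Return (vertices, triangles) for the ribbon swept by one bone over time.

    joints: array of shape (T, J, 3), assumed per-frame 3-D joint positions.
    bone:   pair of joint indices (a, b) naming the two ends of the bone.
    """
    a, b = bone
    num_frames = joints.shape[0]
    # Vertex 2*t is joint a at frame t; vertex 2*t + 1 is joint b at frame t.
    vertices = np.stack([joints[:, a], joints[:, b]], axis=1).reshape(2 * num_frames, 3)
    triangles = []
    for t in range(num_frames - 1):
        a0, b0, a1, b1 = 2 * t, 2 * t + 1, 2 * t + 2, 2 * t + 3
        # Two triangles fill the quad between the bone at frame t and frame t + 1.
        triangles.append((a0, b0, a1))
        triangles.append((b0, b1, a1))
    return vertices, np.array(triangles, dtype=int).reshape(-1, 3)

# Toy input: a vertical bone of length 1 sliding along the x-axis over 4 frames.
toy_joints = np.zeros((4, 2, 3))
toy_joints[:, 0, 0] = np.arange(4)
toy_joints[:, 1, 0] = np.arange(4)
toy_joints[:, 1, 1] = 1.0

verts, tris = sweep_bone(toy_joints, (0, 1))
print(verts.shape, tris.shape)  # (8, 3) (6, 3)
```

Repeating this for every bone of the skeleton would give a rough surface for the whole body. A real system would also need to estimate the 3-D poses from video and handle details such as surface thickness, which this sketch leaves out.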

In user studies, the researchers found that over 75 percent of subjects felt that MoSculp provided a more detailed visualization for studying motion than the standard photography techniques.

“Dance and highly skilled athletic motions often seem like ‘moving sculptures’ but they only create fleeting and ephemeral shapes,” says Aaron Hertzmann, a principal scientist at Adobe's Creative Intelligence Lab who was not involved in the research. “This work shows how to take motions and turn them into real sculptures with objective visualizations of movement, providing a way for athletes to analyze their movements for training, requiring no more equipment than a mobile camera and some computing time.”

The system works best for larger movements, like throwing a ball or taking a sweeping leap during a dance sequence. It also works for situations that might obstruct or complicate movement, such as if people are wearing loose clothing or carrying objects.

Currently, the system only handles single-person scenarios, but the team hopes to expand it to multiple people soon. This could open up the potential to study things like social disorders, interpersonal interactions, and team dynamics.

The team will present their paper on the system next month at the User Interface Software and Technology (UIST) conference in Berlin, Germany.