For children with speech and language disorders, early-childhood intervention can make a great difference in their later academic and social success. But many such children — one study estimates 60 percent — go undiagnosed until kindergarten or even later.

Researchers at the Computer Science and Artificial Intelligence Laboratory at MIT and Massachusetts General Hospital’s Institute of Health Professions hope to change that, with a computer system that can automatically screen young children for speech and language disorders and, potentially, even provide specific diagnoses.

This week, at the Interspeech conference on speech processing, the researchers reported on an initial set of experiments with their system, which yielded promising results. “We’re nowhere near finished with this work,” says John Guttag, the Dugald C. Jackson Professor in Electrical Engineering and senior author on the new paper. “This is sort of a preliminary study. But I think it’s a pretty convincing feasibility study.”

The system analyzes audio recordings of children’s performances on a standardized storytelling test, in which they are presented with a series of images and an accompanying narrative, and then asked to retell the story in their own words.

“The really exciting idea here is to be able to do screening in a fully automated way using very simplistic tools,” Guttag says. “You could imagine the storytelling task being totally done with a tablet or a phone. I think this opens up the possibility of low-cost screening for large numbers of children, and I think that if we could do that, it would be a great boon to society.”

Subtle signals

The researchers evaluated the system’s performance using a standard measure called area under the curve (specifically, the area under the receiver operating characteristic, or ROC, curve), which describes the tradeoff between exhaustively identifying members of a population who have a particular disorder, and limiting false positives. (Modifying the system to limit false positives generally results in limiting true positives, too.) A value of 0.5 corresponds to chance and 1.0 to a perfect test. In the medical literature, a diagnostic test with an area under the curve of about 0.7 is generally considered accurate enough to be useful; on three distinct clinically useful tasks, the researchers’ system ranged between 0.74 and 0.86.
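For readers unfamiliar with the measure, area under the curve can be read as the probability that a randomly chosen child with a disorder receives a higher score than a randomly chosen child without one. The sketch below computes it that way. The function name `roc_auc` and the toy labels and scores are illustrative assumptions, not the researchers’ code or data.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison.

    Equals the probability that a random positive example outscores
    a random negative example, with ties counted as half a win.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Toy data (made up): 1 = impairment, 0 = typically developing.
labels = [1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.7, 0.4, 0.8, 0.3, 0.2, 0.1]
auc = roc_auc(labels, scores)
print(round(auc, 3))
```

A screener that scored every child identically would come out at 0.5 under this definition, which is why values well above 0.5 are what matter.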

To build the new system, Guttag and Jen Gong, a graduate student in electrical engineering and computer science and first author on the new paper, used machine learning, in which a computer searches large sets of training data for patterns that correspond to particular classifications — in this case, diagnoses of speech and language disorders.

The training data had been amassed by Jordan Green and Tiffany Hogan, researchers at the MGH Institute of Health Professions, who were interested in developing more objective methods for assessing results of the storytelling test. “Better diagnostic tools are needed to help clinicians with their assessments,” says Green, himself a speech-language pathologist. “Assessing children’s speech is particularly challenging because of high levels of variation even among typically developing children. You get five clinicians in the room and you might get five different answers.”

Unlike speech impediments that result from anatomical characteristics such as cleft palates, speech disorders and language disorders both have neurological bases. But, Green explains, they affect different neural pathways: Speech disorders affect the motor pathways, while language disorders affect the cognitive and linguistic pathways.

Telltale pauses

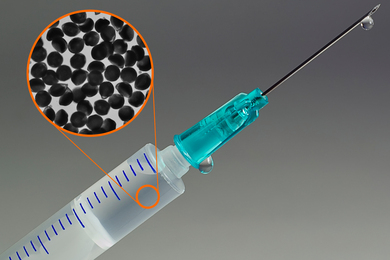

Green and Hogan had hypothesized that pauses in children’s speech, as they struggled to either find a word or string together the motor controls required to produce it, were a source of useful diagnostic data. So that’s what Gong and Guttag concentrated on. They identified a set of 13 acoustic features of children’s speech that their machine-learning system could search, seeking patterns that correlated with particular diagnoses. These were things like the number of short and long pauses, the average length of the pauses, the variability of their length, and similar statistics on uninterrupted utterances.
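To give a sense of what such features look like, here is a minimal sketch that derives a handful of pause and utterance statistics from a list of speech segments. It assumes the recording has already been split into (start, end) times in seconds by some voice-activity detector, and the one-second cutoff between short and long pauses is a made-up value. The article does not list the researchers’ 13 features or their thresholds, so the names below are hypothetical.

```python
from statistics import mean, pstdev


def pause_features(segments, long_pause_s=1.0):
    """Summarize pauses and utterances from (start, end) speech segments.

    `long_pause_s` is an illustrative threshold, not one from the study.
    """
    if not segments:
        raise ValueError("need at least one speech segment")
    segments = sorted(segments)
    utterances = [end - start for start, end in segments]
    # A pause is the silence between one segment's end and the next one's start.
    pauses = [
        nxt_start - end
        for (_, end), (nxt_start, _) in zip(segments, segments[1:])
        if nxt_start > end
    ]
    return {
        "n_short_pauses": sum(p < long_pause_s for p in pauses),
        "n_long_pauses": sum(p >= long_pause_s for p in pauses),
        "mean_pause_s": mean(pauses) if pauses else 0.0,
        "sd_pause_s": pstdev(pauses) if pauses else 0.0,
        "mean_utterance_s": mean(utterances),
        "sd_utterance_s": pstdev(utterances),
    }


# Toy example: three stretches of speech separated by a short and a long pause.
segments = [(0.0, 2.0), (2.5, 4.0), (6.0, 7.0)]
features = pause_features(segments)
print(features)
```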

The children whose performances on the storytelling task were recorded in the data set had been classified as typically developing, as suffering from a language impairment, or as suffering from a speech impairment. The machine-learning system was trained on three different tasks: identifying any impairment, whether speech or language; identifying language impairments; and identifying speech impairments.
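One way to picture the three tasks is as three yes-or-no labelings of the same set of children. The sketch below builds those labelings from a single diagnosis per child. The label strings and the choice to treat every child outside a task’s positive group as a negative example are assumptions; the article does not say how the researchers set up the comparison groups.

```python
# Which diagnoses count as a positive example for each task (assumed encoding).
TASKS = {
    "any_impairment": {"language", "speech"},
    "language_impairment": {"language"},
    "speech_impairment": {"speech"},
}


def binary_targets(diagnoses):
    """Turn one diagnosis per child into a 0/1 target list for each task."""
    return {
        task: [int(d in positives) for d in diagnoses]
        for task, positives in TASKS.items()
    }


# Toy cohort (made up).
diagnoses = ["typical", "language", "speech", "typical"]
targets = binary_targets(diagnoses)
print(targets)
```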

One obstacle the researchers had to confront was that the age range of the typically developing children in the data set was narrower than that of the children with impairments: Because impairments are comparatively rare, the researchers had to venture outside their target age range to collect data.

Gong addressed this problem using a statistical technique called residual analysis. First, she identified correlations between subjects’ age and gender and the acoustic features of their speech; then, for every feature, she corrected for those correlations before feeding the data to the machine-learning algorithm.
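The idea can be sketched as follows: fit a simple model that predicts a feature from age and gender, then keep only what the model fails to explain. The version below assumes an ordinary least-squares linear fit with gender coded as 0 or 1; the article does not specify the form of the correction, so this is an illustration of the general technique rather than Gong’s actual procedure. The helper names and toy numbers are hypothetical.

```python
def solve(a, b):
    """Solve a small linear system a x = b by Gauss-Jordan elimination."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        # Partial pivoting keeps the elimination numerically stable.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("predictors are collinear")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def residualize(feature, ages, genders):
    """Remove the part of `feature` linearly explained by age and gender."""
    rows = [[1.0, float(age), float(g)] for age, g in zip(ages, genders)]
    k = 3  # intercept, age, gender
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, feature)) for i in range(k)]
    beta = solve(xtx, xty)
    return [y - sum(b * x for b, x in zip(beta, r)) for r, y in zip(rows, feature)]


# Toy data (made up): mean pause length in seconds for six children.
ages = [4, 5, 6, 7, 5, 6]
genders = [0, 1, 0, 1, 1, 0]
mean_pause = [1.9, 1.4, 1.2, 0.8, 1.6, 1.1]
residuals = residualize(mean_pause, ages, genders)
print([round(r, 3) for r in residuals])
```

After this step, the corrected feature is uncorrelated with age and gender in the fitted sample, so a classifier trained on it cannot simply learn that older children pause less.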

“The need for reliable measures for screening young children at high risk for speech and language disorders has been discussed by early educators for decades,” says Thomas Campbell, a professor of behavioral and brain sciences at the University of Texas at Dallas and executive director of the university’s Callier Center for Communication Disorders. “The researchers’ automated approach to screening provides an exciting technological advancement that could prove to be a breakthrough in speech and language screening of thousands of young children across the United States.”