There’s only one place where you’ll find research on the Higgs boson and the quark-gluon plasma; ocean and climate modeling; ship hull design; molecular modeling of polymers; gravitational waves; and more, all in one 2,000-square-foot room. That place is MIT’s High Performance Research Computing Facility (HPRCF), where the numbers get run.

Half an hour’s drive from the MIT campus, in Middleton, Massachusetts, the HPRCF is housed in the MIT-Bates Research and Engineering Center. The staff at Bates provide maintenance and infrastructure support for the HPRCF, one of MIT’s three large computing centers. The facility is made up of 71 water-cooled racks, each supplied with 12 kilowatts of power, roughly the power needs of 10 American households per rack. A high-speed network link connects the facility’s computers and servers to MIT and to the rest of the world; the connection has just been upgraded to 100 gigabits per second, around 10,000 times the average U.S. household Internet speed. The HPRCF is presently 75 percent occupied, with some space still available for new research groups.
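The figures in this paragraph can be checked with a little arithmetic. The sketch below is a back-of-envelope calculation, not facility data: it assumes an average U.S. household draws about 1.2 kilowatts on average and has an Internet connection of about 10 megabits per second, and it reads the link speed as 100 gigabits per second. All constant and function names are illustrative.

```python
# Back-of-envelope check of the HPRCF figures quoted in the text.
# ASSUMED reference values (not from the article):
#   - average U.S. household power draw of about 1.2 kW
#   - average U.S. household Internet speed of about 10 Mbit/s
#   - link speed read as 100 gigabits (not gigabytes) per second

RACKS = 71
KW_PER_RACK = 12.0
HOUSEHOLD_AVG_KW = 1.2        # assumption
LINK_GBIT_PER_S = 100.0
HOUSEHOLD_MBIT_PER_S = 10.0   # assumption


def total_power_kw(racks: int, kw_per_rack: float) -> float:
    """Total power available to all racks, in kilowatts."""
    return racks * kw_per_rack


def households_per_rack(kw_per_rack: float, household_kw: float) -> float:
    """How many average households draw the same power as one rack."""
    return kw_per_rack / household_kw


def link_vs_household(link_gbit: float, household_mbit: float) -> float:
    """Ratio of the facility link speed to a household connection."""
    return (link_gbit * 1000.0) / household_mbit  # convert Gbit/s to Mbit/s


if __name__ == "__main__":
    print(f"Total rack power: {total_power_kw(RACKS, KW_PER_RACK):.0f} kW")
    print(f"Households per rack: {households_per_rack(KW_PER_RACK, HOUSEHOLD_AVG_KW):.0f}")
    print(f"Link vs. household: {link_vs_household(LINK_GBIT_PER_S, HOUSEHOLD_MBIT_PER_S):,.0f}x")
```

Under these assumptions, one rack corresponds to about 10 households, the link is about 10,000 times a household connection, and the 71 racks together have about 852 kilowatts available.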

Collisions, analyzed and simulated

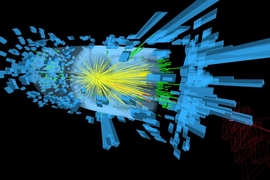

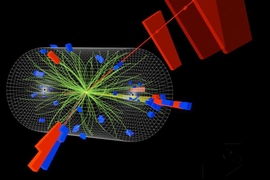

One of the earliest and largest users of the computing facility was the MIT group working on the Compact Muon Solenoid (CMS) experiment at the European Organization for Nuclear Research (CERN). The CMS is a detector that records particles and phenomena produced during high-energy collisions of protons or lead ions in CERN’s Large Hadron Collider (LHC). Earlier this year, the LHC restarted after a long shutdown, with higher collision energy and higher collision rates.

The CMS records around 30 million collisions per second and runs around 100 days per year, pausing only to refresh the supply of protons or ions. Researchers then need to analyze all of that data: They remove the background noise, determine which collisions are worth looking at, and run a series of complex calculations to identify particles and determine their properties. Finally, they have to combine the data from all these collisions and determine whether any phenomena they observe are statistically significant.
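The last step, deciding whether an observed excess is statistically significant, can be illustrated with a textbook counting experiment. The sketch below is a simplification, not the CMS analysis: it assumes the expected numbers of signal events s and background events b are known, and compares the rough estimate Z ≈ s/√b with the widely used “Asimov” approximation for Poisson counts. Particle physicists conventionally require about five standard deviations (Z ≈ 5) to claim a discovery.

```python
import math


def naive_significance(s: float, b: float) -> float:
    """Rough significance, in standard deviations, of s expected signal
    events on top of b expected background events: Z = s / sqrt(b)."""
    return s / math.sqrt(b)


def asimov_significance(s: float, b: float) -> float:
    """'Asimov' approximation for a Poisson counting experiment:
    Z = sqrt(2 * ((s + b) * ln(1 + s/b) - s)).
    It stays accurate when s is not small compared with b."""
    return math.sqrt(2.0 * ((s + b) * math.log1p(s / b) - s))


if __name__ == "__main__":
    # Hypothetical counts, chosen only to illustrate the formulas.
    for s, b in [(10, 10_000), (50, 100)]:
        print(s, b, round(naive_significance(s, b), 2),
              round(asimov_significance(s, b), 2))
```

For a small signal on a large background the two formulas agree; for 50 signal events on a background of 100, the simple ratio gives 5.0 standard deviations while the Asimov formula gives about 4.65, just short of the conventional discovery threshold.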

“If you never do this type of analysis, you might think this is really insanely complicated,” says Christoph Paus, a professor of physics who leads the MIT team involved in the experiment. “In the end, you just have to find the particles that emerge in the pictures that we record and reconstruct what happened in the collision.”

On top of the calculations to analyze empirical data, researchers use computer models to deepen their understanding of what they’re observing in the collider.

“We don't only take data from our detector, we also simulate it, to understand what’s going on in these events,” Paus says. “We actually simulate these events. I make two particles collide, I put all my physics knowledge into it, and I generate everything that should happen.”

This type of modeling is known as Monte Carlo simulation, in which a random process is repeated many times to estimate the probability of a given outcome. “They’re very computationally expensive to simulate, and we do this all the time,” Paus says.
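The Monte Carlo idea can be shown on a toy problem much simpler than a full collision: estimating what fraction of unstable particles decay inside a detector by drawing many random decay lengths and counting. The sketch assumes exponentially distributed decay lengths and uses hypothetical lengths in arbitrary units; because this toy case has an exact answer, 1 - exp(-L/λ), the estimate can be checked against it.

```python
import math
import random


def mc_decay_fraction(mean_decay_length: float, detector_length: float,
                      n_trials: int, seed: int = 0) -> float:
    """Monte Carlo estimate of the fraction of particles that decay within
    detector_length, given exponentially distributed decay lengths."""
    rng = random.Random(seed)
    rate = 1.0 / mean_decay_length  # expovariate takes a rate, not a mean
    decayed_inside = sum(
        1 for _ in range(n_trials) if rng.expovariate(rate) < detector_length
    )
    return decayed_inside / n_trials


def exact_decay_fraction(mean_decay_length: float, detector_length: float) -> float:
    """Closed-form answer for the same toy problem: 1 - exp(-L / lambda)."""
    return 1.0 - math.exp(-detector_length / mean_decay_length)


if __name__ == "__main__":
    # Hypothetical lengths in arbitrary units.
    for n in (100, 10_000, 100_000):
        print(n, round(mc_decay_fraction(2.0, 1.0, n), 4))
    print("exact", round(exact_decay_fraction(2.0, 1.0), 4))
```

The estimate converges toward the exact value as the number of trials grows, with a statistical error that shrinks roughly as one over the square root of the number of trials. Real event simulations replace the single random draw with a detailed chain of physics processes, which is what makes them so expensive.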

That process takes a lot of computing power, provided by about 50 computing centers worldwide, all connected through high-speed networks that bridge the geographic distance between collaborators. The HPRCF is one of those sites. Its resources were used in the analysis that led to the 2012 discovery of the Higgs boson, a subatomic particle predicted by the Standard Model of particle physics that helps explain why particles have mass.

“The Higgs boson was at the time the missing piece,” says Paus. “The [HPRCF] was one of maybe five computing centers around the world that specialized in the search for the Higgs boson and had a key role in the success of the search.”

The CMS group continues to rely on the HPRCF, now an even more powerful tool in the LHC network after its upgrade to a 100-gigabit-per-second connection, as researchers seek further evidence that the particle discovered in 2012 is the Higgs boson and explore new physics at the energy frontier.

Modeling crystals

The lab of Gregory Rutledge, professor of chemical engineering, moved its computers to the HPRCF several years ago. The group works on simulating the behavior of polymers to learn more about their material properties.

Many types of plastic — grocery bags, shampoo bottles, hard hats — are useful thanks to their flexibility and toughness. That valuable combination of properties comes from their semi-crystalline nature.

“They’re materials that are partially crystalline and partially amorphous,” says David Nicholson, a graduate student in the lab. “That lends itself to a number of nice properties, and it gives you some options when you’re making them to fine-tune this structure that you get.”

Researchers in the Rutledge group study the crystallization process in semi-crystalline materials. If the material is flowing in different ways during manufacturing, how does that affect the structure? What happens if you mix in an additive, such as a powder on whose surface crystals can form more easily?

For the most part, they do this through simulation, by creating computer models depicting the atoms and molecules in the materials as classical particles exerting forces on one another. The equations of classical mechanics determine how those particles will move and bond given a particular temperature and stress.
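A minimal sketch of this approach (classical molecular dynamics) follows. It is a deliberately tiny stand-in, not the Rutledge group’s code: two unit-mass particles on a line interact through a Lennard-Jones potential, a common generic choice rather than a polymer force field, in reduced units (ε = σ = 1), and Newton’s equations are advanced with the velocity Verlet integrator. It has no thermostat or applied stress, so temperature and strain are not controlled.

```python
def lj_energy(r: float, epsilon: float = 1.0, sigma: float = 1.0) -> float:
    """Lennard-Jones potential energy U(r) = 4*eps*((sigma/r)**12 - (sigma/r)**6)."""
    sr6 = (sigma / r) ** 6
    return 4.0 * epsilon * (sr6 * sr6 - sr6)


def lj_force(r: float, epsilon: float = 1.0, sigma: float = 1.0) -> float:
    """Force -dU/dr between the pair; positive means repulsive."""
    sr6 = (sigma / r) ** 6
    return 24.0 * epsilon * (2.0 * sr6 * sr6 - sr6) / r


def simulate_pair(r0: float, steps: int, dt: float = 0.001):
    """Velocity Verlet integration of two unit-mass particles on a line,
    starting at rest a distance r0 apart. Returns the separation and the
    total (kinetic + potential) energy after every step."""
    x = [0.0, r0]
    v = [0.0, 0.0]
    f = lj_force(x[1] - x[0])
    # Unit mass, so acceleration equals force; a repulsive force pushes
    # particle 0 left and particle 1 right.
    a = [-f, f]
    separations, energies = [], []
    for _ in range(steps):
        # 1. Move each particle using its current velocity and acceleration.
        for i in range(2):
            x[i] += v[i] * dt + 0.5 * a[i] * dt * dt
        # 2. Recompute the force at the new positions.
        f = lj_force(x[1] - x[0])
        new_a = [-f, f]
        # 3. Update velocities with the average of old and new accelerations.
        for i in range(2):
            v[i] += 0.5 * (a[i] + new_a[i]) * dt
        a = new_a
        r = x[1] - x[0]
        kinetic = 0.5 * (v[0] ** 2 + v[1] ** 2)
        separations.append(r)
        energies.append(kinetic + lj_energy(r))
    return separations, energies


if __name__ == "__main__":
    seps, energies = simulate_pair(r0=1.2, steps=2000)
    print(f"separation range: {min(seps):.3f} to {max(seps):.3f}")
    print(f"energy drift: {max(energies) - min(energies):.2e}")
```

Starting 1.2 apart, slightly beyond the potential minimum at about 1.12, the pair oscillates about that minimum while the total energy stays nearly constant, a standard check that the time step is small enough. Production simulations apply the same kind of update loop to very large numbers of atoms.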

“It’s all about statistics,” Nicholson says. “First of all, this structure is statistical in nature. There’s not one starting point, we average over [many]. And if we want to test different strain rates in materials, we have to do a lot of trials at each rate. That’s where the computation power adds up, in getting good statistics.”
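Nicholson’s point about statistics can be made concrete: the uncertainty on an average over N independent trials (the standard error) scales as 1/√N, so halving the error bar takes four times as many runs. In the sketch below, `run_trials` is a hypothetical stand-in that draws noisy numbers instead of running simulations; the mean of 1.0 and spread of 0.2 are arbitrary.

```python
import math
import random
import statistics


def run_trials(n_trials: int, seed: int = 1) -> list[float]:
    """HYPOTHETICAL stand-in for n independent simulation runs. Each trial
    returns one noisy measurement drawn from a normal distribution with
    mean 1.0 and standard deviation 0.2 (arbitrary illustrative values)."""
    rng = random.Random(seed)
    return [rng.gauss(1.0, 0.2) for _ in range(n_trials)]


def standard_error(samples: list[float]) -> float:
    """Standard error of the mean: sample standard deviation / sqrt(N)."""
    return statistics.stdev(samples) / math.sqrt(len(samples))


def trials_needed(spread: float, target_error: float) -> int:
    """Trials required for the standard error to reach target_error,
    from N = (spread / target_error) ** 2, rounded up."""
    return math.ceil((spread / target_error) ** 2)


if __name__ == "__main__":
    for n in (25, 100, 400, 1600):
        samples = run_trials(n)
        print(f"{n:5d} trials: mean = {statistics.fmean(samples):.4f} "
              f"+/- {standard_error(samples):.4f}")
```

Each fourfold increase in trials roughly halves the error bar, so repeating this at every strain rate of interest multiplies quickly into a large amount of computing time.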

Some experiments would not be possible in a reasonable timeframe without high-performance computing.

“When you want to run a bigger system, if you want to look at some phenomena that happens at a longer length scale or you just want to get better statistics, you need to rely on better computational resources,” Nicholson says. “When you have bigger computers, more processors, better connectivity between them — which is very important — you can run simulations a lot faster and get a lot more data.”

“Our new cluster at Bates is definitely a step up from what we had before,” he adds.

Engineers on the job

Established in the 1960s, Bates was founded, in some ways literally, on its electron accelerator. Below the building runs a series of tunnels that held the 1-gigaelectronvolt accelerator used in experiments until 2005.

The ambitious project was supported by an on-site team of scientific and technical staff who were up for the challenge. When 300-ton spectrometers needed to be pivoted to precise angles for different experiments, the team brought in a giant gun rotation mount from a U.S. Navy ship. To open and close the massive stone door to the building’s three-story-high Experimental Hall, they mounted the slab on high-pressure air pads.

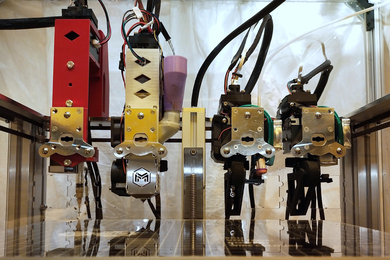

Nowadays, the engineers at Bates focus on supporting experimental nuclear and particle physics projects: They design, prototype, and build the equipment used for cutting-edge research. Bates also collaborates with industry, especially in medical physics and homeland security. The engineers have built particle tracking chambers, large magnets, and electron beam sources, and among other projects they are now working on proton therapy devices used to irradiate diseased tissue. Over the years, Bates has pioneered a number of experimental techniques.

“When you think about physics experiments, you’re really hitting the limits of technology,” says James Kelsey, associate director of Bates. He’s been at the center for nearly 30 years. “A lot of the stuff we’re building has never been built before because it’s a novel way of detecting something.”

Kelsey and another Bates engineer, Ernie Ihloff, designed the HPRCF after visiting a number of large computing facilities run by top tech companies and other universities. They chose water cooling to handle the computers’ massive heat output: Although air cooling was less expensive to install and more common at the time, water cooling was far more efficient. The team completed the project on time and under budget, and the HPRCF opened its doors to MIT research teams in 2009.
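A rough heat-balance calculation shows why water is so much more effective than air at carrying heat away. The sketch uses Q = ṁ·c_p·ΔT with assumed textbook property values near room temperature and an assumed 10-kelvin temperature rise in the coolant, for a rack running at its full 12 kilowatts; it illustrates the physics and is not how the HPRCF’s cooling was actually sized.

```python
# Coolant flow needed to carry away one rack's heat, from Q = m_dot * c_p * dT.
# ASSUMED textbook property values near room temperature (not facility data).
WATER_CP = 4186.0       # specific heat of water, J/(kg K)
WATER_DENSITY = 1000.0  # kg/m^3
AIR_CP = 1005.0         # specific heat of air, J/(kg K)
AIR_DENSITY = 1.2       # kg/m^3


def volume_flow_m3_per_s(heat_w: float, cp: float, density: float,
                         delta_t_k: float) -> float:
    """Volumetric flow (m^3/s) that removes heat_w watts while the coolant
    warms by delta_t_k kelvin."""
    mass_flow = heat_w / (cp * delta_t_k)  # kg/s
    return mass_flow / density


if __name__ == "__main__":
    rack_heat_w = 12_000.0  # a rack running at its full 12 kW
    delta_t = 10.0          # assumed coolant temperature rise, K
    water = volume_flow_m3_per_s(rack_heat_w, WATER_CP, WATER_DENSITY, delta_t)
    air = volume_flow_m3_per_s(rack_heat_w, AIR_CP, AIR_DENSITY, delta_t)
    print(f"water: {water * 1000:.2f} L/s, air: {air:.2f} m^3/s, "
          f"ratio: {air / water:,.0f}")
```

Under these assumptions a rack needs roughly 0.29 liters of water per second but about one cubic meter of air per second, a volume ratio of roughly 3,500.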

Round-the-clock support

Bates offers 24/7 support to users of its facility, with a team on call to respond to power outages or other emergencies. The staff also bring a creativity and flexibility that let researchers spend less time worrying about the logistics of their computing and more time thinking about their research.

“I think the big thing is our ability to respond to users’ requests,” Kelsey says. “When they come up here, if they have a special request, we can usually accommodate them or point them in the right direction.”

When the microprocessor-controlled fans in some of the racks broke after just a few years, Kelsey looked into the cost of replacement: It was $500 per fan. There were six fans in each of the 71 racks. “We brought it downstairs, worked with one of our techs, and we found it was just the bearings that were failing,” Kelsey says. They were able to make the fixes in-house for a fraction of the cost of new fans.
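Multiplying out the numbers in the paragraph above gives the scale of what a full replacement would have cost. This is an upper bound that rests on an assumption the article does not make, namely that every fan in every rack would have been replaced; the names are illustrative.

```python
FANS_PER_RACK = 6
RACKS = 71
PRICE_PER_FAN_USD = 500


def full_replacement_cost(fans_per_rack: int, racks: int,
                          price_per_fan: int) -> tuple[int, int]:
    """Return (number of fans, total cost in dollars) for replacing every fan.
    Assumes all fans in all racks are replaced (an upper bound)."""
    total_fans = fans_per_rack * racks
    return total_fans, total_fans * price_per_fan


if __name__ == "__main__":
    fans, cost = full_replacement_cost(FANS_PER_RACK, RACKS, PRICE_PER_FAN_USD)
    print(f"{fans} fans, ${cost:,}")  # prints "426 fans, $213,000"
```

That comes to 426 fans and $213,000 before any in-house repair.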

“That’s the kind of environment we’re in,” Kelsey says. “We make it work.”