Media Outlet: Scientific American

Publication Date:

Description:

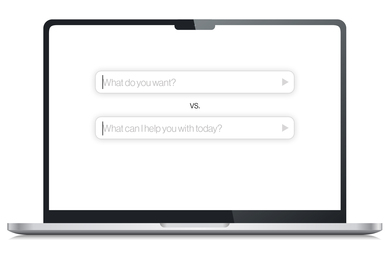

MIT researchers have found that user bias can drive interactions with AI chatbots, reports Nick Hilden for Scientific American. “When people think that the AI is caring, they become more positive toward it,” graduate student Pat Pataranutaporn explains. “This creates a positive reinforcement feedback loop where, at the end, the AI becomes much more positive, compared to the control condition. And when people believe that the AI was manipulative, they become more negative toward the AI—and it makes the AI become more negative toward the person as well.”