A growing number of people are living with conditions that could benefit from physical rehabilitation, but there aren’t enough physical therapists (PTs) to go around. The need for PTs is rising in step with population growth, and aging and higher rates of severe ailments are adding to the problem.

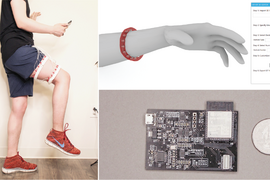

An upsurge in sensor-based techniques, such as on-body motion sensors, has provided some autonomy and precision for patients who could benefit from robotic systems that supplement human therapists. Still, the minimalist watches and rings currently available rely largely on motion data, which lacks the more holistic picture a physical therapist pieces together, including muscle engagement and tension in addition to movement.

This muscle-motion language barrier recently prompted the creation of an unsupervised physical rehabilitation system, MuscleRehab, by researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and Massachusetts General Hospital. The system has three ingredients: motion tracking that captures motion activity, an imaging technique called electrical impedance tomography (EIT) that measures what the muscles are up to, and a virtual reality (VR) headset and tracking suit that let a patient watch themselves perform alongside a physical therapist.

Patients put on the sleek, ninja-esque, all-black tracking suit and then perform various exercises, such as lunges, knee bends, deadlifts, leg raises, knee extensions, squats, fire hydrants, and bridges, while the system measures the activity of the quadriceps, sartorius, hamstrings, and abductors. VR captures 3D movement data.

In the virtual environment, patients exercise under two conditions. In both, their avatar performs alongside a physical therapist. In the first, only the motion tracking data is overlaid onto the patient’s avatar. In the second, the patient puts on the EIT sensing straps and sees both the motion and the muscle engagement information.

With these two conditions, the team compared the exercise accuracy and handed the results to a professional therapist, who explained which muscle groups were supposed to be engaged during each of the exercises. By visualizing both muscle engagement and motion data during these unsupervised exercises instead of just motion alone, the overall accuracy of exercises improved by 15 percent.

The team then compared, across the two conditions, how much of the exercise time the correct muscle group was triggered. In the condition where muscle engagement data is shown in real time, that display serves as the feedback. With the engagement data monitored and recorded, the PTs reported a much better understanding of the quality of the patient’s exercise, and said the statistics helped them better evaluate the patient’s current regimen and exercises.
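To make the cross-comparison concrete, here is a minimal Python sketch of one way such a metric could be computed. It is not the team’s analysis code: the function name `engaged_fraction`, the 0-to-1 engagement scale, the 0.5 threshold, and the sample traces are all assumptions made purely for illustration.

```python
from typing import Sequence


def engaged_fraction(target_engagement: Sequence[float], threshold: float = 0.5) -> float:
    """Return the fraction of samples in which the target muscle's
    engagement is at or above the threshold.

    Assumes engagement is normalized to [0, 1] and sampled at a fixed rate,
    so the fraction of samples equals the fraction of exercise time.
    """
    if len(target_engagement) == 0:
        raise ValueError("need at least one engagement sample")
    engaged_samples = sum(1 for value in target_engagement if value >= threshold)
    return engaged_samples / len(target_engagement)


# Made-up traces for the same exercise under the two conditions.
motion_only = [0.2, 0.6, 0.4, 0.7, 0.3, 0.1, 0.8, 0.4]
motion_and_muscle = [0.6, 0.7, 0.4, 0.9, 0.8, 0.5, 0.7, 0.3]

fraction_motion_only = engaged_fraction(motion_only)
fraction_with_muscle = engaged_fraction(motion_and_muscle)

print(f"motion only:       {fraction_motion_only:.3f}")
print(f"motion and muscle: {fraction_with_muscle:.3f}")
```

A higher fraction in the second condition would indicate that the target muscle group was active for more of the exercise when patients could see their muscle engagement.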

“We wanted our sensing scenario to not be limited to a clinical setting, to better enable data-driven unsupervised rehabilitation for athletes in injury recovery, patients currently in physical therapy, or those with physical limiting ailments, to ultimately see if we can assist with not only recovery, but perhaps prevention,” says Junyi Zhu, MIT PhD student in electrical engineering and computer science, CSAIL affiliate, and lead author on a new paper about MuscleRehab. “By actively measuring deep muscle engagement, we can observe if the data is abnormal compared to a patient's baseline, to provide insight into the potential muscle trajectory.”

Current sensing technologies focus mostly on tracking behaviors and heart rates, but Zhu was interested in finding a better way than electromyography (EMG) to sense the engagement (blood flow, stretching, contracting) of different layers of the muscles. EMG only captures muscle activity right beneath the skin, unless it’s done invasively.

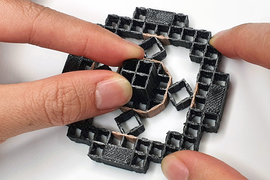

Zhu has been digging into the realm of personal health-sensing devices for some time now. He’d been inspired by his use of EIT, which measures the electrical conductivity of muscles, in a 2021 project that used the noninvasive imaging technique to create a toolkit for designing and fabricating health and motion sensing devices. To his knowledge, EIT, which is usually used for monitoring lung function, detecting chest tumors, and diagnosing pulmonary embolism, hadn’t been used this way before.

With MuscleRehab, the EIT sensing board serves as the “brains” behind the system. It’s accompanied by two straps filled with electrodes that are slipped onto a user’s upper thigh to capture 3D volumetric data. The motion capture process uses 39 markers and a number of cameras that record at very high frame rates. The EIT sensing data shows actively triggered muscles highlighted on the display, and a given muscle becomes darker with more engagement.
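The “darker with more engagement” display can be pictured as a simple color mapping. The Python sketch below is an illustrative assumption, not MuscleRehab’s rendering code: the function name `engagement_to_color`, the 0-to-1 engagement scale, and the two endpoint colors are all hypothetical.

```python
from typing import Tuple

# Hypothetical endpoint colors (RGB): a pale highlight for a relaxed muscle
# and a dark one for a fully engaged muscle.
LIGHT_COLOR = (255, 200, 200)
DARK_COLOR = (120, 0, 0)


def engagement_to_color(engagement: float) -> Tuple[int, int, int]:
    """Linearly interpolate from LIGHT_COLOR to DARK_COLOR.

    Assumes engagement is normalized to [0, 1]; values outside that
    range are clamped before interpolation.
    """
    clamped = min(1.0, max(0.0, engagement))
    red, green, blue = (
        round(light + (dark - light) * clamped)
        for light, dark in zip(LIGHT_COLOR, DARK_COLOR)
    )
    return (red, green, blue)


for level in (0.0, 0.25, 0.75, 1.0):
    print(level, engagement_to_color(level))
```

Clamping keeps a noisy reading from producing an invalid color, and a linear ramp keeps the relationship between shade and engagement easy to read at a glance.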

Currently, MuscleRehab focuses on the upper thigh and the major muscle groups inside, but down the line the team would like to expand to the glutes. The team is also exploring potential avenues for using EIT in radiotherapy, in collaboration with Piotr Zygmanski, medical physicist at Brigham and Women’s Hospital and Dana-Farber Cancer Institute and associate professor of radiation at Harvard Medical School.

“We are exploring utilization of electrical fields and currents for detection of radiation as well as for imaging of the dielectric properties of patient anatomy during radiotherapy treatment, or as a result of the treatment,” says Zygmanski. “Radiation induces currents inside tissues and cells and other media, for instance detectors, in addition to making direct damage at the molecular level (DNA damage). We have found the EIT instrumentation developed by the MIT team to be particularly suitable for exploring such novel applications of EIT in radiotherapy. We are hoping that with the customization of the electronic parameters of the EIT system we can achieve these goals.”

“This work advances EIT, a sensing approach conventionally used in clinical settings, with an ingenious and unique combination with virtual reality,” says Yang Zhang, assistant professor in electrical and computer engineering at the UCLA Samueli School of Engineering, who was not involved in the paper. “The enabled application that facilitates rehabilitation potentially has a wide impact across society to help patients conduct physical rehabilitation safely and effectively at home. Such tools to eliminate the need for clinical resources and personnel have long been needed for the lack of workforce in healthcare.”

The paper’s MIT co-authors are graduate students Yuxuan Lei and Gila Schein, MIT undergraduate student Aashini Shah, and MIT Professor Stefanie Mueller, all CSAIL affiliates. Other authors are Hamid Ghaednia, instructor at the Department of Orthopaedic Surgery of Harvard Medical School and co-director of the Center for Physical Artificial Intelligence at Mass General Hospital; Joseph Schwab, chief of the Orthopaedic Spine Center, director of spine oncology, co-director of the Stephan L. Harris Chordoma Center, and associate professor of orthopedic surgery at Harvard Medical School; as well as Casper Harteveld, associate dean and professor at Northeastern University. They will present the paper at the ACM Symposium on User Interface Software and Technology later this month.

This research was carried out, in part, using the tools and capabilities of the MIT.nano Immersion Lab.