In a September 2020 essay in Nature Energy, three scientists posed several “grand challenges” — one of which was to find suitable materials for thermal energy storage devices that could be used in concert with solar energy systems. Fortuitously, Mingda Li — the Norman C. Rasmussen Assistant Professor of Nuclear Science and Engineering at MIT, who heads the department’s Quantum Matter Group — was already thinking along similar lines. In fact, Li and nine collaborators (from MIT, Lawrence Berkeley National Laboratory, and Argonne National Laboratory) were developing a new methodology, involving a novel machine-learning approach, that would make it faster and easier to identify materials with favorable properties for thermal energy storage and other uses.

The results of their investigation appear this month in a paper for Advanced Science. “This is a revolutionary approach that promises to accelerate the design of new functional materials,” comments physicist Jaime Fernandez-Baca, a distinguished staff member at Oak Ridge National Laboratory.

A central challenge in materials science, Li and his coauthors write, is to “establish structure-property relationships” — to figure out the characteristics a material with a given atomic structure would have. Li’s team focused, in particular, on using structural knowledge to predict the “phonon density of states,” which has a critical bearing on thermal properties.

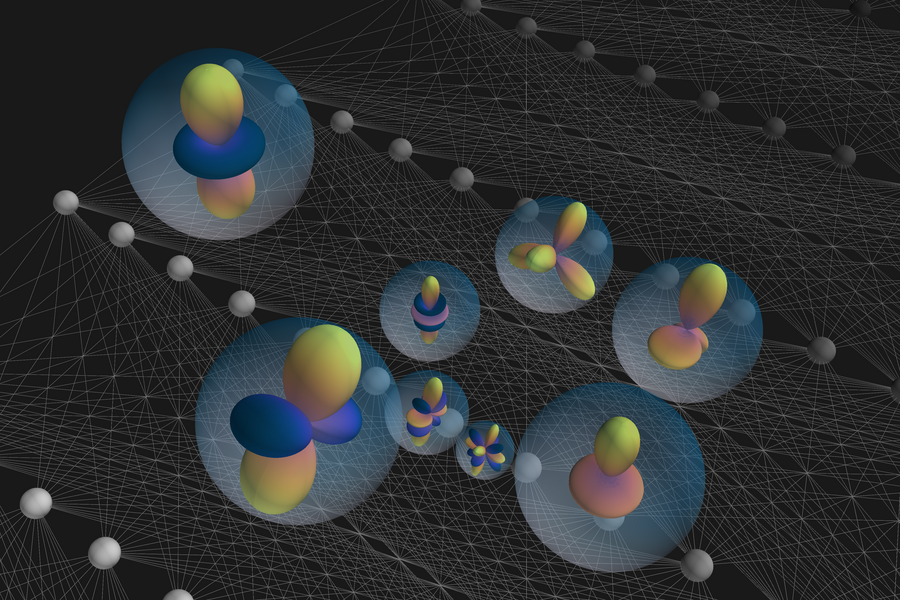

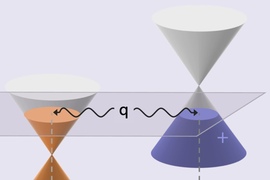

To understand that term, it’s best to start with the word phonon. “A crystalline material is composed of atoms arranged in a lattice structure,” explains Nina Andrejevic, a PhD student in materials science and engineering. “We can think of these atoms as spheres connected by springs, and thermal energy causes the springs to vibrate. And those vibrations, which only occur at discrete [quantized] frequencies or energies, are what we call phonons.”

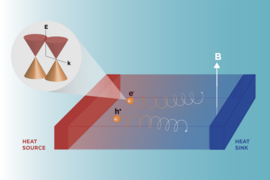

The phonon density of states is simply the number of vibrational modes, or phonons, found within a given frequency or energy range. Knowing the phonon density of states, one can determine a material’s heat capacity as well as its thermal conductivity, which relates to how readily heat passes through a material, and even the superconducting transition temperature in a superconductor. “For thermal energy storage purposes, you want a material with a high specific heat, which means it can take in heat without a sharp rise in temperature,” Li says. “You also want a material with low thermal conductivity so that it retains its heat longer.”
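To make the link between a density-of-states curve and heat capacity concrete, here is a minimal sketch in Python. It uses the standard harmonic-approximation formula, in which each vibrational mode of frequency ω contributes k_B · x² eˣ / (eˣ − 1)² with x = ħω / (k_B T). The density of states itself is a toy Debye model with a hypothetical 400 K Debye temperature, chosen only for illustration; it is not data from the paper, and the function names are made up for this example.

```python
import math

K_B = 1.380649e-23       # Boltzmann constant, J/K
HBAR = 1.054571817e-34   # reduced Planck constant, J*s


def debye_dos(omega, omega_d, n_modes=3.0):
    """Toy Debye density of states (an assumption, not data from the paper).

    Normalized so that its integral from 0 to omega_d equals n_modes.
    """
    if omega < 0.0 or omega > omega_d:
        return 0.0
    return 3.0 * n_modes * omega ** 2 / omega_d ** 3


def heat_capacity(omegas, dos, temperature):
    """Harmonic heat capacity in J/K from a tabulated density of states."""
    def integrand(omega, g):
        x = HBAR * omega / (K_B * temperature)
        if x == 0.0:
            return g  # the weight x^2 e^x / (e^x - 1)^2 tends to 1 as x -> 0
        # Written with exp(-x) so large x underflows to 0 instead of overflowing.
        return g * x * x * math.exp(-x) / math.expm1(-x) ** 2

    values = [integrand(w, g) for w, g in zip(omegas, dos)]
    total = 0.0
    for i in range(1, len(omegas)):  # trapezoidal rule
        total += 0.5 * (values[i] + values[i - 1]) * (omegas[i] - omegas[i - 1])
    return K_B * total


if __name__ == "__main__":
    omega_d = K_B * 400.0 / HBAR  # cutoff for a hypothetical 400 K Debye temperature
    n = 2000
    omegas = [omega_d * (i / n) for i in range(n + 1)]
    dos = [debye_dos(w, omega_d) for w in omegas]
    for t in (20.0, 300.0, 4000.0):
        ratio = heat_capacity(omegas, dos, t) / (3.0 * K_B)
        print(f"T = {t:6.0f} K   C / 3k_B = {ratio:.3f}")
```

Well above the Debye temperature the printed ratio approaches 1, the classical Dulong-Petit limit of 3k_B per atom, while at low temperature it falls far below that. A measured or predicted density of states could be passed to `heat_capacity` in place of the toy curve.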

The phonon density of states, however, is a difficult quantity to measure experimentally or to compute theoretically. “For a measurement like this, one has to go to a national laboratory to use a large instrument, about 10 meters long, in order to get the energy resolution you need,” Li says. “That’s because the signal we’re looking for is very weak.”

“And if you want to calculate the phonon density of states, the most accurate way of doing so relies on density functional perturbation theory (DFPT),” notes Zhantao Chen, a mechanical engineering PhD student. “But those calculations scale with the fourth order of the number of atoms in the crystal’s basic building block, which could require days of computing time on a CPU cluster.” For alloys, which contain two or more elements, the calculations become much harder, possibly taking weeks or even longer.
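A quick back-of-the-envelope sketch shows why fourth-power scaling becomes prohibitive. The only input taken from the text is the exponent of 4; the helper name `relative_cost` and the cell sizes are hypothetical, and real DFPT run times also depend on prefactors, symmetry, and hardware that this ignores.

```python
def relative_cost(n_atoms, n_ref, exponent=4):
    """Cost of an n_atoms cell relative to an n_ref cell, assuming cost ~ N**exponent."""
    return (n_atoms / n_ref) ** exponent


if __name__ == "__main__":
    # Compare several cell sizes against a hypothetical 2-atom reference cell.
    for n in (2, 4, 10, 20):
        print(f"{n:3d} atoms: {relative_cost(n, 2):g}x the reference cost")
```

Under this assumption, doubling the number of atoms in the cell multiplies the cost by 16, and a tenfold increase multiplies it by 10,000.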

The new method, says Li, could reduce those computational demands to a few seconds on a PC. Rather than trying to calculate the phonon density of states from first principles, which is clearly a laborious task, his team employed a neural network approach, utilizing artificial intelligence algorithms that enable a computer to learn from example. The idea was to present the neural network with enough data on a material’s atomic structure and its associated phonon density of states that the network could discern the key patterns connecting the two. After “training” in this fashion, the network would hopefully make reliable density of states predictions for a substance with a given atomic structure.

Predictions are difficult, Li explains, because the phonon density of states cannot be described by a single number but only by a curve (analogous to the spectrum of light given off at different wavelengths by a luminous object). “Another challenge is that we only have trustworthy [density of states] data for about 1,500 materials. When we first tried machine learning, the dataset was too small to support accurate predictions.”

His group then teamed up with Lawrence Berkeley physicist Tess Smidt '12, a co-inventor of so-called Euclidean neural networks. “Training a conventional neural network normally requires datasets containing hundreds of thousands to millions of examples,” Smidt says. A significant part of that data demand stems from the fact that a conventional neural network does not understand that a 3D pattern and a rotated version of the same pattern are related and actually represent the same thing. Before it can recognize 3D patterns — in this case, the precise geometric arrangement of atoms in a crystal — a conventional neural network first needs to be shown the same pattern in hundreds of different orientations.

“Because Euclidean neural networks understand geometry — and recognize that rotated patterns still ‘mean’ the same thing — they can extract the maximal amount of information from a single sample,” Smidt adds. As a result, a Euclidean neural network trained on 1,500 examples can outperform a conventional neural network trained on 500 times more data.
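The point about rotated patterns can be illustrated with a small sketch. It is not the Euclidean neural network itself, which builds the symmetry into its layers. It only shows, with a hand-built descriptor, that raw coordinates change under rotation while a geometric summary such as the set of interatomic distances does not. The four-atom cluster and the function names are hypothetical.

```python
import math


def rotate_z(points, angle):
    """Rotate a list of (x, y, z) points about the z-axis by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return [(c * x - s * y, s * x + c * y, z) for x, y, z in points]


def sorted_pair_distances(points):
    """Sorted list of all pairwise distances: a rotation-invariant descriptor."""
    distances = []
    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            distances.append(math.dist(points[i], points[j]))
    return sorted(distances)


if __name__ == "__main__":
    # Hypothetical four-atom cluster, coordinates in arbitrary units.
    atoms = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 2.0, 0.0), (0.0, 0.0, 3.0)]
    rotated = rotate_z(atoms, 0.7)

    print("raw coordinates identical:", atoms == rotated)
    same = all(
        abs(a - b) < 1e-12
        for a, b in zip(sorted_pair_distances(atoms), sorted_pair_distances(rotated))
    )
    print("pairwise distances identical:", same)
```

A model fed raw coordinates sees the two inputs as different and has to learn from many rotated copies that they are the same structure. A model that respects the symmetry by construction gets that knowledge for free, which is the data saving Smidt describes.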

Using the Euclidean neural network, the team predicted phonon density of states for 4,346 crystalline structures. They then selected the materials with the 20 highest heat capacities, comparing the predicted density of states values with those obtained through time-consuming DFPT calculations. The agreement was remarkably close.

The approach can be used to pick out promising thermal energy storage materials, in keeping with the aforementioned “grand challenge,” Li says. “But it could also greatly facilitate alloy design, because we can now determine the density of states for alloys just as easily as for crystals. That, in turn, offers a huge expansion in possible materials we could consider for thermal storage, as well as many other applications.”

Some applications have, in fact, already begun. Computer code from the MIT group has been installed on machines at Oak Ridge, enabling researchers to predict the phonon density of states of a given material based on its atomic structure.

Andrejevic points out, moreover, that Euclidean neural networks have even broader potential that is as yet untapped. “They can help us figure out important material properties besides the phonon density of states. So this could open up the field in a big way.”

This research was funded by the U.S. Department of Energy Office of Science, National Science Foundation, and Lawrence Berkeley National Laboratory.