Humans have been teaming up with machines throughout history to achieve goals, be it by using simple machines to move materials or complex machines to travel in space. But advances in artificial intelligence today bring possibilities for even more sophisticated teamwork — true human-machine teams that cooperate to solve complex problems.

Much of the development of these human-machine teams focuses on the machine, tackling the technology challenges of training AI algorithms to perform their role in a mission effectively. But less focus, MIT Lincoln Laboratory researchers say, has been given to the human side of the team. What if the machine works perfectly, but the human is struggling?

"In the area of human-machine teaming, we often think about the technology — for example, how do we monitor it, understand it, make sure it's working right. But teamwork is a two-way street, and these considerations aren't happening both ways. What we're doing is looking at the flip side, where the machine is monitoring and enhancing the other side — the human," says Michael Pietrucha, a tactical systems specialist at the laboratory.

Pietrucha is part of a team of laboratory researchers that aims to develop AI systems that can sense when a person's cognitive fatigue is interfering with their performance. The system would then suggest interventions, or even take action in dire scenarios, to help the individual recover or to prevent harm.

"Throughout history, we see human error leading to mishaps, missed opportunities, and sometimes disastrous consequences," says Megan Blackwell, former deputy lead of internally funded biological science and technology research at the laboratory. "Today, neuromonitoring is becoming more specific and portable. We envision using technology to monitor for fatigue or cognitive overload. Is this person attending to too much? Will they run out of gas, so to speak? If you can monitor the human, you could intervene before something bad happens."

This vision has its roots in decades-long research at the laboratory in using technology to "read" a person's cognitive or emotional state. By collecting biometric data — such as video and audio recordings of a person speaking — and processing these data with advanced AI algorithms, researchers have uncovered biomarkers of various psychological and neurobehavioral conditions. These biomarkers have been used to train models that can accurately estimate the level of a person's depression, for example.

In this work, the team will apply their biomarker research to AI that can analyze an individual's cognitive state, encapsulating how fatigued, stressed, or overloaded a person is feeling. The system will use biomarkers derived from physiological data such as vocal and facial recordings, heart rate, EEG and optical indications of brain activity, and eye movement to gain these insights.

The first step will be to build a cognitive model of an individual. "The cognitive model will integrate the physiological inputs and monitor the inputs to see how they change as a person performs particular fatiguing tasks," says Thomas Quatieri, who leads several neurobehavioral biomarker research efforts at the laboratory. "Through this process, the system can establish patterns of activity and learn a person's baseline cognitive state involving basic task-related functions needed to avoid injury or undesirable outcomes, such as auditory and visual attention and response time."

Once this individualized baseline is established, the system can start to recognize deviations from normal and predict if those deviations will lead to mistakes or poor performance.
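As a rough illustration of the baseline-and-deviation idea described above, the sketch below fits a per-person baseline from a few physiological readings and flags features that drift far from it. This is not the laboratory's model: the feature names (`heart_rate`, `response_time_ms`), the helper functions, and the z-score threshold are all hypothetical choices made for the example, and a simple z-score stands in for whatever the researchers' cognitive model actually computes.

```python
from statistics import mean, stdev


def fit_baseline(samples):
    """Return {feature: (mean, stdev)} from readings taken during normal performance.

    `samples` is a list of dicts mapping a feature name to a numeric value.
    The feature names are illustrative assumptions, not the real inputs.
    """
    features = list(samples[0].keys())
    baseline = {}
    for f in features:
        values = [s[f] for s in samples]
        baseline[f] = (mean(values), stdev(values))
    return baseline


def deviation_scores(baseline, reading):
    """Return a z-score per feature: how many standard deviations from baseline."""
    scores = {}
    for f, (mu, sd) in baseline.items():
        scores[f] = (reading[f] - mu) / sd if sd > 0 else 0.0
    return scores


def flag_deviations(baseline, reading, threshold=3.0):
    """Return the sorted names of features whose |z-score| exceeds the threshold.

    The threshold of 3.0 is an arbitrary assumption for this sketch.
    """
    scores = deviation_scores(baseline, reading)
    return sorted(f for f, z in scores.items() if abs(z) > threshold)


# Hypothetical readings recorded while a person performs normally.
normal = [
    {"heart_rate": 60, "response_time_ms": 300},
    {"heart_rate": 62, "response_time_ms": 310},
    {"heart_rate": 64, "response_time_ms": 320},
]
baseline = fit_baseline(normal)

# A later reading with a much slower response time.
flagged = flag_deviations(baseline, {"heart_rate": 63, "response_time_ms": 400})
```

Flagging a deviation is only the first half of what the article describes. Deciding whether a flagged deviation will actually hurt task performance, or will be compensated for naturally, would need a separate predictive step that this sketch does not attempt.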

"Building a model is hard. You know you got it right when it predicts performance," says William Streilein, principal staff in the Lincoln Lab's Homeland Protection and Air Traffic Control Division. "We've done well if the system can identify a deviation, and then actually predict that the deviation is going to interfere with the person's performance on a task. Humans are complex; we compensate naturally to stress or fatigue. What's important is building a system that can predict when that deviation won't be compensated for, and to only intervene then."

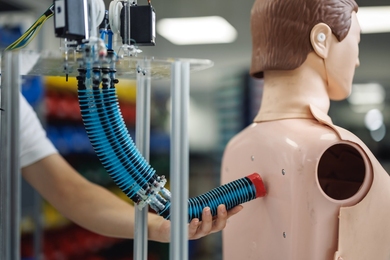

The possibilities for interventions are wide-ranging. On one end of the spectrum are minor adjustments a human can make to restore performance: drink coffee, change the lighting, get fresh air. Other interventions could suggest a shift change or transfer of a task to a machine or other teammate. Another possibility is using transcranial direct current stimulation, a performance-restoring technique that uses electrodes to stimulate parts of the brain and has been shown to be more effective than caffeine in countering fatigue, with fewer side effects.

On the other end of the spectrum, the machine might take actions necessary to ensure the survival of the human team member when the human is incapable of doing so. For example, an AI teammate could make the "ejection decision" for a fighter pilot who has lost consciousness or the physical ability to eject themselves. Pietrucha, a retired colonel in the U.S. Air Force who has had many flight hours as a fighter/attack aviator, sees the promise of such a system that "goes beyond the mere analysis of flight parameters and includes analysis of the cognitive state of the aircrew, intervening only when the aircrew can't or won't," he says.

Determining the most helpful intervention, and its effectiveness, depends on a number of factors related to the task at hand, dosage of the intervention, and even a user's demographic background. "There's a lot of work to be done still in understanding the effects of different interventions and validating their safety," Streilein says. "Eventually, we want to introduce personalized cognitive interventions and assess their effectiveness on mission performance."

Beyond its use in combat aviation, the technology could benefit other demanding or dangerous jobs, such as those related to air traffic control, combat operations, disaster response, or emergency medicine. "There are scenarios where combat medics are vastly outnumbered, are in taxing situations, and are every bit as tired as everyone else. Having this kind of over-the-shoulder help, something to help monitor their mental status and fatigue, could help prevent medical errors or even alert others to their level of fatigue," Blackwell says.

Today, the team is pursuing sponsorship to help develop the technology further. The coming year will be focused on collecting data to train their algorithms. The first subjects will be intelligence analysts, outfitted with sensors as they play a serious game that simulates the demands of their job. "Intelligence analysts are often overwhelmed by data and could benefit from this type of system," Streilein says. "The fact that they usually do their job in a 'normal' room environment, on a computer, allows us to easily instrument them to collect physiological data and start training."

"We'll be working on a basic set of capabilities in the near term," Quatieri says, "but an ultimate goal would be to leverage those capabilities so that, while the system is still individualized, it could be a more turnkey capability that could be deployed widely, similar to how Siri, for example, is universal but adapts quickly to an individual." In the long view, the team sees the promise of a universal background model that could represent anyone and be adapted for a specific use.

Such a capability may be key to advancing human-machine teams of the future. As AI progresses to achieve more human-like capabilities, while being immune to the human condition of mental stress, it's possible that humans may present the greatest risk to mission success. An AI teammate may know just how to lift its partner up.