Would you like to rid the internet of false political news stories and misinformation? Then consider using — yes — crowdsourcing.

That’s right. A new study co-authored by an MIT professor shows that crowdsourced judgments about the quality of news sources may effectively marginalize false news stories and other kinds of online misinformation.

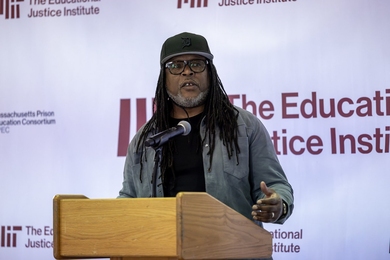

“What we found is that, while there are real disagreements among Democrats and Republicans concerning mainstream news outlets, basically everybody — Democrats, Republicans, and professional fact-checkers — agree that the fake and hyperpartisan sites are not to be trusted,” says David Rand, an MIT scholar and co-author of a new paper detailing the study’s results.

Indeed, using a pair of public-opinion surveys to evaluate 60 news sources, the researchers found that Democrats trusted mainstream media outlets more than Republicans did, with the exception of Fox News, which Republicans trusted far more than Democrats did. But when it came to lesser-known sites peddling false information, as well as “hyperpartisan” political websites (the researchers include Breitbart and Daily Kos in this category), both Democrats and Republicans showed a similar disregard for such sources.

Trust levels for these alternative sites were low overall. For instance, in one survey, when respondents were asked to rate their trust in news outlets on a scale from 1 to 5, hyperpartisan websites received a trust rating of only 1.8 from both Republicans and Democrats; fake news sites received a trust rating of only 1.7 from Republicans and 1.9 from Democrats.

By contrast, mainstream media outlets received a trust rating of 2.9 from Democrats but only 2.3 from Republicans; Fox News, however, received a trust rating of 3.2 from Republicans, compared to 2.4 from Democrats.
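To make the comparison concrete, the short Python sketch below tabulates the ratings quoted above and computes two simple quantities: the partisan gap (Democratic rating minus Republican rating) and an equally weighted average of the two parties. The category labels and both helper functions are illustrative assumptions, not the researchers' own analysis.

```python
# Mean trust ratings (1-5 scale) quoted in the article for one survey.
# The dictionary keys are illustrative labels, not the study's terminology.
ratings = {
    "mainstream": {"democrats": 2.9, "republicans": 2.3},
    "fox_news": {"democrats": 2.4, "republicans": 3.2},
    "hyperpartisan": {"democrats": 1.8, "republicans": 1.8},
    "fake_news": {"democrats": 1.9, "republicans": 1.7},
}


def partisan_gap(r):
    """Democratic rating minus Republican rating, rounded to one decimal."""
    return round(r["democrats"] - r["republicans"], 1)


def balanced_mean(r):
    """Average of the two party ratings, each party weighted equally (an assumption)."""
    return round((r["democrats"] + r["republicans"]) / 2, 2)


gaps = {name: partisan_gap(r) for name, r in ratings.items()}
balanced = {name: balanced_mean(r) for name, r in ratings.items()}

for name in ratings:
    print(f"{name:14s} gap={gaps[name]:+.1f} balanced={balanced[name]:.2f}")
```

On these figures, the gap is largest for Fox News and mainstream outlets and close to zero for hyperpartisan and fake news sites, which both parties rate below 2.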

The study adds a twist to a high-profile issue. False news stories have proliferated online in recent years, and social media sites such as Facebook have received sharp criticism for giving them visibility. Facebook also faced pushback for a January 2018 plan to let readers rate the quality of online news sources. But the current study suggests such a crowdsourcing approach could work well, if implemented correctly.

“If the goal is to remove really bad content, this actually seems quite promising,” Rand says.

The paper, “Fighting misinformation on social media using crowdsourced judgments of news source quality,” is being published in Proceedings of the National Academy of Sciences this week. The authors are Gordon Pennycook of the University of Regina, and Rand, an associate professor in the MIT Sloan School of Management.

To promote, or to squelch?

To perform the study, the researchers conducted two online surveys that had roughly 1,000 participants each, one on Amazon’s Mechanical Turk platform, and one via the survey tool Lucid. In each case, respondents were asked to rate their trust in 60 news outlets, about a third of which were high-profile, mainstream sources.

The second survey’s participants had demographic characteristics resembling those of the country as a whole, including partisan affiliation. (The researchers weighted Republicans and Democrats equally in the survey to avoid any perception of bias.) That survey also measured the general audience’s evaluations against a set of judgments by professional fact-checkers, to see whether the larger audience’s judgments were similar to the opinions of experienced researchers.

But while Democrats and Republicans regarded prominent news outlets differently, that party-based mismatch largely vanished when it came to the other kinds of news sites, where, as Rand says, “By and large we did not find that people were really blinded by their partisanship.”

In this vein, Republicans trusted MSNBC more than Breitbart, even though many of them regarded MSNBC as a left-leaning news channel. Meanwhile, Democrats, although they trusted Fox News less than any other mainstream news source, trusted it more than left-leaning hyperpartisan outlets (such as Daily Kos).

Moreover, because the respondents generally distrusted the more marginal websites, there was significant agreement between the general audience and the professional fact-checkers. (As the authors point out, this also challenges claims about fact-checkers having strong political biases themselves.)

That means the crowdsourcing approach could work especially well in marginalizing false news stories — for instance by building audience judgments into an algorithm ranking stories by quality. Crowdsourcing would probably be less effective, however, if a social media site were trying to build a consensus about the very best news sources and stories.
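As a rough illustration of how audience judgments might feed into a ranking algorithm, the Python sketch below averages trust ratings within each party, averages the party means so that both sides count equally, and then scales a story's engagement by its source's trust. Every name here (`balanced_trust`, `rank_stories`, the outlet labels, the engagement numbers, and the default trust of 3.0 for unrated sources) is hypothetical; neither the study nor any platform specifies this formula.

```python
from collections import defaultdict
from statistics import mean


def balanced_trust(responses):
    """Return a per-source trust score from (source, party, rating) tuples.

    Ratings are averaged within each party first, then across parties,
    so a party with more respondents does not dominate the score.
    """
    by_source = defaultdict(lambda: defaultdict(list))
    for source, party, rating in responses:
        by_source[source][party].append(rating)
    return {
        source: mean(mean(r) for r in parties.values())
        for source, parties in by_source.items()
    }


def rank_stories(stories, trust, default=3.0):
    """Sort (title, source, engagement) tuples by trust-weighted engagement."""
    def score(story):
        _, source, engagement = story
        # Map the 1-5 trust scale onto a 0-1 weight (an illustrative choice).
        weight = (trust.get(source, default) - 1) / 4
        return engagement * weight
    return sorted(stories, key=score, reverse=True)


# Hypothetical survey responses and stories.
responses = [
    ("outlet_a", "D", 4), ("outlet_a", "D", 4), ("outlet_a", "R", 2),
    ("outlet_b", "D", 2), ("outlet_b", "R", 1), ("outlet_b", "R", 1),
]
stories = [("Story X", "outlet_b", 100), ("Story Y", "outlet_a", 40)]

trust = balanced_trust(responses)
ranked = rank_stories(stories, trust)
print(trust)
print([title for title, _, _ in ranked])
```

In this toy example the story from the low-trust outlet drops below the other one despite having more engagement, which is the kind of down-ranking the passage describes.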

Where Facebook failed: Familiarity?

If the new study by Rand and Pennycook rehabilitates the idea of crowdsourcing news source judgments, their approach differs from Facebook’s stated 2018 plan in one crucial respect. Facebook was only going to let readers who were familiar with a given news source give trust ratings.

But Rand and Pennycook conclude that this method would indeed build bias into the system, because people are more skeptical of news sources they have less familiarity with — and there is likely good reason why most people are not acquainted with many sites that run fake or hyperpartisan news.

“The people who are familiar with fake news outlets are, by and large, the people who like fake news,” Rand says. “Those are not the people that you want to be asking whether they trust it.”

Thus for crowdsourced judgments to be a part of an online ranking algorithm, there might have to be a mechanism for using the judgments of audience members who are unfamiliar with a given source. Or, better yet, Pennycook and Rand suggest, a site could show users sample content from each news outlet before having the users produce trust ratings.

For his part, Rand acknowledges one limit to the overall generalizability of the study: The dynamics could be different in countries that have more limited traditions of freedom of the press.

“Our results pertain to the U.S., and we don’t have any sense of how this will generalize to other countries, where the fake news problem is more serious than it is here,” Rand says.

All told, Rand says, he also hopes the study will help people look at America’s fake news problem with something less than total despair.

“When people talk about fake news and misinformation, they almost always have very grim conversations about how everything is terrible,” Rand says. “But a lot of the work Gord [Pennycook] and I have been doing has turned out to produce a much more optimistic take on things.”

Support for the study came from the Ethics and Governance of Artificial Intelligence Initiative of the Miami Foundation, the Social Sciences and Humanities Research Council of Canada, and the Templeton World Charity Foundation.