The world is facing a maternal health crisis. According to the World Health Organization, approximately 810 women die each day due to preventable causes related to pregnancy and childbirth. Two-thirds of these deaths occur in sub-Saharan Africa. In Rwanda, one of the leading causes of maternal mortality is infected Cesarean section wounds.

An interdisciplinary team of doctors and researchers from MIT, Harvard University, and Partners in Health (PIH) in Rwanda has proposed a solution to address this problem. The team has developed a mobile health (mHealth) platform that uses artificial intelligence and real-time computer vision to predict infection in C-section wounds with roughly 90 percent accuracy.

“Early detection of infection is an important issue worldwide, but in low-resource areas such as rural Rwanda, the problem is even more dire due to a lack of trained doctors and the high prevalence of bacterial infections that are resistant to antibiotics,” says Richard Ribon Fletcher '89, SM '97, PhD '02, research scientist in mechanical engineering at MIT and technology lead for the team. “Our idea was to employ mobile phones that could be used by community health workers to visit new mothers in their homes and inspect their wounds to detect infection.”

This summer, the team, which is led by Bethany Hedt-Gauthier, a professor at Harvard Medical School, was awarded the $500,000 first-place prize in the NIH Technology Accelerator Challenge for Maternal Health.

“The lives of women who deliver by Cesarean section in the developing world are compromised by both limited access to quality surgery and postpartum care,” adds Fredrick Kateera, a team member from PIH. “Use of mobile health technologies for early identification, plausible accurate diagnosis of those with surgical site infections within these communities would be a scalable game changer in optimizing women's health.”

Training algorithms to detect infection

The project’s inception was the result of several chance encounters. In 2017, Fletcher and Hedt-Gauthier bumped into each other on the Washington Metro during an NIH investigator meeting. Hedt-Gauthier, who had been working on research projects in Rwanda for five years at that point, was seeking a solution for the gap in Cesarean care she and her collaborators had encountered in their research. Specifically, she was interested in exploring the use of cell phone cameras as a diagnostic tool.

Fletcher, who leads a group of students in Professor Sanjay Sarma’s AutoID Lab and has spent decades applying phones, machine learning algorithms, and other mobile technologies to global health, was a natural fit for the project.

“Once we realized that these types of image-based algorithms could support home-based care for women after Cesarean delivery, we approached Dr. Fletcher as a collaborator, given his extensive experience in developing mHealth technologies in low- and middle-income settings,” says Hedt-Gauthier.

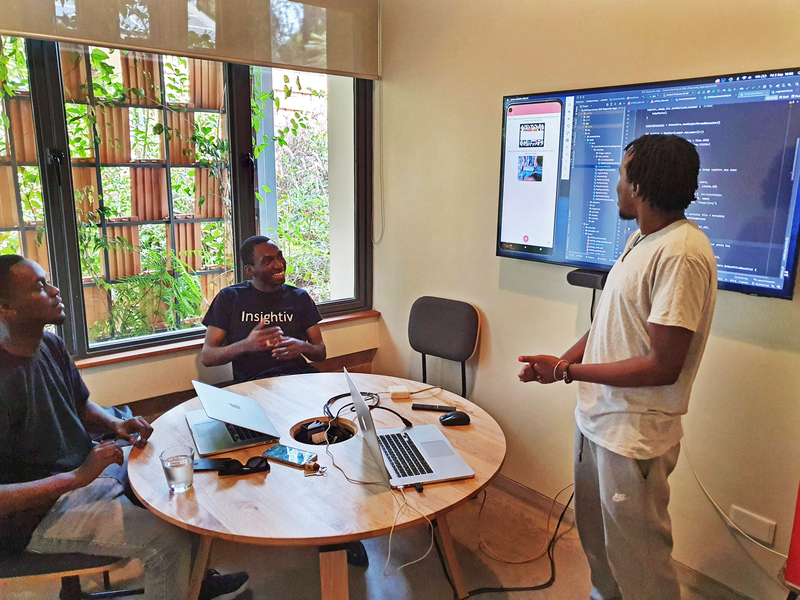

During that same trip, Hedt-Gauthier serendipitously sat next to Audace Nakeshimana ’20, who was a new MIT student from Rwanda and would later join Fletcher’s team at MIT. With Fletcher’s mentorship, during his senior year, Nakeshimana founded Insightiv, a Rwandan startup that is applying AI algorithms for analysis of clinical images, and was a top grant awardee at the annual MIT IDEAS competition in 2020.

The first step in the project was gathering a database of wound images taken by community health workers in rural Rwanda. They collected over 1,000 images of both infected and non-infected wounds and then trained an algorithm using that data.

A central problem emerged with this first dataset, collected between 2018 and 2019: many of the photographs were of poor quality.

“The quality of wound images collected by the health workers was highly variable and it required a large amount of manual labor to crop and resample the images. Since these images are used to train the machine learning model, the image quality and variability fundamentally limits the performance of the algorithm,” says Fletcher.

To solve this issue, Fletcher turned to tools he used in previous projects: real-time computer vision and augmented reality.

Improving image quality with real-time image processing

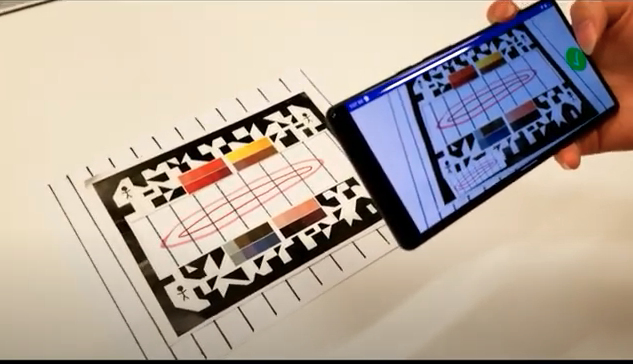

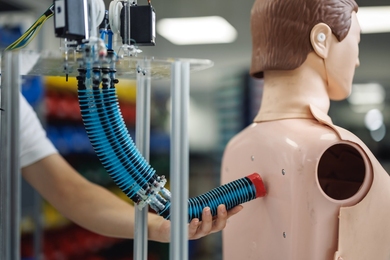

To encourage community health workers to take higher-quality images, Fletcher and the team revised the wound screener mobile app and paired it with a simple paper frame. The frame contained a printed calibration color pattern and another optical pattern that guides the app’s computer vision software.

Health workers are instructed to place the frame over the wound and open the app, which provides real-time feedback on the camera placement. The app uses augmented reality to display a green check mark when the phone is in the proper range. Once the phone is in range, other parts of the computer vision software automatically balance the color, crop the image, and apply transformations to correct for parallax.

“By using real-time computer vision at the time of data collection, we are able to generate beautiful, clean, uniform color-balanced images that can then be used to train our machine learning models, without any need for manual data cleaning or post-processing,” says Fletcher.
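
As a rough illustration of two of the steps described above, the Python sketch below checks whether the paper frame fills a suitable share of the camera image and then color-balances pixels against the frame's printed reference patch. It is a minimal sketch under stated assumptions, not the team's software: the function names, the 0.6 to 0.9 range window, and the use of a single white reference patch are all hypothetical, and cropping and parallax correction are left out.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255


def in_range(frame_width_px: float, image_width_px: float,
             lo: float = 0.6, hi: float = 0.9) -> bool:
    """Return True when the frame spans a suitable fraction of the image width.

    The 0.6-0.9 window is an illustrative assumption, not the app's setting.
    """
    fraction = frame_width_px / image_width_px
    return lo <= fraction <= hi


def channel_gains(measured_patch: Pixel, target: int = 255) -> Tuple[float, ...]:
    """Per-channel gains that map the photographed white patch to `target`."""
    return tuple(target / max(c, 1) for c in measured_patch)


def balance(pixels: List[Pixel], gains: Tuple[float, ...]) -> List[Pixel]:
    """Apply the gains to every pixel, clipping each channel at 255."""
    return [tuple(min(255, round(c * g)) for c, g in zip(p, gains))
            for p in pixels]
```

For example, if the white patch is photographed as `(200, 250, 225)` under tinted light, `balance([(80, 100, 90)], channel_gains((200, 250, 225)))` returns `[(102, 102, 102)]`: a pixel that carried the same color cast comes out neutral gray.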

Using convolutional neural net (CNN) machine learning models, along with a method called transfer learning, the software has been able to successfully predict infection in C-section wounds with roughly 90 percent accuracy within 10 days of childbirth. Women who are predicted to have an infection through the app are then given a referral to a clinic where they can receive diagnostic bacterial testing and can be prescribed life-saving antibiotics as needed.
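
To make a figure like "roughly 90 percent accuracy" concrete, the sketch below shows how accuracy, sensitivity, and specificity are computed for a screening tool from pairs of predicted and confirmed outcomes. The function name and the counts in the usage note are hypothetical and are not the study's data.

```python
from typing import Dict, Iterable, Tuple


def screening_metrics(results: Iterable[Tuple[bool, bool]]) -> Dict[str, float]:
    """Summarize (predicted_infected, actually_infected) pairs.

    Assumes the data contains at least one infected and one
    non-infected case, so no denominator is zero.
    """
    tp = fp = tn = fn = 0
    for predicted, actual in results:
        if predicted and actual:
            tp += 1   # infection correctly flagged
        elif predicted and not actual:
            fp += 1   # healthy wound referred unnecessarily
        elif not predicted and actual:
            fn += 1   # infection missed
        else:
            tn += 1   # healthy wound correctly cleared
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "sensitivity": tp / (tp + fn),   # share of infections caught
        "specificity": tn / (tn + fp),   # share of healthy wounds cleared
    }
```

With hypothetical counts of 45 infections flagged, 5 missed, 135 healthy wounds cleared, and 15 referred unnecessarily, all three metrics come out at 0.9. Accuracy alone can hide missed infections when most wounds are healthy, which is why sensitivity is usually reported alongside it for a referral tool.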

The app has been well received by women and community health workers in Rwanda.

“The trust that women have in community health workers, who were a big promoter of the app, meant the mHealth tool was accepted by women in rural areas,” adds Anne Niyigena of PIH.

Using thermal imaging to address algorithmic bias

One of the biggest hurdles to scaling this AI-based technology to a more global audience is algorithmic bias. When trained on a relatively homogenous population, such as that of rural Rwanda, the algorithm performs as expected and can successfully predict infection. But when images of patients of varying skin colors are introduced, the algorithm is less effective.

To tackle this issue, Fletcher used thermal imaging. Simple thermal camera modules, designed to attach to a cell phone, cost approximately $200 and can be used to capture infrared images of wounds. Algorithms can then be trained using the heat patterns of infrared wound images to predict infection. A study published last year showed a prediction accuracy of over 90 percent when these thermal images were paired with the app’s CNN algorithm.

While more expensive than simply using the phone’s camera, the thermal image approach could be used to scale the team’s mHealth technology to a more diverse, global population.

“We’re giving the health staff two options: in a homogenous population, like rural Rwanda, they can use their standard phone camera, using the model that has been trained with data from the local population. Otherwise, they can use the more general model which requires the thermal camera attachment,” says Fletcher.
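
The two options Fletcher describes amount to a simple decision rule, sketched below. The model and camera labels, the function name, and the error raised when neither option applies are hypothetical and are not taken from the team's app.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelChoice:
    model: str    # which trained model to run
    camera: str   # which camera supplies the image


def choose_model(population_matches_training: bool,
                 has_thermal_camera: bool) -> ModelChoice:
    """Pick the locally trained model when it fits, else the thermal one."""
    if population_matches_training:
        # Standard phone camera with the model trained on local data.
        return ModelChoice(model="local_rgb", camera="phone")
    if has_thermal_camera:
        # More general model, which needs the thermal attachment.
        return ModelChoice(model="general_thermal", camera="thermal_attachment")
    raise ValueError("no suitable model: thermal camera attachment required")
```

Raising an error in the last case is one possible design choice: it keeps the locally trained model from being applied silently to a population it was not trained on.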

While the current generation of the mobile app uses a cloud-based algorithm to run the infection prediction model, the team is now working on a stand-alone mobile app that does not require internet access, and also looks at all aspects of maternal health, from pregnancy to postpartum.

In addition to developing the library of wound images used in the algorithms, Fletcher is working closely with former student Nakeshimana and his team at Insightiv on the app’s development, using Android phones that are manufactured locally in Rwanda. PIH will then conduct user testing and field-based validation in Rwanda.

As the team looks to develop the comprehensive app for maternal health, privacy and data protection are a top priority.

“As we develop and refine these tools, a closer attention must be paid to patients’ data privacy. More data security details should be incorporated so that the tool addresses the gaps it is intended to bridge and maximizes user's trust, which will eventually favor its adoption at a larger scale,” says Niyigena.

Members of the prize-winning team include: Bethany Hedt-Gauthier from Harvard Medical School; Richard Fletcher from MIT; Robert Riviello from Brigham and Women’s Hospital; Adeline Boatin from Massachusetts General Hospital; Anne Niyigena, Fredrick Kateera, Laban Bikorimana, and Vincent Cubaka from PIH in Rwanda; and Audace Nakeshimana ’20, founder of Insightiv.ai.