In recent years, quantum computers have lost some of their luster. In the early 1990s, it seemed that they might be able to solve a class of difficult but common problems — the so-called NP-complete problems — exponentially faster than classical computers. Now, it seems that they probably can't. In fact, until this week, the only common calculation where quantum computation promised exponential gains was the factoring of large numbers, which isn't that useful outside cryptography. In a paper appearing today in Physical Review Letters, however, MIT researchers present a new algorithm that could bring the same type of efficiency to systems of linear equations — whose solution is crucial to image processing, video processing, signal processing, robot control, weather modeling, genetic analysis and population analysis, to name just a few applications.

Quantum computers are computers that exploit the weird properties of matter at extremely small scales. Where a bit in a classical computer can represent either a "1" or a "0," a quantum bit, or qubit, can represent "1" and "0" at the same time. Two qubits can represent four values simultaneously, three qubits eight, and so on. Under the right circumstances, computations performed with qubits are thus the equivalent of multiple classical computations performed in parallel. But those circumstances are much rarer than was first anticipated.

Quantum computers with maybe 12 or 16 qubits have been built in the lab, but quantum computation is such a young field, and its physics is so counterintuitive, that researchers are still developing the theoretical tools for thinking about it.

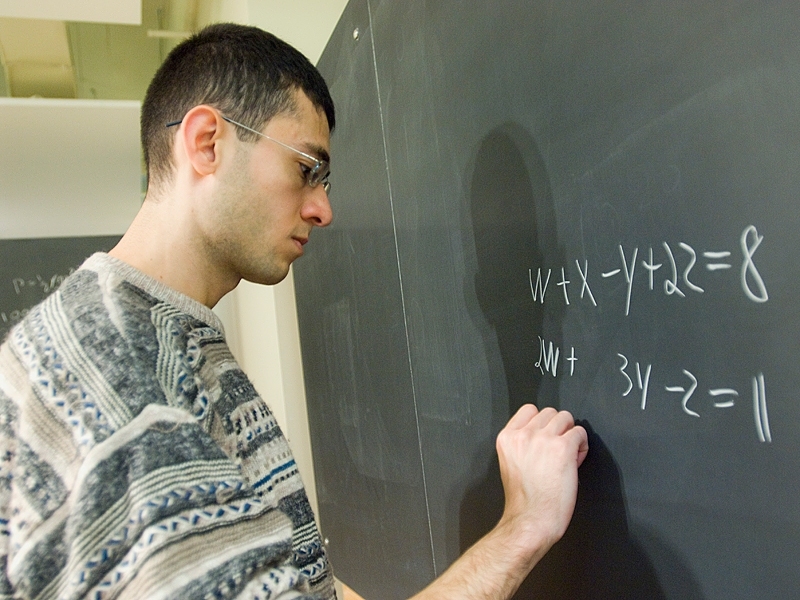

Systems of linear equations, by contrast, are familiar to almost everyone. We all had to solve them in algebra class: given three distinct equations featuring the same three variables, find values for the variables that make all three equations true.
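For readers who want to see the algebra-class version concretely, here is a minimal classical sketch in Python. It is not the researchers' quantum algorithm; it is ordinary Gauss-Jordan elimination, and the three equations and the function name `solve_linear_system` are made up for illustration.

```python
from fractions import Fraction


def solve_linear_system(coefficients, constants):
    """Solve A x = b by Gauss-Jordan elimination with exact rational arithmetic."""
    n = len(coefficients)
    # Build the augmented matrix [A | b] using exact fractions.
    rows = [[Fraction(v) for v in row] + [Fraction(c)]
            for row, c in zip(coefficients, constants)]
    for col in range(n):
        # Find a row at or below the diagonal with a nonzero entry in this column.
        pivot = next((r for r in range(col, n) if rows[r][col] != 0), None)
        if pivot is None:
            raise ValueError("system has no unique solution")
        rows[col], rows[pivot] = rows[pivot], rows[col]
        # Scale the pivot row so the pivot entry becomes 1.
        pivot_value = rows[col][col]
        rows[col] = [v / pivot_value for v in rows[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col and rows[r][col] != 0:
                factor = rows[r][col]
                rows[r] = [a - factor * b for a, b in zip(rows[r], rows[col])]
    # After elimination the last column holds the solution.
    return [row[-1] for row in rows]


# Illustrative system (hypothetical, not from the paper):
#    x +  y +  z = 6
#   2x -  y +  z = 3
#    x + 2y -  z = 2
A = [[1, 1, 1], [2, -1, 1], [1, 2, -1]]
b = [6, 3, 2]
solution = solve_linear_system(A, b)
print(solution)
```

Substituting the result back into each equation is a quick way to confirm that all three hold at once.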

Computer models of weather systems or of complex chemical reactions, however, might have to solve millions of equations with millions of variables. Under the right circumstances, a classical computer can solve such equations relatively efficiently: the solution time is proportional to the number of variables. But under the same circumstances, the time required by the new quantum algorithm would be proportional to the logarithm of the number of variables.

That means that for a calculation involving a trillion variables, "a supercomputer's going to take trillions of steps, and this algorithm will take a few hundred," says mechanical engineering professor Seth Lloyd, who along with Avinatan Hassidim, a postdoc in the Research Lab of Electronics, and the University of Bristol's Aram Harrow '01, PhD '05, came up with the new algorithm.
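The gap between the two growth rates can be put in rough numbers. The sketch below assumes a constant factor of 1 for both methods and a base-2 logarithm, neither of which the article specifies, so it shows only the shape of the comparison, not actual running times.

```python
import math


def classical_steps(num_variables):
    # Assumption: cost proportional to the number of variables, constant factor 1.
    return num_variables


def quantum_steps(num_variables):
    # Assumption: cost proportional to log2 of the number of variables,
    # constant factor 1.
    return math.log2(num_variables)


n = 10**12  # a trillion variables
print(f"classical: about {classical_steps(n):,} steps")
print(f"quantum:   about {quantum_steps(n):.1f} steps, before constant factors")
```

Under these assumptions the logarithm of a trillion comes out just under 40. Lloyd's figure of "a few hundred" presumably reflects additional factors that this simplified comparison leaves out.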

Because the result of the calculation would be stored on qubits, however, "you're not going to have the full functionality of an algorithm that just solves everything and writes it all out," Lloyd says. To see why, consider the way in which each added qubit doubles the capacity of quantum memory. Eight qubits can represent 256 values simultaneously, nine qubits 512 values, and so on. This doubling rapidly yields astronomical numbers. The trillion solutions to a trillion-variable problem would be stored on only about 40 qubits. But extracting all trillion solutions from the qubits would take a trillion steps, eating up all the time that the quantum algorithm saved.
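The doubling arithmetic in that paragraph is easy to check. The short Python sketch below uses two illustrative helper functions (the names are not from the paper): one counts the values that a given number of qubits can represent, and the other finds the smallest number of qubits needed to cover a given count of values.

```python
def values_represented(num_qubits):
    # Each added qubit doubles the count: 2 ** num_qubits.
    return 2 ** num_qubits


def qubits_needed(num_values):
    # Smallest k such that 2 ** k >= num_values (requires num_values >= 1).
    return (num_values - 1).bit_length()


print(values_represented(8))    # eight qubits
print(values_represented(9))    # nine qubits
print(qubits_needed(10**12))    # a trillion values
```

Thirty-nine qubits fall short of a trillion values and forty cover it, which matches the article's "about 40."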

With qubits, however, "you can make any measurement you like," Lloyd says. "You can figure out, for instance, their average value. You can say, okay, what fraction of them is bigger than 433?" Such measurements take little time but may still provide useful information. They could, Lloyd says, answer questions like, "In this very complicated ecosystem with, like, 10 to the 12th different species, one of which is humans, in the steady state for this particular model, do humans exist? That's the kind of question where a classical algorithm can't even provide anything."

Greg Kuperberg, a mathematician at the University of California, Davis, who works on quantum algebra, says that the MIT algorithm "could be important," but that he's "not sure yet how important it will be or when." Kuperberg cautions that in applications that process empirical data, loading the data into quantum memory could be just as time-consuming as extracting it would be. "If you have to spend a year loading in the data," he says, "it doesn't matter that you can then do this linear-algebra step in 10 seconds."

But Hassidim argues that there could be applications that allow time for data gathering but still require rapid calculation. For instance, to yield accurate results, a weather prediction model might require data from millions of sensors transmitted continuously over high-speed optical fibers for hours. Such quantities of data would have to be loaded into quantum memory, since they would overwhelm all the conventional storage in the world. Once all the data are in, however, the resulting forecast needs to be calculated immediately to be of any use.

Still, Hassidim concedes that no one has yet come up with a "killer app" for the algorithm. But he adds that "this is a tool, which hopefully other people are going to use. Other people are going to have to continue this work and understand how to use this in different problems. You do have to think some more."

Indeed, researchers at the University of London have already expanded on the MIT researchers' approach to develop a new quantum algorithm for solving differential equations. Early in their paper, they describe the MIT algorithm, then say, "This promises to allow the solution of, e.g., vast engineering problems. This result is inspirational in many ways and suggests that quantum computers may be good at solving more than linear equations."
