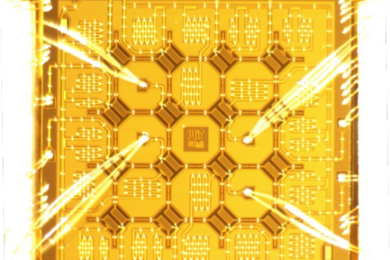

MIT partners with national labs on two new National Quantum Information Science Research Centers

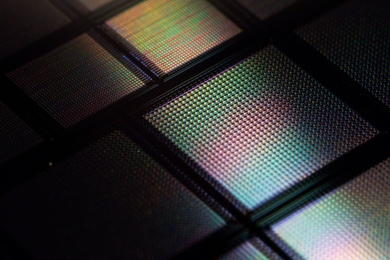

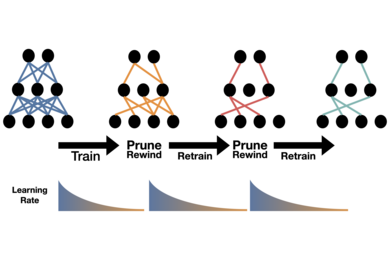

Co-design Center for Quantum Advantage and Quantum Systems Accelerator are funded by the U.S. Department of Energy to accelerate the development of quantum computers.