When manipulating an arcade claw, a player can plan all she wants. But once she presses the joystick button, it’s a game of wait-and-see. If the claw misses its target, she’ll have to start from scratch for another chance at a prize.

The slow and deliberate approach of the arcade claw is similar to that of state-of-the-art pick-and-place robots, which use high-level planners to process visual images and plan out a series of moves to grab an object. If a gripper misses its mark, it’s back to the starting point, where the controller must map out a new plan.

Looking to give robots a more nimble, human-like touch, MIT engineers have now developed a gripper that grasps by reflex. Rather than start from scratch after a failed attempt, the team’s robot adapts in the moment to reflexively roll, palm, or pinch an object to get a better hold. It’s able to carry out these “last centimeter” adjustments (a riff on the “last mile” delivery problem) without engaging a higher-level planner, much like how a person might fumble in the dark for a bedside glass without much conscious thought.

The new design is the first to incorporate reflexes into a robotic planning architecture. For now, the system is a proof of concept and provides a general organizational structure for embedding reflexes into a robotic system. Going forward, the researchers plan to program more complex reflexes to enable nimble, adaptable machines that can work with and among humans in ever-changing settings.

“In environments where people live and work, there’s always going to be uncertainty,” says Andrew SaLoutos, a graduate student in MIT’s Department of Mechanical Engineering. “Someone could put something new on a desk or move something in the break room or add an extra dish to the sink. We’re hoping a robot with reflexes could adapt and work with this kind of uncertainty.”

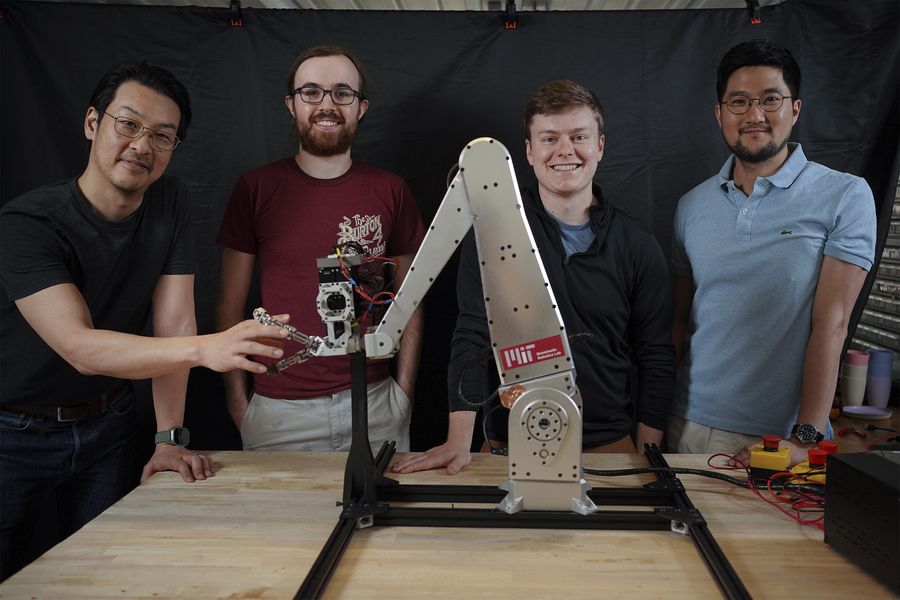

SaLoutos and his colleagues will present a paper on their design in May at the IEEE International Conference on Robotics and Automation (ICRA). His MIT co-authors include postdoc Hongmin Kim, graduate student Elijah Stanger-Jones, Menglong Guo SM ’22, and professor of mechanical engineering Sangbae Kim, the director of the Biomimetic Robotics Laboratory at MIT.

High and low

Many modern robotic grippers are designed for relatively slow and precise tasks, such as repetitively fitting together the same parts on a factory assembly line. These systems depend on visual data from onboard cameras; processing that data limits a robot’s reaction time, particularly if it needs to recover from a failed grasp.

“There’s no way to short-circuit out and say, oh shoot, I have to do something now and react quickly,” SaLoutos says. “Their only recourse is just to start again. And that takes a lot of time computationally.”

In their new work, Kim’s team built a more reflexive and reactive platform, using fast, responsive actuators that they originally developed for the group’s mini cheetah — a nimble, four-legged robot designed to run, leap, and quickly adapt its gait to various types of terrain.

The team’s design includes a high-speed arm and two lightweight, multijointed fingers. In addition to a camera mounted to the base of the arm, the team incorporated custom high-bandwidth sensors at the fingertips. More than 200 times per second, these sensors record the force and location of any contact, as well as the proximity of the finger to surrounding objects.

The researchers designed the robotic system such that a high-level planner initially processes visual data of a scene, marking the object’s current location, where the gripper should pick it up, and the location where the robot should set it down. Then, the planner sets a path for the arm to reach out and grasp the object. At this point, the reflexive controller takes over.

The team wrote an algorithm for the case where the gripper fails to grab hold of the object. Rather than back out and start again, as most grippers do, the robot quickly acts out any of three grasp maneuvers, which the researchers call “reflexes,” in response to real-time measurements at the fingertips. The three reflexes kick in within the last centimeter of the robot’s approach to an object and enable the fingers to grab, pinch, or drag the object until the gripper has a better hold.

They programmed the reflexes to be carried out without having to involve the high-level planner. Instead, the reflexes are organized at a lower decision-making level, so that they can respond as if by instinct, rather than having to carefully evaluate the situation to plan an optimal fix.
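To make the two-level idea concrete, here is a minimal Python sketch of a low-level reflex loop that picks a maneuver from each fingertip reading without calling back to a planner. It is an illustration only, not the team’s algorithm: the names `FingertipReading`, `choose_reflex`, and `run_reflexes`, the thresholds, and the rules mapping a reading to a reflex are all assumptions made up for this example.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Iterable, List, Optional, Tuple


class Reflex(Enum):
    """The three maneuvers named in the article; the triggers below are assumed."""
    GRAB = auto()
    PINCH = auto()
    DRAG = auto()


@dataclass
class FingertipReading:
    """One hypothetical sensor sample (the real sensors report more than this)."""
    contact_force: float  # newtons; 0.0 means no contact
    proximity: float      # meters to the nearest surface


def choose_reflex(reading: FingertipReading,
                  hold_force: float = 0.5,
                  near: float = 0.01) -> Optional[Reflex]:
    """Map one reading to a reflex, or None once the hold looks secure.

    The thresholds and rules are invented for illustration.
    """
    if reading.contact_force >= hold_force:
        return None            # firm contact: treat the grasp as secure
    if reading.contact_force > 0.0:
        return Reflex.PINCH    # light contact: tighten the hold
    if reading.proximity <= near:
        return Reflex.GRAB     # object within reach but not touched: close on it
    return Reflex.DRAG         # nothing nearby: sweep the fingers to find it


def run_reflexes(readings: Iterable[FingertipReading]) -> Tuple[bool, List[Reflex]]:
    """Consume sensor samples until the grasp is secure.

    Returns (secured, reflexes_used). The high-level planner is never
    consulted inside this loop; a False result is where a real system
    would hand control back to it.
    """
    used: List[Reflex] = []
    for reading in readings:
        reflex = choose_reflex(reading)
        if reflex is None:
            return True, used
        used.append(reflex)
    return False, used


if __name__ == "__main__":
    samples = [
        FingertipReading(contact_force=0.0, proximity=0.05),
        FingertipReading(contact_force=0.0, proximity=0.005),
        FingertipReading(contact_force=0.2, proximity=0.0),
        FingertipReading(contact_force=1.0, proximity=0.0),
    ]
    secured, used = run_reflexes(samples)
    print(secured, [r.name for r in used])
```

The point of the design is that each decision is a cheap lookup on the latest sample, so it can keep up with a fast sensor stream, whereas replanning from camera images would not.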

“It’s like how, instead of having the CEO micromanage and plan every single thing in your company, you build a trust system and delegate some tasks to lower-level divisions,” Kim says. “It may not be optimal, but it helps the company react much more quickly. In many cases, waiting for the optimal solution makes the situation much worse or irrecoverable.”

Cleaning via reflex

The team demonstrated the gripper’s reflexes by clearing a cluttered shelf. They set a variety of household objects on a shelf, including a bowl, a cup, a can, an apple, and a bag of coffee grounds. They showed that the robot was able to quickly adapt its grasp to each object’s particular shape and, in the case of the coffee grounds, squishiness. Out of 117 attempts, the gripper quickly and successfully picked and placed objects more than 90 percent of the time, without having to back out and start over after a failed grasp.

A second experiment showed how the robot could also react in the moment. When researchers shifted a cup’s position, the gripper, despite having no visual update of the new location, was able to readjust and essentially feel around until it sensed the cup in its grasp. Compared to a baseline grasping controller, the gripper’s reflexes increased the area of successful grasps by over 55 percent.

Now, the engineers are working to include more complex reflexes and grasp maneuvers in the system, with a view toward building a general pick-and-place robot capable of adapting to cluttered and constantly changing spaces.

“Picking up a cup from a clean table — that specific problem in robotics was solved 30 years ago,” Kim notes. “But a more general approach, like picking up toys in a toybox, or even a book from a library shelf, has not been solved. Now with reflexes, we think we can one day pick and place in every possible way, so that a robot could potentially clean up the house.”

This research was supported, in part, by the Advanced Robotics Lab of LG Electronics and the Toyota Research Institute.