No one likes sitting at a red light. But signalized intersections aren’t just a minor nuisance for drivers; vehicles consume fuel and emit greenhouse gases while waiting for the light to change.

What if motorists could time their trips so they arrive at the intersection when the light is green? While that might be just a lucky break for a human driver, it could be achieved more consistently by an autonomous vehicle that uses artificial intelligence to control its speed.

In a new study, MIT researchers demonstrate a machine-learning approach that can learn to control a fleet of autonomous vehicles as they approach and travel through a signalized intersection in a way that keeps traffic flowing smoothly.

Using simulations, they found that their approach reduces fuel consumption and emissions while improving average vehicle speed. The technique gets the best results if all cars on the road are autonomous, but even if only 25 percent use their control algorithm, it still leads to substantial fuel and emissions benefits.

“This is a really interesting place to intervene. No one’s life is better because they were stuck at an intersection. With a lot of other climate change interventions, there is a quality-of-life difference that is expected, so there is a barrier to entry there. Here, the barrier is much lower,” says senior author Cathy Wu, the Gilbert W. Winslow Career Development Assistant Professor in the Department of Civil and Environmental Engineering and a member of the Institute for Data, Systems, and Society (IDSS) and the Laboratory for Information and Decision Systems (LIDS).

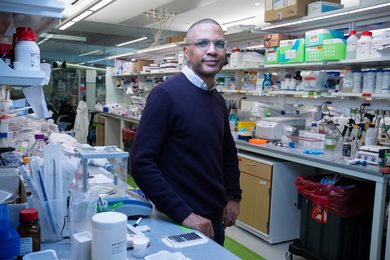

The lead author of the study is Vindula Jayawardana, a graduate student in LIDS and the Department of Electrical Engineering and Computer Science. The research will be presented at the European Control Conference.

Intersection intricacies

While humans may drive past a green light without giving it much thought, intersections can present billions of different scenarios depending on the number of lanes, how the signals operate, the number of vehicles and their speeds, the presence of pedestrians and cyclists, etc.

Typical approaches for tackling intersection control problems use mathematical models to solve one simple, ideal intersection. That looks good on paper, but likely won’t hold up in the real world, where traffic patterns are often about as messy as they come.

Wu and Jayawardana shifted gears and approached the problem using a model-free technique known as deep reinforcement learning. Reinforcement learning is a trial-and-error method where the control algorithm learns to make a sequence of decisions. It is rewarded when it finds a good sequence. With deep reinforcement learning, the algorithm leverages assumptions learned by a neural network to find shortcuts to good sequences, even if there are billions of possibilities.

This is useful for solving a long-horizon problem like this; the control algorithm must issue upwards of 500 acceleration instructions to a vehicle over an extended time period, Wu explains.

“And we have to get the sequence right before we know that we have done a good job of mitigating emissions and getting to the intersection at a good speed,” she adds.

But there’s an additional wrinkle. The researchers want the system to learn a strategy that reduces fuel consumption and limits the impact on travel time. These goals can be conflicting.

“To reduce travel time, we want the car to go fast, but to reduce emissions, we want the car to slow down or not move at all. Those competing rewards can be very confusing to the learning agent,” Wu says.

While it is challenging to solve this problem in its full generality, the researchers employed a workaround using a technique known as reward shaping. With reward shaping, they give the system some domain knowledge it is unable to learn on its own. In this case, they penalized the system whenever the vehicle came to a complete stop, so it would learn to avoid that action.
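To make the idea of reward shaping concrete, here is a minimal sketch of a per-step reward with an added penalty for stopping. It is an illustration only, not the researchers' actual reward function: the function name, the weights, the stop threshold, and the penalty size are all hypothetical values chosen for the example.

```python
def shaped_reward(speed_mps, fuel_rate, stop_penalty=5.0,
                  speed_weight=1.0, fuel_weight=1.0, stop_threshold=0.1):
    """Hypothetical per-step reward for one vehicle.

    The base reward favors higher speed (shorter travel time) and lower
    fuel use. The shaping term subtracts an extra penalty whenever the
    vehicle is effectively stopped. All weights are assumed values.
    """
    # Base trade-off between the two competing goals.
    reward = speed_weight * speed_mps - fuel_weight * fuel_rate

    # Shaping term: discourage coming to a complete stop.
    if speed_mps < stop_threshold:
        reward -= stop_penalty

    return reward


# A stopped, idling vehicle scores far worse than a moving one.
print(shaped_reward(speed_mps=0.0, fuel_rate=0.5))
print(shaped_reward(speed_mps=10.0, fuel_rate=2.0))
```

Without the shaping term, a stopped vehicle that burns little fuel could look acceptable to the learning agent; the explicit penalty makes that outcome clearly unattractive.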

Traffic tests

Once they developed an effective control algorithm, they evaluated it using a traffic simulation platform with a single intersection. The control algorithm is applied to a fleet of connected autonomous vehicles, which can communicate with upcoming traffic lights to receive signal phase and timing information and observe their immediate surroundings. The control algorithm tells each vehicle how to accelerate and decelerate.
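The inputs and outputs described above can be sketched as a small data structure plus a control function. The example below is a hand-written stand-in, not the learned policy from the study: it uses a simple rule that aims to reach the stop line just as the light turns green. The field names, speed limit, and acceleration bounds are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """What a connected vehicle might know (hypothetical fields)."""
    distance_to_stop_line_m: float
    speed_mps: float
    signal_is_green: bool
    seconds_until_green: float  # 0.0 if the light is already green


def glide_acceleration(obs, speed_limit_mps=15.0, max_accel=2.0,
                       max_decel=3.0, dt=1.0):
    """Return an acceleration command in m/s^2 using a simple glide rule.

    If the light is red, target the speed that reaches the stop line
    when the light turns green; otherwise target the speed limit.
    """
    if obs.signal_is_green or obs.seconds_until_green <= 0:
        target_speed = speed_limit_mps
    else:
        target_speed = min(
            speed_limit_mps,
            obs.distance_to_stop_line_m / obs.seconds_until_green,
        )

    # Acceleration needed to hit the target speed in one time step,
    # clipped to the assumed comfort limits.
    desired = (target_speed - obs.speed_mps) / dt
    return max(-max_decel, min(max_accel, desired))


# Red light, 100 m away, green in 10 s: ease off instead of stopping.
red = Observation(100.0, 12.0, False, 10.0)
print(glide_acceleration(red))
```

A learned controller would replace the fixed rule inside `glide_acceleration` with a neural network, but the interface of observations in and an acceleration command out stays the same.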

Their system didn’t create any stop-and-go traffic as vehicles approached the intersection. (Stop-and-go traffic occurs when cars are forced to come to a complete stop due to stopped traffic ahead.) In simulations, more cars made it through in a single green phase than with a model that simulates human drivers. When compared to other optimization methods also designed to avoid stop-and-go traffic, their technique resulted in larger fuel consumption and emissions reductions. If every vehicle on the road is autonomous, their control system can reduce fuel consumption by 18 percent and carbon dioxide emissions by 25 percent, while boosting travel speeds by 20 percent.

“A single intervention having 20 to 25 percent reduction in fuel or emissions is really incredible. But what I find interesting, and was really hoping to see, is this non-linear scaling. If we only control 25 percent of vehicles, that gives us 50 percent of the benefits in terms of fuel and emissions reduction. That means we don’t have to wait until we get to 100 percent autonomous vehicles to get benefits from this approach,” she says.
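As a rough back-of-the-envelope reading of these figures, the snippet below combines the full-adoption reductions reported above (18 percent for fuel, 25 percent for carbon dioxide) with Wu's statement that controlling 25 percent of vehicles yields about 50 percent of the benefits. The resulting numbers are an approximation derived from those two statements, not results reported by the study, and the variable names are illustrative.

```python
# Reductions reported for 100 percent autonomous vehicles (percent).
FULL_ADOPTION_REDUCTION = {"fuel": 18.0, "co2": 25.0}

# Share of the full benefit at 25 percent adoption, per Wu's quote.
BENEFIT_SHARE_AT_25_PERCENT = 0.5

# Approximate reduction at 25 percent adoption.
partial = {
    name: value * BENEFIT_SHARE_AT_25_PERCENT
    for name, value in FULL_ADOPTION_REDUCTION.items()
}

# What strictly linear scaling would give at 25 percent adoption.
linear = {
    name: value * 0.25
    for name, value in FULL_ADOPTION_REDUCTION.items()
}

print(partial)  # {'fuel': 9.0, 'co2': 12.5}
print(linear)   # {'fuel': 4.5, 'co2': 6.25}
```

Under this reading, a quarter of the fleet delivers roughly twice the savings that linear scaling would predict.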

Down the road, the researchers want to study interaction effects between multiple intersections. They also plan to explore how different intersection set-ups (number of lanes, signals, timings, etc.) can influence travel time, emissions, and fuel consumption. In addition, they intend to study how their control system could impact safety when autonomous vehicles and human drivers share the road. For instance, even though autonomous vehicles may drive differently than human drivers, slower roadways and roadways with more consistent speeds could improve safety, Wu says.

While this work is still in its early stages, Wu sees this approach as one that could be more feasibly implemented in the near term.

“The aim in this work is to move the needle in sustainable mobility. We want to dream, as well, but these systems are big monsters of inertia. Identifying points of intervention that are small changes to the system but have significant impact is something that gets me up in the morning,” she says.

“Professor Cathy Wu’s recent work shows how eco-driving provides a unified framework for reducing fuel consumption, thus minimizing carbon dioxide emissions, while also giving good results on average travel time. More specifically, the reinforcement learning approach pursued in Wu’s work, by leveraging the use of connected autonomous vehicles technology, provides a feasible and attractive framework for other researchers in the same space,” says Ozan Tonguz, professor of electrical and computer engineering at Carnegie Mellon University, who was not involved with this research. “Overall, this is a very timely contribution in this burgeoning and important research area.”

This work was supported, in part, by the MIT-IBM Watson AI Lab.