Voice assistants like Siri and Alexa can tell the weather and crack a good joke, but any 8-year-old can carry on a better conversation.

The deep learning models that power Siri and Alexa learn to understand our commands by picking out patterns in sequences of words and phrases. Their narrow, statistical understanding of language stands in sharp contrast to our own creative, spontaneous ways of speaking, a skill that starts developing while we are still in the womb.

To give computers some of our innate feel for language, researchers have started training deep learning models on the grammatical rules that most of us grasp intuitively, even if we never learned how to diagram a sentence in school. Grammatical constraints seem to help the models learn faster and perform better, but because neural networks reveal very little about their decision-making process, researchers have struggled to confirm that the gains are due to the grammar, and not to the models’ expert ability at finding patterns in sequences of words.

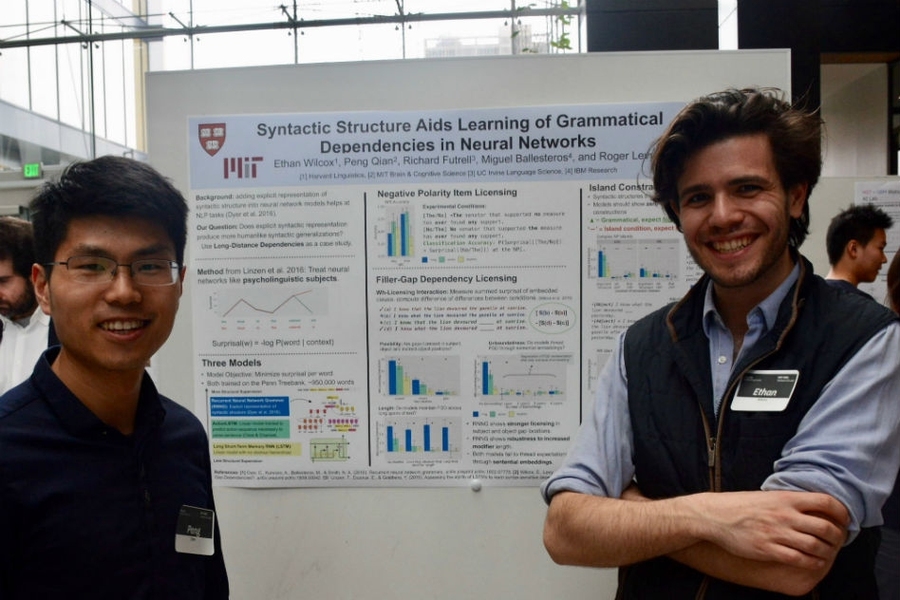

Now psycholinguists have stepped in to help. To peer inside the models, researchers have taken psycholinguistic tests originally developed to study human language understanding and adapted them to probe what neural networks know about language. In a pair of papers to be presented in June at the North American Chapter of the Association for Computational Linguistics conference, researchers from MIT, Harvard University, University of California, IBM Research, and Kyoto University have devised a set of tests to tease out the models’ knowledge of specific grammatical rules. They find evidence that grammar-enriched deep learning models comprehend some fairly sophisticated rules, performing better than models trained on little-to-no grammar, and using a fraction of the data.

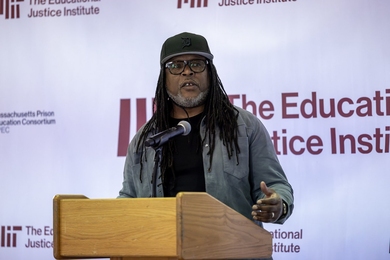

“Grammar helps the model behave in more human-like ways,” says Miguel Ballesteros, an IBM researcher with the MIT-IBM Watson AI Lab, and co-author of both studies. “The sequential models don’t seem to care if you finish a sentence with a non-grammatical phrase. Why? Because they don’t see that hierarchy.”

As a postdoc at Carnegie Mellon University, Ballesteros helped develop recurrent neural network grammars, or RNNGs, a method for training modern language models on sentence structure. In the current research, he and his colleagues exposed the RNNG model, and similar models with little-to-no grammar training, to sentences with good, bad, or ambiguous syntax. When human subjects are asked to read sentences that sound grammatically off, their surprise is registered by longer response times. For computers, surprise is expressed in probabilities: when a low-probability word appears where a high-probability word was expected, researchers assign the model a higher surprisal score.
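
The article does not spell out the formula the researchers used, but surprisal is conventionally defined as the negative log probability a model assigns to a word given the words before it. The sketch below illustrates that standard definition only. The `surprisal` helper and the `next_word_probs` table are hypothetical, with probabilities invented for illustration rather than taken from any model in the studies.

```python
import math


def surprisal(probability: float) -> float:
    """Return surprisal in bits: -log2 of the probability given to a word."""
    if not 0.0 < probability <= 1.0:
        raise ValueError("probability must be in the interval (0, 1]")
    return -math.log2(probability)


# Hypothetical next-word probabilities from some language model,
# invented for illustration (not results from the papers).
next_word_probs = {"the": 0.5, "a": 0.25, "quickly": 0.125, "of": 0.125}

# A word the model considered likely yields low surprisal ...
expected = surprisal(next_word_probs["the"])
# ... while a word it considered unlikely yields high surprisal.
unexpected = surprisal(next_word_probs["of"])

print(f"likely word: {expected:.1f} bits, unlikely word: {unexpected:.1f} bits")
```

Under this definition, halving a word's probability adds one bit of surprisal, which is why a word the model barely anticipated stands out sharply against one it expected.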

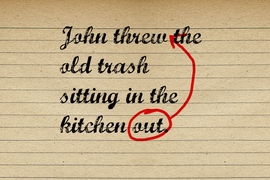

They found that the best-performing model, the grammar-enriched RNNG, showed greater surprisal when exposed to grammatical anomalies, for example when the word “that” improperly appears instead of “what” to introduce an embedded clause. “I know what the lion devoured at sunrise” is a perfectly natural sentence, but “I know that the lion devoured at sunrise” sounds like it has something missing, because it does.

Linguists call this type of construction a dependency between a filler (a word like who or what) and a gap (the absence of a phrase where one is typically required). Even when more complicated constructions of this type are shown to grammar-enriched models, they — like native speakers of English — clearly know which ones are wrong.

For example, “The policeman who the criminal shot the politician with his gun shocked during the trial” is anomalous; the gap corresponding to the filler “who” should come after the verb, “shot,” not “shocked.” Rewriting the sentence to change the position of the gap, as in “The policeman who the criminal shot with his gun shocked the jury during the trial,” is longwinded, but perfectly grammatical.

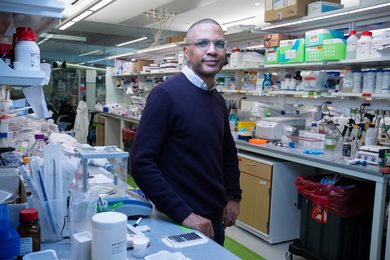

“Without being trained on tens of millions of words, state-of-the-art sequential models don’t care where the gaps are and aren’t in sentences like those,” says Roger Levy, a professor in MIT’s Department of Brain and Cognitive Sciences, and co-author of both studies. “A human would find that really weird, and, apparently, so do grammar-enriched models.”

Bad grammar, of course, not only sounds weird; it can turn an entire sentence into gibberish. That underscores the importance of syntax in cognition, and to the psycholinguists who study syntax to learn more about the brain’s capacity for symbolic thought. “Getting the structure right is important to understanding the meaning of the sentence and how to interpret it,” says Peng Qian, a graduate student at MIT and co-author of both studies.

The researchers plan to next run their experiments on larger datasets and find out if grammar-enriched models learn new words and phrases faster. Just as submitting neural networks to psychology tests is helping AI engineers understand and improve language models, psychologists hope to use this information to build better models of the brain.

“Some component of our genetic endowment gives us this rich ability to speak,” says Ethan Wilcox, a graduate student at Harvard and co-author of both studies. “These are the sorts of methods that can produce insights into how we learn and understand language when our closest kin cannot.”