Very few of us who play video games or watch movies filled with computer-generated imagery ever take the time to sit back and appreciate all the handiwork that makes their graphics so thrilling and immersive.

One key aspect of this is texture. The glossy pictures we see on our screens often appear seamlessly rendered, but they require huge amounts of work behind the scenes. When effects studios create scenes in computer-aided design programs, they first build 3D models of all the objects they plan to put in the scene and then give each object a texture: for example, making a wooden table appear glossy, polished, or matte.

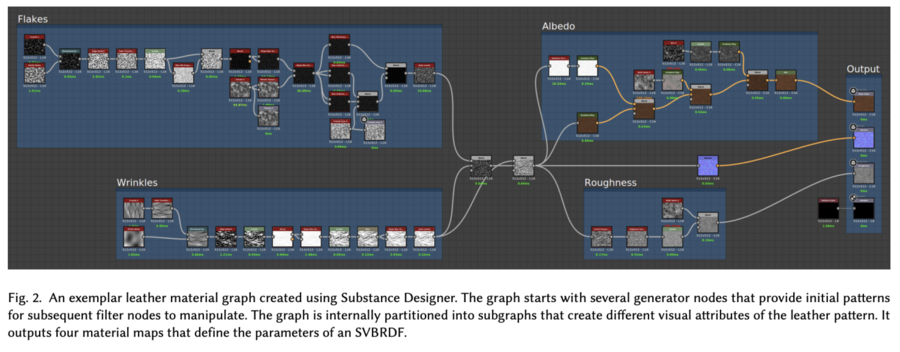

If designers are trying to recreate a particular texture from the real world, they may find themselves digging around online for a close match that can be stitched into the scene. But most of the time you can’t just take a photo of an object and use it in a scene: you have to create a set of “maps” that quantify different properties, like roughness or light levels.

Some programs have made this process easier than ever before, like the Adobe Substance software that helped create the photorealistic ruins of Las Vegas in “Blade Runner 2049.” However, these so-called “procedural” programs can take months to learn and still involve hours or even days of painstaking work to create a particular texture.

A team led by researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) has developed an approach that they say can make texturing far less tedious, to the point where you could snap a picture of something you see in a store and then recreate the material on your home laptop.

“Imagine being able to take a photo of a pair of jeans that you can then have your character wear in a video game,” says PhD student Liang Shi, lead author of a paper about the new “MATch” project. “We believe this system would further close the gap between ‘virtual’ and ‘reality.’”

Shi says that the goal of MATch is to “significantly simplify and expedite the creation of synthetic materials using machine learning.” The team evaluated MATch on both rendered synthetic materials and real materials captured on camera, and showed that it can reconstruct materials more accurately and at a higher resolution than existing state-of-the-art methods.

The project is a collaboration with researchers at Adobe, and one core element is a new library called “DiffMat” that essentially provides the various building blocks for constructing different textured materials.

The team’s framework involves dozens of so-called “procedural graphs” made up of different nodes that each act like a miniature Instagram filter: they take some input and transform it in a certain artistic way to produce an output.

“A graph simply defines a way to combine hundreds of such filters to achieve a very complex visual effect, like a particular texture,” says Shi. “The neural network selects the most appropriate combinations of filter nodes until it perceptually matches the appearance of the user’s input image.”
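To make the idea of chaining filter nodes concrete, here is a toy sketch in Python. It is not MATch or DiffMat: the node functions (`stripes`, `contrast`, `tint`), the fixed three-node chain, and the brute-force search scored by mean squared pixel error are all hypothetical stand-ins, chosen only to show how a graph of simple filters can be tuned until its output matches a target image.

```python
# Toy, hypothetical illustration of a procedural graph; not the MATch or DiffMat code.
import math
from itertools import product

# A grayscale image as rows of pixel values in [0, 1].
Image = list[list[float]]


def stripes(size: int, frequency: float) -> Image:
    """Generator node: sine stripes, a crude stand-in for a grain-like pattern."""
    return [[0.5 + 0.5 * math.sin(2 * math.pi * frequency * x / size)
             for x in range(size)]
            for _ in range(size)]


def contrast(img: Image, gain: float) -> Image:
    """Filter node: stretch values around mid-gray, then clamp to [0, 1]."""
    return [[min(1.0, max(0.0, 0.5 + gain * (v - 0.5))) for v in row] for row in img]


def tint(img: Image, brightness: float) -> Image:
    """Filter node: scale the overall brightness."""
    return [[v * brightness for v in row] for row in img]


def run_graph(params: dict[str, float], size: int = 16) -> Image:
    """Evaluate a fixed, hypothetical three-node chain: stripes -> contrast -> tint."""
    img = stripes(size, params["frequency"])
    img = contrast(img, params["gain"])
    return tint(img, params["brightness"])


def mse(a: Image, b: Image) -> float:
    """Mean squared pixel difference (a simple proxy, not a perceptual loss)."""
    total = sum((x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb))
    return total / (len(a) * len(a[0]))


def fit(target: Image, grid: dict[str, list[float]]) -> dict[str, float]:
    """Try every parameter combination; keep the one whose output best matches target."""
    names = list(grid)
    best, best_err = {}, float("inf")
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        err = mse(run_graph(params, len(target)), target)
        if err < best_err:
            best, best_err = params, err
    return best


# Usage: render a "photo" from known settings, then recover them from the image alone.
target = run_graph({"frequency": 3.0, "gain": 1.5, "brightness": 0.8})
grid = {
    "frequency": [1.0, 2.0, 3.0, 4.0],
    "gain": [0.5, 1.0, 1.5, 2.0],
    "brightness": [0.6, 0.8, 1.0],
}
print(fit(target, grid))  # {'frequency': 3.0, 'gain': 1.5, 'brightness': 0.8}
```

Exhaustive search scales badly beyond a handful of parameters, and a pixel-by-pixel error would penalize two wood samples whose grain lines are merely shifted. A practical system needs a smarter search and a comparison based on overall appearance, which is the role Shi’s description assigns to the neural network.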

Moving forward, Shi says that the team would like to go beyond inputting just a single flat sample, and to instead be able to capture materials from images of curved objects, or with multiple materials in the image.

They also hope to expand the pipeline to handle more complex materials whose properties depend on their orientation. (Wood, for example, has visible lines running in one direction, “with the grain,” and it is stronger along the grain than against it.)

Shi co-wrote the paper with MIT Professor Wojciech Matusik, alongside MIT graduate student research scientist Beichen Li and Adobe researchers Miloš Hašan, Kalyan Sunkavalli, Radomír Měch, and Tamy Boubekeur. The paper will be presented virtually this month at the SIGGRAPH Asia computer graphics conference.

The work is supported, in part, by the National Science Foundation.