The human brain can recognize thousands of different objects, but neuroscientists have long grappled with how the brain organizes object representation — in other words, how the brain perceives and identifies different objects.

Now researchers at MIT’s Computer Science and Artificial Intelligence Lab (CSAIL) and Department of Brain and Cognitive Sciences have discovered that the brain organizes objects based on their physical size, with a specific region of the brain reserved for recognizing large objects and another reserved for small objects. Their findings, to be published in the June 21 issue of Neuron, could have major implications for fields like robotics, and could lead to a greater understanding of how the brain organizes and maps information.

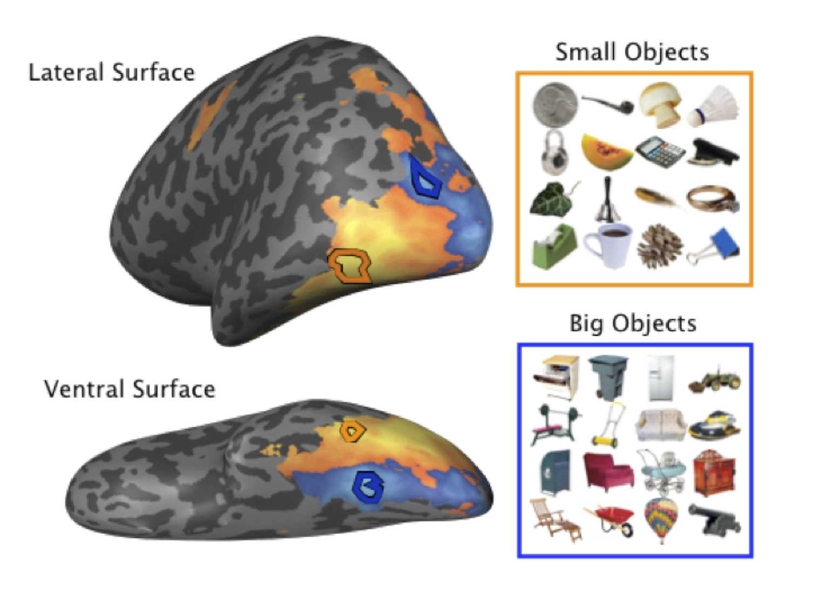

As part of their study, researchers Talia Konkle and Aude Oliva took 3-D scans of brain activity during experiments in which participants were asked to look at images of big and small objects or to visualize items of differing size. By evaluating the scans, the researchers found that distinct regions of the brain respond to big objects (for example, a chair or a table) and to small objects (for example, a paperclip or a strawberry).

By looking at the arrangement of the responses, they found a systematic organization of big-to-small object responses across the brain’s cerebral cortex. Large objects, they learned, are processed in the parahippocampal region of the brain, an area next to the hippocampus that is also responsible for navigating through spaces and for processing the location of different places, like the beach or a building. Small objects are handled in the inferior temporal region of the brain, near regions that are active when the brain has to manipulate tools like a hammer or a screwdriver.

The work could have major implications for the field of robotics, in particular in developing techniques for how robots deal with different objects, from grasping a pen to sitting in a chair.

“Our findings shed light on the geography of the human brain, and could provide insight into developing better machine interfaces for robots,” Oliva says.

Many computer vision techniques currently focus on identifying what an object is without much guidance about its size, information that could be useful in recognition. “Paying attention to the physical size of objects may dramatically constrain the number of objects a robot has to consider when trying to identify what it is seeing,” Oliva says.

The study’s findings are also important for understanding how the organization of the brain may have evolved. The work of Konkle and Oliva suggests that the human visual system’s method for organizing thousands of objects may also be tied to human interactions with the world. “If experience in the world has shaped our brain organization over time, and our behavior depends on how big objects are, it makes sense that the brain may have established different processing channels for different actions, and at the center of these may be size,” Konkle says.

Oliva, a cognitive neuroscientist by training, has focused much of her research on how the brain tackles scene and object recognition, as well as visual memory. Her ultimate goal is to gain a better understanding of the brain’s visual processes, paving the way for the development of machines and interfaces that can see and understand the visual world like humans do.

“Ultimately, we want to focus on how active observers move in the natural world. We think this not only matters for large-scale brain organization of the visual system, but it also matters for making machines that can see like us,” Konkle and Oliva say.

This research was funded by a National Science Foundation graduate fellowship and a National Eye Institute grant, and was conducted at the Athinoula A. Martinos Imaging Center at MIT’s McGovern Institute for Brain Research.
