On Jan. 7, 2011, MIT kicks off the celebration of its 150th anniversary, and one of the features making its debut on the MIT150 website is a collection of more than 100 video interviews with MIT luminaries. Dubbed the Infinite History, the collection has an innovative navigation interface whose development is an apt illustration of the MIT motto mens et manus — “mind and hand,” theory and application.

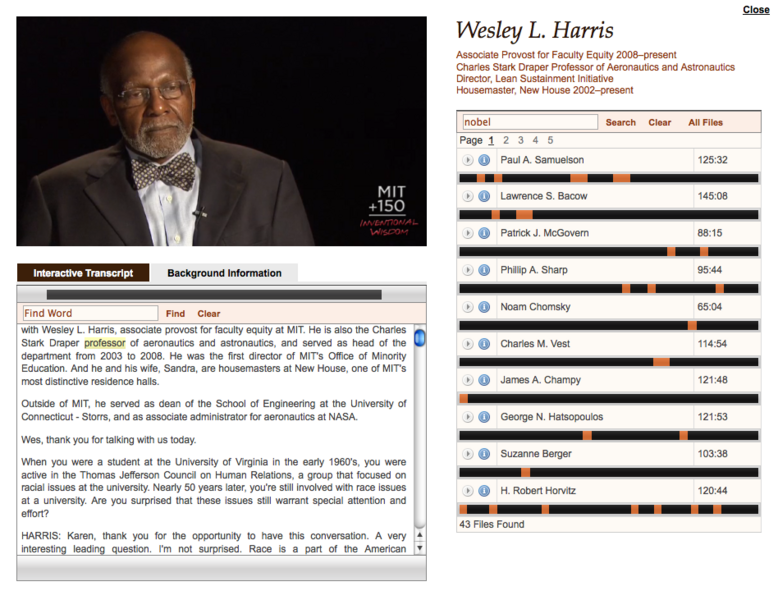

When one of the Infinite History videos launches, a scrolling transcript of the interview appears underneath it, and each word of the transcript is highlighted as it’s spoken. The viewer can browse through the transcript or use a search function to find a particular term; clicking on a word or group of words in the transcript automatically forwards the video to the corresponding section.
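As a rough illustration of how a word-synchronized transcript like this could work, the sketch below assumes each transcript word carries a start time in seconds. The `TimedWord` type and both function names are hypothetical and are not taken from 3Play's or MIT's software. Finding the word to highlight at a given playback time is a binary search over the start times, and a click on a word simply maps back to that word's start time.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass
class TimedWord:
    text: str
    start: float  # seconds from the beginning of the video (assumed format)


def word_index_at(words, t):
    """Index of the word being spoken at playback time t, or None before the first word."""
    starts = [w.start for w in words]
    i = bisect_right(starts, t) - 1
    return i if i >= 0 else None


def seek_time_for(words, index):
    """Playback position to jump to when the word at `index` is clicked."""
    return words[index].start


# Made-up sample data for illustration only.
transcript = [TimedWord("mind", 0.0), TimedWord("and", 0.4), TimedWord("hand", 0.7)]
highlighted = word_index_at(transcript, 0.5)  # the word in progress at 0.5 s
jump_to = seek_time_for(transcript, 2)        # clicking "hand"
```

A real player would run the lookup on each playback-time update and hand the seek time to the video element; those details are outside what the article describes.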

Next to the video window is yet another search bar that can pull up a list of all the videos in the collection that contain a given term, even as the interview in the video window continues playing. Underneath each title in the list, the occurrences of the search term are depicted as orange hashes on a black bar representing the video’s duration. Clicking on a hash pulls up the corresponding section of the transcript, and clicking the transcript launches that section of the video in the video window.
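The hash marks on the duration bar can be thought of as each hit's timestamp divided by the video's length. The sketch below is one way to compute them, assuming every video is stored as a duration plus a list of (word, start time) pairs. The data layout, the function names, and the sample titles are all hypothetical, not a description of the actual Infinite History index.

```python
def hit_positions(words, duration, term):
    """Fractional positions (0.0 to 1.0) along the bar where `term` is spoken."""
    term = term.lower()
    return [start / duration for text, start in words if text.lower() == term]


def search_collection(videos, term):
    """Map each video title containing `term` to its list of hash positions."""
    results = {}
    for title, (duration, words) in videos.items():
        hits = hit_positions(words, duration, term)
        if hits:
            results[title] = hits
    return results


# Made-up sample collection: title -> (duration in seconds, timed words).
videos = {
    "Interview A": (100.0, [("radar", 25.0), ("lab", 30.0), ("Radar", 50.0)]),
    "Interview B": (60.0, [("economics", 10.0)]),
}
radar_hits = search_collection(videos, "radar")
```

Multiplying each fraction by the bar's pixel width would give the horizontal offset of the corresponding hash.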

The Infinite History is the result of a collaboration that began nearly three years ago. Planning for the 150th anniversary has been going on for even longer, and early in the process, the MIT150 organizers suggested a series of interviews to Larry Gallagher, director of MIT’s Academic Media Production Services. Gallagher knew that Jim Glass, who heads the Computer Science and Artificial Intelligence Laboratory’s Spoken Language Systems Group, had been working on software that would automatically transcribe and index lectures recorded as part of MIT and Microsoft’s iCampus project. “I proposed that if we could get this to work, wouldn’t this be a very engaging way for the user community to be able to navigate through the database?” Gallagher says.

Like most general-purpose speech-recognition systems, however, Glass’s was rarely more than about 80-percent accurate. For some purposes, that’s fine: If you’re combing through thousands of recorded lectures for the two or three that mention, say, “Lagrangians,” 80-percent accuracy will probably help narrow down your search significantly. But for something as high-profile as the MIT150 website, transcripts with a 20-percent error rate wouldn’t do.

Glass, however, pointed out that C. J. Johnson ’02, MBA ’08 had done his graduate work on the specific problem of, as Glass puts it, “closing the gap between where speech recognition was and where, say, a human transcript would be.” After getting his degree from the MIT Sloan School of Management, Johnson had cofounded a Cambridge company, 3Play Media, to commercialize technology he had begun developing as an independent study in Glass’s lab.

3Play uses commercial speech-recognition technology to produce an initial draft transcript of a video file and then farms the corrections out to subcontractors, generally recruited online, who work remotely. What makes the process cost-effective, Johnson explains, is the technology he began developing at MIT, a Web application that allows contractors to quickly recognize and correct errors. “Making sure that the transcripts are accurate, both on the time and the text end, is actually a very difficult task,” Johnson says.

3Play also provides the navigation system that allows viewers to browse through video by clicking on keywords, which was field-tested by the Infinite History project. “Larry knew me back when I was just working with Jim, doing research and still at Sloan as a grad student,” Johnson says. “I worked closely with him, and he was certainly influential in refining the product to where it is now.” MIT was also an early adopter of a 3Play product called Clipmaker, which allows video editors to excise segments of video simply by highlighting the corresponding text in the transcript. Gallagher’s department has been using the technology to, among other things, edit footage for several documentaries about MIT that will also be part of the MIT150 celebration. “It’s a video producer’s dream,” Gallagher says.

In previews, the Infinite History was getting good reviews from MIT historians and archivists. “This is the first oral-history project on this scale that I’m aware of which allows the viewer to see the interview and track the transcript at the same time,” says Ann Wolpert, MIT’s director of libraries.

“It will certainly make video a lot more accessible,” adds David Mindell, who directs MIT’s Program in Science, Technology, and Society and chairs the MIT150 Steering Committee. “I teach a class on the history of MIT, and it’s a huge resource for us.”

“There’s a lot of stories to be told, a lot of information that you don’t find in the textual world, in letters and things,” says Tom Rosko, head of the Institute Archives. “Especially when you have people conversing, more often comes out.” Rosko adds that the collection already has an import that may have been unforeseen when it was created, in that three of the videos’ subjects — former MIT President Howard Johnson, Nobel-winning economist Paul Samuelson and former dean of the architectural school Bill Mitchell — have died since they were interviewed.

“This collection is not just going to go away after the 150th,” Gallagher says. “These will only become more valuable in time.”
