Lip reading is a critical means of communication for many deaf people, but it has a drawback: Certain consonants (for example, p and b) can be nearly impossible to distinguish by sight alone.

Tactile devices, which translate sound waves into vibrations that can be felt by the skin, can help overcome that obstacle by conveying nuances of speech that can't be gleaned from lip reading.

Researchers in MIT's Sensory Communication Group are working on a new generation of such devices, which could be an important tool for deaf people who rely on lip reading and can't use or can't afford cochlear implants. The cost of the device and the surgery make cochlear implants prohibitive for many people, especially in developing countries.

"Most deaf people will not have access to that technology in our lifetime," said Ted Moallem, a graduate student working on the project. "Tactile devices can be several orders of magnitude cheaper than cochlear implants."

Moallem and Charlotte Reed, senior research scientist in MIT's Research Laboratory of Electronics and leader of the project, say the software they are developing could be compatible with current smart phones, allowing such devices to be transformed into unobtrusive tactile aids for the deaf.

"Anyone who has a smart phone already has much of what they would need to run the program," including a microphone, digital signal-processing capability, and a rudimentary vibration system, says Moallem.

Sensing vibrations

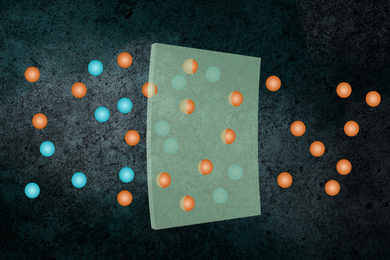

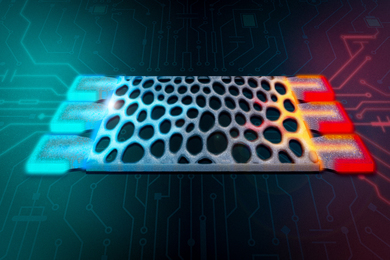

Tactile devices convert sound waves into vibrations whose patterns vary with the frequency of the sound, so the user can learn to tell different frequencies apart by touch. The MIT researchers are testing devices that have at least two vibration ranges: one for high-frequency sounds and one for low-frequency sounds.
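To make the two-range idea concrete, here is a minimal sketch, assuming a simple split of the audio into a low band and a high band at a hypothetical 1,500 Hz crossover, with each band's loudness measured in 10 ms frames to set the strength of one vibrator. The function names, the crossover frequency, and the first-order filter are illustrative assumptions, not the MIT group's signal processing.

```python
import math

def one_pole_lowpass(samples, cutoff_hz, sample_rate):
    """First-order IIR low-pass filter: y[n] = y[n-1] + a * (x[n] - y[n-1])."""
    # Smoothing coefficient from the RC time constant of the chosen cutoff.
    dt = 1.0 / sample_rate
    rc = 1.0 / (2 * math.pi * cutoff_hz)
    a = dt / (rc + dt)
    out, y = [], 0.0
    for x in samples:
        y += a * (x - y)
        out.append(y)
    return out

def split_bands(samples, sample_rate, cutoff_hz=1500.0):
    """Split a signal into a low band and the complementary high band."""
    low = one_pole_lowpass(samples, cutoff_hz, sample_rate)
    high = [x - l for x, l in zip(samples, low)]  # whatever the low-pass removed
    return low, high

def frame_rms(samples, frame_len):
    """Root-mean-square level of consecutive, non-overlapping frames."""
    levels = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        levels.append(math.sqrt(sum(s * s for s in frame) / frame_len))
    return levels

def two_channel_intensities(samples, sample_rate, frame_ms=10, cutoff_hz=1500.0):
    """Return per-frame (low, high) vibration intensities scaled to [0, 1].

    Hypothetical mapping: each band's RMS level drives one vibrator.
    """
    low, high = split_bands(samples, sample_rate, cutoff_hz)
    frame_len = int(sample_rate * frame_ms / 1000)
    low_rms = frame_rms(low, frame_len)
    high_rms = frame_rms(high, frame_len)
    peak = max(low_rms + high_rms + [1e-12])  # avoid dividing by zero on silence
    return [(l / peak, h / peak) for l, h in zip(low_rms, high_rms)]
```

For example, a 200 Hz tone sampled at 16 kHz should mostly drive the low-frequency vibrator, while a 5,000 Hz tone (closer to the hiss of consonants such as s) should mostly drive the high-frequency one. A real aid would likely use sharper filters and more bands; the first-order split is chosen here only because it is short and easy to follow.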

With such handheld devices, deaf people can follow conversations more easily than with lip reading alone, which requires a great deal of concentration, says Moallem.

"It's hard to have a casual conversation in a situation where you have to be paying attention like that," he says.

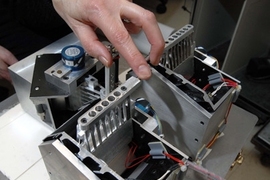

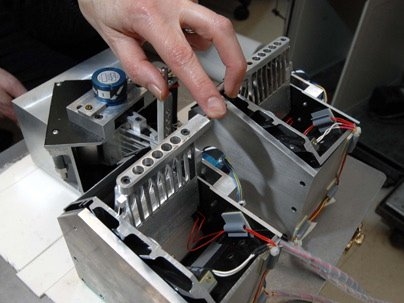

Current prototypes can be held in the user's hand or worn around the back of the neck, but once the acoustic processing software is developed, it could be easily incorporated into existing smart phones, according to the researchers. To lay the groundwork for such future applications, the researchers are investigating the best way to transform sound waves into vibrations.

Existing tactile aids have been in use for decades, but the MIT team hopes to improve the devices by refining the acoustic signal processing systems to provide tactile cues that are tailored to boost lip-reading performance, says Reed.

As part of their project, the researchers have done several studies on the frequency reception ability of the skin. The human ear can perceive frequencies up to 20,000 hertz, but the skin's touch receptors respond best to frequencies below 500 hertz.
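Because most speech energy that matters for consonants lies well above 500 hertz, a tactile aid cannot simply pass the sound through to a vibrator. One common approach, sketched here under stated assumptions, is to use each band's loudness to modulate a low-frequency carrier the skin can feel. The 250 Hz carrier, 8 kHz output rate, and function name below are hypothetical choices; only the 500 Hz ceiling comes from the text.

```python
import math

SKIN_MAX_HZ = 500.0  # upper end of the skin's optimal range, per the text

def tactile_drive(intensities, carrier_hz=250.0, sample_rate=8000, frame_ms=10):
    """Amplitude-modulate a sine carrier with per-frame intensities (0..1).

    Hypothetical helper: each intensity sets the vibration amplitude for one
    frame, so the skin feels how strong a frequency band is without having
    to sense that band's own, possibly much higher, frequency.
    """
    if not 0 < carrier_hz < SKIN_MAX_HZ:
        raise ValueError("carrier must lie below the skin's ~500 Hz optimum")
    frame_len = int(sample_rate * frame_ms / 1000)
    out = []
    n = 0  # running sample index keeps the carrier phase continuous across frames
    for level in intensities:
        for _ in range(frame_len):
            out.append(level * math.sin(2 * math.pi * carrier_hz * n / sample_rate))
            n += 1
    return out
```

Each vibrator in a multi-range device would get its own drive signal built this way from its band's intensities; using a different carrier frequency per vibrator is another possible design, as long as every carrier stays under 500 Hz.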

Using a laboratory setup with a device that can provide distinct vibration patterns to three fingers simultaneously, Moallem has done preliminary studies of deaf people's ability to interpret the vibrations from tactile devices.

This project was originally inspired by earlier studies Reed did on the Tadoma technique, a communication method taught to deaf-blind people. Practitioners of that method hold their hands to a speaker's face while that person is talking, allowing them to feel the vibrations of the face and neck.

Reed's study, done about 20 years ago, showed that the deaf-blind subjects could successfully understand speech with this method, especially if the other person spoke clearly and slowly.

"We were inspired by seeing what deaf-blind people could accomplish just using the sense of touch alone," says Reed.

This research is funded by the National Institute on Deafness and Other Communication Disorders.

A version of this article appeared in MIT Tech Talk on March 4, 2009.