MIT researchers have developed data-compression software that could have a significant impact on the high-stakes race to provide high-quality image and video data over ordinary telephone lines.

The software is of special interest for Internet applications. For example, it could soon allow cyber-shoppers to download multiple catalog images in a fraction of a second, click on the page that catches their eye, and then zoom and pan seamlessly around the image to examine the product's features more closely.

Other applications include digital TV, teleconferencing, telemedicine, CD-ROM, computer graphics and database archiving. The software, which is based on an area of mathematics known as wavelet theory, was developed by Associate Professor John R. Williams and graduate student Kevin Amaratunga. Both are affiliated with the Department of Civil and Environmental Engineering and the Intelligent Engineering Systems Laboratory.

Compression technology is necessary for delivering digital information, whether audio, text, images or video. To deliver an image over a network quickly, either the communication channel must be large enough or the information must be packed into a small enough package. Currently, the communication channels available for digital information are much too small for a typical digital image file, a problem usually referred to as a lack of bandwidth.

Bandwidth can be thought of as the rate at which information can flow through a channel, measured in bits per second. Ordinary telephone lines are the most ubiquitous information channels; however, they have very limited bandwidth. Higher-bandwidth lines such as ISDN and cable exist, but the infrastructure for these channels is not yet complete and will require significant additional investment.

The following example shows how staggering this bandwidth problem can be. Start with a typical uncompressed color image which, in its digital form, is represented by 250,000 bytes, or 2 million bits. Transferring this uncompressed image over an ordinary telephone line, which transmits about 8,000 bits/sec, would take 250 seconds, or about four minutes. Clearly, a cyber-shopper is not going to wait four minutes to download the next image from an on-line catalog.
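The arithmetic above can be sketched in a few lines of Python. The function name `transfer_seconds` and the compression ratios in the loop are hypothetical, chosen only to show how the wait shrinks as compression improves; they are not figures reported for the MIT software.

```python
BITS_PER_BYTE = 8

def transfer_seconds(num_bytes: int, line_bits_per_sec: float,
                     compression_ratio: float = 1.0) -> float:
    """Seconds needed to send num_bytes after compressing it by compression_ratio:1."""
    if line_bits_per_sec <= 0 or compression_ratio <= 0:
        raise ValueError("line speed and compression ratio must be positive")
    bits_to_send = num_bytes * BITS_PER_BYTE / compression_ratio
    return bits_to_send / line_bits_per_sec

IMAGE_BYTES = 250_000  # uncompressed color image from the example above
LINE_BPS = 8_000       # telephone-line rate used in the example above

t = transfer_seconds(IMAGE_BYTES, LINE_BPS)
print(f"uncompressed: {t:.0f} s (about {t / 60:.1f} min)")  # uncompressed: 250 s (about 4.2 min)

# Hypothetical compression ratios, for illustration only.
for ratio in (10, 50, 100):
    print(f"{ratio}:1 -> {transfer_seconds(IMAGE_BYTES, LINE_BPS, ratio):.1f} s")
# 10:1 -> 25.0 s
# 50:1 -> 5.0 s
# 100:1 -> 2.5 s
```

Because transfer time falls in inverse proportion to the compression ratio, every tenfold improvement in compression cuts the wait tenfold on the same telephone line.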

Compression technologies address this issue by reducing the amount of information that must be transmitted to fully recreate the desired data. However, today's "standard" compression algorithms, Joint Photographic Experts Group (JPEG) for still images and Moving Picture Experts Group (MPEG) for video, cannot achieve high enough compression ratios without significant loss of quality. Add to this the heavy processing load that JPEG and MPEG impose, and it is easy to see why delivering images and video over on-line services and the Internet has remained impractical.

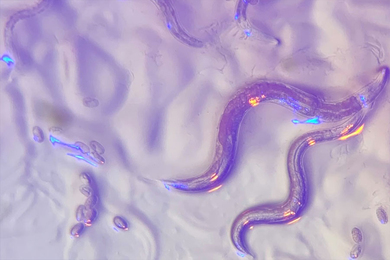

So why all the excitement about MIT's wavelet software? Wavelets represent a significant improvement over JPEG and MPEG, which are based on the discrete cosine transform (a close relative of the Fourier transform), in their ability to pack tremendous amounts of data into tiny volumes without straining a computer's central processing unit. Wavelets do a better job of capturing the key features in an image without losing sight of the intricate details that are also present. MIT researchers have extended the power of wavelets by developing technology that allows an image to be broken into smaller blocks for more efficient wavelet processing while preserving image quality.
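To make the idea concrete, here is a minimal sketch of the simplest wavelet, the Haar wavelet, applied to a single row of pixel values. It is a textbook technique, not the MIT researchers' patented block method; the function names, the sample row and the threshold `eps` are hypothetical. Each level replaces pairs of values with their average (the coarse feature) and half their difference (the fine detail). Smooth regions yield details near zero that can be discarded, while a sharp edge survives as one large coefficient.

```python
from typing import List, Tuple

def haar_forward(signal: List[float], levels: int) -> Tuple[List[float], List[List[float]]]:
    """Multi-level 1-D Haar transform (averaging/differencing form).

    Returns the coarsest approximation and the detail bands, finest first.
    The signal length must be divisible by 2**levels.
    """
    approx = list(signal)
    details: List[List[float]] = []
    for _ in range(levels):
        if len(approx) % 2:
            raise ValueError("length must be divisible by 2**levels")
        new_approx, detail = [], []
        for a, b in zip(approx[0::2], approx[1::2]):
            new_approx.append((a + b) / 2)  # local average: coarse feature
            detail.append((a - b) / 2)      # half the local difference: fine detail
        details.append(detail)
        approx = new_approx
    return approx, details

def haar_inverse(approx: List[float], details: List[List[float]]) -> List[float]:
    """Undo haar_forward, rebuilding from the coarsest level to the finest."""
    signal = list(approx)
    for detail in reversed(details):
        out: List[float] = []
        for avg, diff in zip(signal, detail):
            out.extend((avg + diff, avg - diff))
        signal = out
    return signal

def threshold(details: List[List[float]], eps: float) -> List[List[float]]:
    """Zero out detail coefficients smaller than eps in magnitude (the lossy step)."""
    return [[d if abs(d) >= eps else 0.0 for d in band] for band in details]

row = [10, 10, 12, 12, 200, 200, 200, 204]  # flat area, gentle ramp, sharp edge
approx, details = haar_forward(row, levels=3)
print(approx, details)  # [106.0] [[0.0, 0.0, 0.0, -2.0], [-1.0, -1.0], [-95.0]]
assert haar_inverse(approx, details) == row  # lossless before thresholding

kept = threshold(details, eps=2.5)
print(haar_inverse(approx, kept))  # [11.0, 11.0, 11.0, 11.0, 201.0, 201.0, 201.0, 201.0]
```

After thresholding, only two of the eight coefficients are nonzero, yet the sharp jump from 11 to 201 survives; a smaller `eps` keeps more of the fine detail at the cost of less compression. For a two-dimensional image, the same transform is typically applied along the rows and then along the columns.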

MIT's wavelet-based software not only enables high compression ratios without losing image quality, but also allows new ways of delivering images and exploring them when they arrive. The MIT research was sponsored through the Intelligent Engineering Systems Lab by Kajima, NTT Data and Shimizu. MIT has applied for patent protection for this innovation in wavelet technology.

A version of this article appeared in MIT Tech Talk on June 7, 1995.