How should personal data be protected? What are the best uses of it? In our networked world, questions about data privacy are ubiquitous and matter for companies, policymakers, and the public.

A new study by MIT researchers adds depth to the subject by suggesting that people’s views about privacy are not firmly fixed and can shift significantly, depending on the circumstances and on how the data are used.

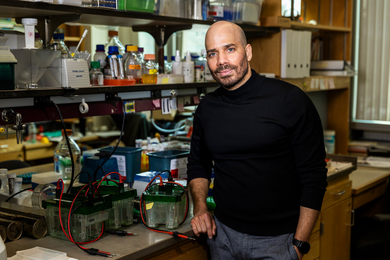

“There is no absolute value in privacy,” says Fabio Duarte, principal research scientist in MIT’s Senseable City Lab and co-author of a new paper outlining the results. “Depending on the application, people might feel use of their data is more or less invasive.”

The study is based on an experiment the researchers conducted in multiple countries using a newly developed game that elicits public valuations of data privacy relating to different topics and domains of life.

“We show that values attributed to data are combinatorial, situational, transactional, and contextual,” the researchers write.

The open-access paper, “Data Slots: tradeoffs between privacy concerns and benefits of data-driven solutions,” is published today in Nature: Humanities and Social Sciences Communications. The authors are Martina Mazzarello, a postdoc in the Senseable City Lab; Duarte; Simone Mora, a research scientist at Senseable City Lab; Cate Heine PhD ’24 of University College London; and Carlo Ratti, director of the Senseable City Lab.

The study is based on Data Slots, a card game with poker-type chips that the researchers created to study the issue. In it, players hold hands of cards with 12 types of data (such as a personal profile, health data, and vehicle location information) that relate to three types of domains where data are collected: home life, work, and public spaces. After exchanging cards, the players generate ideas for data uses, then assess and invest in some of those concepts. The game has been played in person in 18 different countries, with people from another 74 countries playing it online; over 2,000 individual player-rounds were included in the study.

The point behind the game is to examine the valuations that members of the public themselves generate about data privacy. Some research on the subject involves surveys with pre-set options that respondents choose from. But in Data Slots, the players themselves generate valuations for a wide range of data-use scenarios, allowing the researchers to estimate the relative weight people place on privacy in different situations.

The idea is “to let people themselves come up with their own ideas and assess the benefits and privacy concerns of their peers’ ideas, in a participatory way,” Ratti explains.

The game strongly suggests that people’s ideas about data privacy are malleable, although the results do indicate some tendencies. The data privacy card whose use players valued most highly was personal mobility; given the opportunity in the game to keep it or exchange it, players retained it in their hands 43 percent of the time, an indicator of its value. That was followed, in order, by personal health data and utility use. (With apologies to pet owners, the type of data privacy card players held on to the least, about 10 percent of the time, involved animal health.)

However, the game distinctly suggests that the value of privacy is highly contingent on specific use cases. The game shows that people care about health data to a substantial extent but also value the use of environmental data in the workplace, for instance. And the players of Data Slots also seem less concerned about data privacy when use of data is combined with clear benefits. In combination, that suggests a deal to be cut: Using health data can help people understand the effects of the workplace on wellness.

“Even in terms of health data in work spaces, if they are used in an aggregated way to improve the workspace, for some people it’s worth combining personal health data with environmental data,” Mora says.

Mazzarello adds: “Now perhaps the company can make some interventions to improve overall health. It might be invasive, but you might get some benefits back.”

In the bigger picture, the researchers suggest, taking a more flexible, user-driven approach to understanding what people think about data privacy can help inform better data policy. Cities, the core focus of the Senseable City Lab, often face such scenarios. City governments can collect a lot of aggregate traffic data, for instance, but public input can help determine how anonymized such data should be. Understanding public opinion along with the benefits of data use can produce viable policies for local officials to pursue.

“The bottom line is that if cities disclose what they plan to do with data, and if they involve resident stakeholders to come up with their own ideas about what they could do, that would be beneficial to us,” Duarte says. “And in those scenarios, people’s privacy concerns start to decrease a lot.”