Automation has been around since ancient Greece. Its form changes, but the intent of having technology take over repetitive tasks has remained consistent, and a fundamental element for success has been the ability to image. The latest iteration is robots, and the problem with a majority of them in industrial automation is that they work in fixture-based environments that are specifically designed for them. That’s fine if nothing changes, but things inevitably do. What robots need, and currently lack, is the ability to adapt quickly, see objects precisely, and then place them in the correct orientation to enable operations like autonomous assembly and packaging.

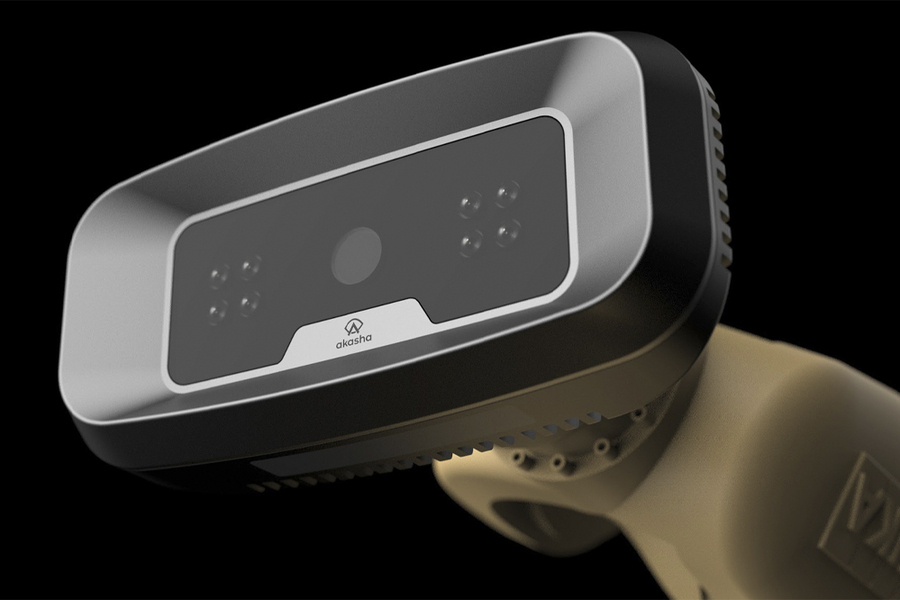

Akasha Imaging is trying to change that. The California startup with MIT roots uses passive imaging across varied modalities and spectra, combined with deep learning, to provide higher-resolution feature detection, tracking, and pose orientation more efficiently and cost-effectively. Robots are the main application and current focus. In the future, the technology could also be applied to packaging and navigation systems. Those applications are secondary, says Akasha CEO Kartik Venkataraman, but because little adaptation would be needed, they speak to the overall potential of what the company is developing. “That’s the exciting part of what this technology is capable of,” he says.

Out of the lab

Venkataraman founded the company in 2019 with MIT Associate Professor Ramesh Raskar and Achuta Kadambi PhD '18. Raskar is a faculty member in the MIT Media Lab, while Kadambi is a former Media Lab graduate student whose doctoral research would become the foundation for Akasha’s technology.

The partners saw an opportunity in industrial automation, which, in turn, helped name the company. Akasha means “the basis and essence of all things in the material world,” and it’s that limitlessness that inspires a new kind of imaging and deep learning, Venkataraman says. The technology specifically pertains to estimating objects’ orientation and localization. Traditional vision systems such as lidar and other laser-based systems project light of various wavelengths onto a surface and measure the time it takes for the light to strike the surface and return, which determines the surface’s location.

These methods have limitations. The farther away the target, the more power is required for illumination; the higher the resolution, the more light must be projected. Additionally, the precision with which the elapsed time is sensed depends on the speed of the electronic circuits, and there is a physics-based limitation around this. Company executives are continually forced to decide which matters most among resolution, cost, and power. “It’s always a trade-off,” he says.
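The timing limitation follows from the basic time-of-flight relation: light covers the round trip at a known speed c, so the one-way distance is d = c·t/2, and a timing uncertainty Δt translates into a depth uncertainty Δd = c·Δt/2. The short Python sketch below is an idealized illustration only, assuming a vacuum path and ignoring noise, pulse shape, and signal processing; the function names are hypothetical and do not come from Akasha or any lidar product.

```python
# Speed of light in a vacuum, in meters per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_s: float) -> float:
    """Hypothetical helper: one-way distance from a light pulse's round-trip time.

    The pulse travels out to the surface and back, so the distance to the
    surface is half of the total path the light covers.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def depth_resolution(timing_resolution_s: float) -> float:
    """Hypothetical helper: smallest depth difference resolvable at a given timing resolution.

    The same relation applies: a timing uncertainty dt maps to a depth
    uncertainty of c * dt / 2.
    """
    return distance_from_round_trip(timing_resolution_s)


if __name__ == "__main__":
    # A surface about 1.5 m away returns the light after roughly 10 nanoseconds.
    print(f"10 ns round trip -> {distance_from_round_trip(10e-9):.4f} m")
    # How depth resolution shrinks as the timing circuitry gets faster.
    for dt in (1e-9, 100e-12, 10e-12, 1e-12):
        print(f"timing {dt * 1e12:>6.0f} ps -> depth {depth_resolution(dt) * 1e3:.3f} mm")
```

Under these assumptions, 1 nanosecond of timing resolution corresponds to roughly 15 centimeters of depth, and reaching the sub-millimeter scale requires resolving intervals of about 6.7 picoseconds or less, which is why circuit speed becomes a hard constraint.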

And projected light itself presents challenges. With shiny plastic or metal objects, the light bounces off, and the reflectivity interferes with illumination and the accuracy of readings. With clear objects and clear packaging, the light passes through, and the system captures what’s behind the intended target. And with dark objects, there is little to no reflection, making detection difficult, let alone capturing any detail.

Putting it to use

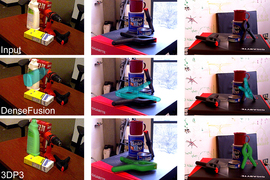

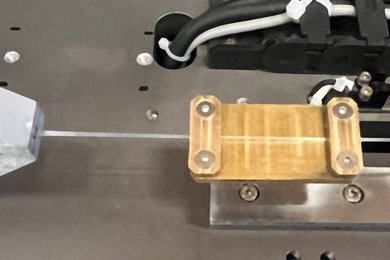

One of the company’s focuses is improving robotics. In warehouses today, robots assist in manufacturing, but many materials present the optical challenges described above. Objects can also be small; for example, a 5- to 6-millimeter-long spring may need to be picked up and threaded onto a 2-millimeter-wide shaft. Human operators can compensate for inaccuracies because they can touch things, but because robots lack tactile feedback, their vision has to be accurate. If it’s not, any slight deviation can result in a blockage that requires a person to intervene. In addition, if the imaging system isn’t reliable and accurate more than 90 percent of the time, a company is creating more problems than it’s solving and losing money, he says.

Another potential application is improving automotive navigation systems. Lidar, a current technology, can detect that there’s an object in the road, but it can’t necessarily tell what the object is, and that information is often useful, “in some cases vital,” Venkataraman says.

In both realms, Akasha’s technology provides more. On a road or highway, the system can pick up on the texture of a material and identify whether an oncoming object is a pothole, an animal, or a road work barrier. In the unstructured environment of a factory or warehouse, it can help a robot pick up that spring and put it onto the shaft, or move objects from one clear container into another. Ultimately, that means robots can be put to use in more settings.

With robots in assembly automation, one nagging obstacle has been that most have no vision system at all. They can find an object only because it’s fixed in place and they’re programmed where to go. “It works, but it’s very inflexible,” he says. When new products come in or a process changes, the fixtures have to change as well. That requires time, money, and human intervention, and it results in an overall loss in productivity.

Besides lacking the ability to see and understand, robots don’t have the innate hand-eye coordination that humans do. “They can’t figure out the disorderliness of the world on a day-to-day basis,” says Venkataraman, but, he adds, “with our technology I think it will begin to happen.”

As with most new companies, the next step is testing the technology’s robustness and reliability in real-world environments down to the “sub-millimeter level” of precision, he says. After that, the next five years should see an expansion into various industrial applications. It’s virtually impossible to predict which ones, but the universal benefits are easier to see. “In the long run, we’ll see this improved vision as being an enabler for improved intelligence and learning,” Venkataraman says. “In turn, it will then enable the automation of more complex tasks than has been possible up until now.”