Today, big multinational companies depend heavily on their information systems. If a company’s internal servers go down for even a few hours, it can mean millions of dollars in lost productivity. Information technology (IT) managers are thus reluctant to make changes that could have unforeseen consequences for network stability; as a result, they often end up saddled with obsolete software and inefficient network designs.

At the Institute of Electrical and Electronics Engineers’ (IEEE) Cluster conference from Sept. 26-30, researchers from MIT’s Department of Civil and Environmental Engineering (CEE) will unveil what may well be the most sophisticated computer model of a corporate information infrastructure yet built, which could help IT managers predict the effects of changes to their networks. In a study funded by Ford Motor Co., the researchers compared their model’s predictions to data supplied by Ford and found that, on average, its estimates of response times for queries sent to company servers were within 5 to 13 percent of the real times.
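To make the accuracy figure concrete, here is a minimal sketch of how a predicted response time might be scored against a measured one. The article does not say which error metric the researchers used, so mean relative error is an assumption here, and the function names and sample timings are hypothetical illustrations, not Ford data.

```python
def relative_error(predicted_s, measured_s):
    """Absolute gap between prediction and measurement, as a fraction of the measurement."""
    if measured_s <= 0:
        raise ValueError("measured response time must be positive")
    return abs(predicted_s - measured_s) / measured_s


def mean_relative_error(pairs):
    """Average relative error over (predicted, measured) pairs of response times."""
    errors = [relative_error(predicted, measured) for predicted, measured in pairs]
    return sum(errors) / len(errors)


# Hypothetical timings in seconds, invented for illustration only.
sample_pairs = [(1.1, 1.0), (1.8, 2.0)]
sample_error = mean_relative_error(sample_pairs)
```

Under this metric, a result "within 5 to 13 percent" would correspond to a mean relative error between 0.05 and 0.13.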

A server in a corporate data center can be a complex device, with 32, 64 or even 128 separate processors, all of which are connected to a bank of disk drives. Servers at a single data center are connected to each other, and the data center as a whole is connected to other data centers around the world.

Exhaustive detail

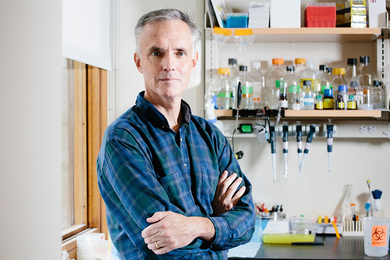

Researchers have previously modeled the performance of groups of servers within individual data centers, with good results. But the MIT team — Sergio Herrero-Lopez, a graduate student in CEE; John R. Williams, a professor of information engineering, civil and environmental engineering and engineering systems; and Abel Sanchez, a research scientist in the Laboratory for Manufacturing and Productivity — instead modeled every processor in every server, every connection between processors and disk drives, and every connection between servers and between data centers. They also modeled the way in which processing tasks are distributed across the network by software running on multiple servers.

“We take the software application and we break it into very basic operations, like logging in, saving files, searching, opening, filtering — basically, all the classic things that people do when they are searching for information,” Herrero-Lopez says.

Just one of those operations, Herrero-Lopez explains, could require dozens of communications between computers on the network. Opening a file, for instance, might require identifying the most up-to-date copies of particular pieces of data, which could be stored on different servers; requesting the data; and transferring it to the requester. The researchers’ model represents each of these transactions in terms of the computational resources it requires: “It takes this many cycles of the CPU, this bandwidth, and this memory,” Herrero-Lopez says.
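The idea of describing each transaction by the resources it consumes can be sketched in a few lines. This is a simplified illustration, not the researchers' code: the `Transaction` type, the `estimate_seconds` helper, the three steps of the file-open operation, and every number below are hypothetical, and the estimate ignores queueing and contention, which a full simulator would have to account for.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transaction:
    """One step of an operation, described only by the resources it needs."""
    name: str
    cpu_cycles: int
    bytes_transferred: int
    memory_bytes: int


def estimate_seconds(tx, cpu_hz, link_bytes_per_s):
    """Naive cost: compute time plus transfer time, with no contention modeled."""
    return tx.cpu_cycles / cpu_hz + tx.bytes_transferred / link_bytes_per_s


# Hypothetical breakdown of "opening a file" into the three steps the article names.
OPEN_FILE = [
    Transaction("locate_latest_copy", 4_000_000, 200_000, 1_000_000),
    Transaction("request_data", 2_000_000, 100_000, 500_000),
    Transaction("transfer_to_requester", 6_000_000, 50_000_000, 64_000_000),
]

# Assumed hardware: a 2 GHz processor and a link moving 100 MB per second.
total_seconds = sum(
    estimate_seconds(tx, cpu_hz=2_000_000_000, link_bytes_per_s=100_000_000)
    for tx in OPEN_FILE
)
peak_memory = max(tx.memory_bytes for tx in OPEN_FILE)
```

Because each step carries only resource figures, the same description can be replayed against different hardware by changing the `cpu_hz` and `link_bytes_per_s` arguments.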

For a large network, the model can become remarkably complex. When the researchers were benchmarking it against the data from Ford, “it took us two days to simulate one day” of Ford’s operations, Herrero-Lopez says. (Of course, they were running the model on a single computer, which was less powerful than the average server in a major corporate network.) But because the model can simulate the assignment of specific computational tasks to specific processors, it can provide much more accurate information about the effects of software upgrades or network reconfigurations than previous models could.

Trust, but verify

To be at all practical, even so complex a model has to make some simplifying assumptions. It doesn’t require any information about what an application running on the network does, but it does require information about the resources the application consumes. To provide that information, Ford engineers ran a series of experiments on servers at multiple data centers. They also supplied the MIT researchers with information about patterns of network use: “At 9 a.m., this many engineers are logging in, or are saving files, in this geographical location,” Herrero-Lopez explains.
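A usage pattern of the kind Herrero-Lopez describes could be captured as a simple table of who does what, where, and when. The sketch below is only one plausible encoding: the `WorkloadEntry` fields, the `demand_at` helper, the site names, and the user counts are all hypothetical and do not come from the Ford data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkloadEntry:
    """How many users perform one kind of operation at a given hour and site."""
    hour: int        # hour of day, 0 to 23
    location: str    # hypothetical site label
    operation: str   # e.g. "login" or "save_file"
    users: int


def demand_at(workload, hour, location):
    """Total users per operation for one hour at one location."""
    totals = {}
    for entry in workload:
        if entry.hour == hour and entry.location == location:
            totals[entry.operation] = totals.get(entry.operation, 0) + entry.users
    return totals


# Invented example entries, for illustration only.
workload = [
    WorkloadEntry(9, "site_a", "login", 400),
    WorkloadEntry(9, "site_a", "save_file", 150),
    WorkloadEntry(9, "site_b", "login", 90),
    WorkloadEntry(14, "site_a", "save_file", 220),
]
morning_demand = demand_at(workload, hour=9, location="site_a")
```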

The researchers’ model is modular: There’s a standard bit of code that describes each element of the network, and elements — a processor here, a high-bandwidth connection there — can be added or removed arbitrarily. So the model can quickly be adapted to different companies’ networks, and to different hypothetical configurations of those networks. “You can use the simulator for performance estimation and capacity planning, or to evaluate hardware or software configurations or bottlenecks — what happens if a link between two data centers is broken? — or for background processes or denial-of-service attacks,” Herrero-Lopez says. “It’s nonintrusive — you’re not touching the system — it’s modular, and it’s cheap.”
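The modular design, and the broken-link question in particular, can be illustrated with a toy network in which elements are added and removed freely. This is a minimal sketch under stated assumptions: the `Network` class, its method names, and the data-center labels are hypothetical, and it checks only whether two sites can still reach each other, not how response times change.

```python
from collections import deque


class Network:
    """Toy model: named elements joined by bidirectional links."""

    def __init__(self):
        self.links = {}  # element name -> set of directly connected elements

    def add_element(self, name):
        self.links.setdefault(name, set())

    def add_link(self, a, b):
        self.add_element(a)
        self.add_element(b)
        self.links[a].add(b)
        self.links[b].add(a)

    def remove_link(self, a, b):
        self.links[a].discard(b)
        self.links[b].discard(a)

    def reachable(self, src, dst):
        """Breadth-first search over the links that are still present."""
        seen = {src}
        queue = deque([src])
        while queue:
            node = queue.popleft()
            if node == dst:
                return True
            for neighbor in self.links[node]:
                if neighbor not in seen:
                    seen.add(neighbor)
                    queue.append(neighbor)
        return False


# Three hypothetical data centers connected in a chain.
net = Network()
net.add_link("dc_a", "dc_b")
net.add_link("dc_b", "dc_c")
before_break = net.reachable("dc_a", "dc_c")

net.remove_link("dc_b", "dc_c")   # simulate a broken link between two data centers
after_break = net.reachable("dc_a", "dc_c")

net.add_link("dc_a", "dc_c")      # try a hypothetical reconfiguration
after_reroute = net.reachable("dc_a", "dc_c")
```

Since the live system is never touched, a what-if like the one above costs only the time it takes to edit the description and rerun it.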
