Humans excel at recognizing faces, but how we do this has been an abiding mystery in neuroscience and psychology. In an effort to explain our success in this area, researchers are taking a closer look at how and why we fail.

A new study from MIT looks at a particularly striking instance of failure: our impaired ability to recognize faces in photographic negatives. The study, which appears in the Proceedings of the National Academy of Sciences this week, suggests that a large part of the answer might lie in the brain's reliance on a certain kind of image feature.

The work could lead to better computer vision systems for settings as diverse as industrial quality control and object and face detection. On a different front, the results and methodologies could help researchers probe face-perception skills in children with autism, who are often reported to have difficulty analyzing facial information.

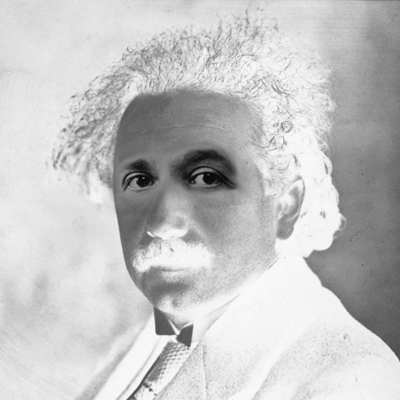

Anyone who remembers the days before digital photography has probably noticed that it's much harder to identify people in photographic negatives than in normal photographs. "You have not taken away any information, but somehow these faces are much harder to recognize," says Pawan Sinha, an associate professor of brain and cognitive sciences and senior author of the PNAS study.

Sinha has previously studied the light and dark relationships between different parts of the face and found that in nearly every normal lighting condition, a person's eyes appear darker than the forehead and cheeks. He theorized that photo negatives are hard to recognize because they disrupt these very strong regularities around the eyes.
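The "eyes darker than forehead and cheeks" regularity is an ordinal comparison of average luminance between face regions. The minimal Python sketch below illustrates it on a toy grayscale image. The function names, the 0 to 255 pixel scale, and the hand-picked region coordinates are illustrative assumptions, not the study's stimuli or analysis code. It checks whether the eye region is darker on average than both the forehead and the cheeks, then shows that a photographic negative reverses that ordering.

```python
from statistics import mean
from typing import List, Sequence, Tuple

# Hypothetical representation: a grayscale image as rows of 8-bit luminance values (0-255).
Image = List[List[int]]
Region = Sequence[Tuple[int, int]]  # (row, col) coordinates of the pixels in a face region


def region_mean(img: Image, region: Region) -> float:
    """Average luminance of the pixels listed in `region`."""
    return float(mean(img[r][c] for r, c in region))


def eyes_darker_than_surround(img: Image, eyes: Region, forehead: Region, cheeks: Region) -> bool:
    """True if the eye region is darker on average than both the forehead and the cheeks."""
    eye_level = region_mean(img, eyes)
    return eye_level < region_mean(img, forehead) and eye_level < region_mean(img, cheeks)


def negative(img: Image) -> Image:
    """Photographic negative: invert every pixel's luminance."""
    return [[255 - p for p in row] for row in img]


# Toy 3x3 "face": a forehead row, an eye row (dark eyes at the corners), and a cheek row.
face = [
    [200, 200, 200],
    [50, 180, 50],
    [170, 170, 170],
]
eyes = [(1, 0), (1, 2)]
forehead = [(0, 0), (0, 1), (0, 2)]
cheeks = [(2, 0), (2, 2)]

print(eyes_darker_than_surround(face, eyes, forehead, cheeks))            # True
print(eyes_darker_than_surround(negative(face), eyes, forehead, cheeks))  # False
```

In the negative, the eyes average 205 while the forehead drops to 55 and the cheeks to 85, so the eyes become the brightest region instead of the darkest, even though no pixel information has been discarded.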

To test this idea, Sinha and his colleagues asked subjects to identify photographs of famous people not only in positive and negative images but also in a third type of image, in which the celebrities' eyes were restored to their original levels of luminance while the rest of the photo remained in negative.
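The third image type, the "contrast chimera," can be sketched in a few lines of Python. This is a minimal illustration under assumed conventions: grayscale pixels on a 0 to 255 scale and a hand-drawn boolean mask marking the eye regions. `contrast_chimera` is a hypothetical helper; the article does not describe how the study's stimuli were actually produced.

```python
from typing import List

# Hypothetical representation: a grayscale image as rows of 8-bit luminance values (0-255),
# plus a same-shaped boolean mask that marks the eye regions.
Image = List[List[int]]
Mask = List[List[bool]]


def contrast_chimera(img: Image, eye_mask: Mask) -> Image:
    """Negate every pixel except those inside the eye mask, which keep their
    original (positive) luminance."""
    if len(img) != len(eye_mask) or any(len(r) != len(m) for r, m in zip(img, eye_mask)):
        raise ValueError("image and mask must have the same shape")
    return [
        [p if in_eye else 255 - p for p, in_eye in zip(row, mask_row)]
        for row, mask_row in zip(img, eye_mask)
    ]


# Toy 3x3 "face": a forehead row, an eye row (dark eyes at the corners), and a cheek row.
face = [
    [200, 200, 200],
    [50, 180, 50],
    [170, 170, 170],
]
eye_mask = [
    [False, False, False],
    [True, False, True],
    [False, False, False],
]
print(contrast_chimera(face, eye_mask))
# [[55, 55, 55], [50, 75, 50], [85, 85, 85]]
```

In the result, the eyes (50) are again darker than the forehead (55) and the cheeks (85), the same ordering as in the positive image, even though every other pixel has been inverted.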

Subjects had a much easier time recognizing these "contrast chimera" images. According to Sinha, that's because the light/dark relationships between the eyes and surrounding areas are the same as they would be in a normal image.

Similar contrast relationships can be found in other parts of the face, primarily the mouth, but those relationships are not as consistent. "The relationships around the eyes seem to be particularly significant," says Sinha.

Other studies have shown that people with autism tend to focus on the mouths of people they are looking at rather than on the eyes, so the new findings could help explain why people with autism often have difficulty recognizing faces, says Sinha.

The findings also suggest that neuronal responses in the brain may be based on these relationships between different parts of the face. The team found that when they scanned the brains of people performing the recognition task, regions associated with facial processing (the fusiform face areas) were far more active when looking at the contrast chimeras than when looking at pure negatives.

Other authors of the paper are Sharon Gilad of the Weizmann Institute of Science in Israel and MIT postdoctoral associate Ming Meng, both of whom contributed equally to the work.

The research was funded by the Alfred P. Sloan Foundation and the Jim and Marilyn Simons Foundation.

A version of this article appeared in MIT Tech Talk on March 18, 2009.