The TV soap opera you watch may soon be a home shopping program, thanks to researchers at the Media Laboratory. The lab recently produced a soap opera which lets viewers select clothing and furnishings with a special remote control, and see an item's price and purchase information on a pop-up screen display.

The program, called HyperSoap, offers an engaging form of interactive shopping and an alternative to the printed product catalogs that stores and manufacturers mail and distribute to customers.

Produced in association with Media Lab sponsor JCPenney, HyperSoap lets viewers interact with the program at the click of a button -- in much the same way that a person can click on words and pictures in a web document for links to additional content.

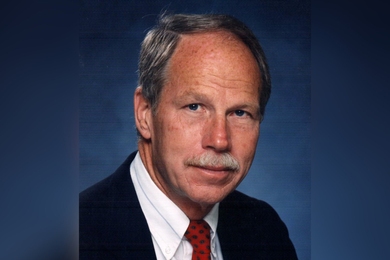

The technology behind the HyperSoap project enables a broad new range of applications well beyond product placement. "We believe it could be a way of creating entirely new forms of programming which engages the viewer in unprecedented ways," said Associate Professor V. Michael Bove Jr., head of the Media Lab's Object-Based Media group.

"For example, new kinds of documentaries and educational programs can be created, such as a nature program that lets children go on safari and collect specimens with their remote control. Other programs might provide instruction on everything from flying an airplane to surgery."

HyperSoap was created with authoring software developed at the Media Lab. The software allows a producer or graphic artist to quickly indicate desired regions or objects in a video sequence; the system then tracks those objects automatically and with high precision. This is a significant advance over existing technology, which either requires manually marking object locations in every video frame or tracks so coarsely that only one or two moving regions can be identified in any scene.
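As a rough illustration of the idea of marking a few regions and letting software label the rest, the sketch below assigns each pixel to the nearest average color of the author-marked samples. This is not the Media Lab's method: it uses color alone, ignores motion and texture, and every name in it (`train_centroids`, `classify_pixel`, `segment`) is hypothetical.

```python
def train_centroids(seeds):
    """Average the sample colors marked for each object.

    seeds maps an object label to a list of (r, g, b) samples
    that an author marked by hand (an assumption of this sketch).
    """
    centroids = {}
    for label, samples in seeds.items():
        n = len(samples)
        centroids[label] = tuple(sum(s[i] for s in samples) / n for i in range(3))
    return centroids


def classify_pixel(pixel, centroids):
    """Return the label whose average color is closest to the pixel."""
    def dist2(a, b):
        # Squared Euclidean distance in RGB space.
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return min(centroids, key=lambda label: dist2(pixel, centroids[label]))


def segment(frame, centroids):
    """Label every pixel of a frame given as rows of (r, g, b) tuples."""
    return [[classify_pixel(p, centroids) for p in row] for row in frame]
```

A real system would need richer features and frame-to-frame tracking; the point here is only that a handful of marked samples can label many unmarked pixels.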

By automating and improving the process of object recognition, the Media Lab's technology reduces the manual work of interactive content creation, while improving the quality. As a result, literally dozens of items -- ranging from an actress' blouse, skirt, necklace and earrings to the knickknacks on her desk -- are selectable during a program.
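One way to picture how a tracked region becomes selectable: if each frame carries a mask of object IDs, a viewer's selection reduces to a lookup at the chosen coordinates. The sketch below assumes such a mask exists; the `Product` record, the `CATALOG` contents, and the `select_at` function are illustrative placeholders, not details from the HyperSoap system.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Product:
    name: str
    info: str  # price and purchase details; placeholder text in this sketch


# Hypothetical catalog keyed by object ID; ID 0 means "background".
CATALOG = {
    1: Product("blouse", "placeholder purchase info"),
    2: Product("necklace", "placeholder purchase info"),
}


def select_at(mask, x, y, catalog=CATALOG) -> Optional[Product]:
    """Return the product under (x, y) in a per-frame mask of object IDs.

    mask is a list of rows, each a list of integer IDs. Returns None
    when the point is outside the frame or lands on the background.
    """
    if y < 0 or y >= len(mask) or x < 0 or x >= len(mask[y]):
        return None
    return catalog.get(mask[y][x])
```

For example, with `mask = [[0, 1, 1], [0, 2, 0]]`, calling `select_at(mask, 1, 1)` returns the necklace record, while `select_at(mask, 0, 0)` returns `None` because that pixel is background.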

"While it is easy for people to look at the world and instantly recognize objects -- a person, a table, an animal -- getting a computer to recognize objects is a tricky problem," said Professor Bove. "Our new software works by 'training' the computer to recognize what each object looks like in terms of the way it moves and its colors and textures."

In addition to designing the underlying video processing technology, the Media Lab team explored new video production methods, looking at how other aspects of TV production are affected when programs are created with hyperlinks.

"A traditional soap opera is created with very specific timing and flow, which does not lend well to hyperlinks and viewer interruptions," Professor Bove said. "In HyperSoap, scripting and directing were modified to allow and even encourage interaction with the products as part of the viewing experience." Dynamic graphic design experiments were also conducted to determine the best way of showing the additional product information.

"This innovative research breaks the boundaries of traditional advertising methods, and offers a whole new scope to marketing goods and services," said Michael Ponder of JCPenney's Internet commerce research division. "In addition, it adds an important new dimension to the ways in which people can learn and access information."

REACHING THE MARKET

The Object-Based Media group is also working with JCPenney to explore other programming venues. These could include a CD or digital video disc that customers could receive in the mail instead of a printed catalog and view on their personal computers. In the future, the ability to view hyperlinked video programs may be built into digital television sets, or set-top boxes for cable or satellite services.

In another application, department stores could have TV-equipped kiosks that would engage customers in a drama and allow them to view and select products of interest by simply touching the screen. The kiosk could also provide information about products, such as sale prices, or directions to the departments in which the items are displayed.

The Object-Based Media group seeks to change the way in which digital video and audio are produced and used. Instead of concentrating simply on data compression for more efficient transmission of signals, the group looks at ways in which the outputs of cameras and microphones can be subjected to an "understanding" process which results in a collection of audio and video "objects" and a "script" describing their behavior.

Examples of this process include automatically separating video images into people and background portions, extracting individual voices from mixed audio signals, and merging the observations of several ordinary cameras into a single three-dimensional model of a scene. These new methods enable both new creative directions for content producers and more responsive media forms for viewers.

Support for this research was provided by the Digital Life Consortium of the Media Lab.

A version of this article appeared in MIT Tech Talk on January 13, 1999.