Although it may sound like an April Fool's joke, MIT researchers are conducting serious studies in which people mouth odd sounds in response to a machine or have their tongues wired up with little coils. These studies may shed light on how hearing affects our ability to talk.

In the long run, this knowledge could help diagnose and treat people with communicative disorders, including stutterers, stroke victims and those suffering from dyslexia. It might also be used to help gain or lose an accent.

By studying speech movements and the resulting sounds in three groups of subjects -- people with normal hearing, people whose auditory perception of speech sounds is modified experimentally and hearing-impaired people who gain some hearing artificially -- MIT researchers are learning about the "internal model" that tells us how we want to sound.

"The main thing I have to offer is a new tool for understanding the role of auditory feedback in speech production," said John F. Houde, a postdoctoral fellow in otolaryngology at the University of California at San Francisco, who recently completed his PhD in brain and cognitive sciences at MIT and published a paper on this subject in the February 20 issue of Science.

Dr. Joseph S. Perkell, a senior research scientist in the Speech Communication Group of the Research Laboratory of Electronics, also studies the role hearing plays in speaking.

By examining the physical components of speech production and exploring changes in speech that result from changes in hearing, he is helping to unravel the enormously complex system involving brain and body that allows us to communicate through spoken language.

Dr. Perkell, who is also affiliated with the Department of Brain and Cognitive Sciences, says that the goals of speech movements -- aside from the primary goal of converting linguistic messages into intelligible signals -- vary depending on the situation.

"Variations are made possible partly because listeners can understand less-than-perfect speech," he said. The almost unconscious decisions we make about the clarity, volume and rate at which we speak depend on the noise level around us, the listener's familiarity with the language and whether we can see the listener's face, among other things.

Dr. Perkell is investigating the idea that, whenever possible, we opt for the laziest way to speak by choosing movements that will get our point across with the least effort. Speaking clearly may require more effort than letting words run together and dropping parts of words.

A COMPLICATED TRICK

Our ability to control individual muscles in our tongues, coupled with our uniquely right-angled vocal tract (part of the airway between the larynx and lips), may account for the wide range of speech sounds that humans alone can make.

Although talking may seem easy, it is anything but simple. Before a person even opens his mouth, decisions about how loudly or long he intends to speak determine how much air he uses. Then he expands his rib cage and begins forcing air from the lungs through the trachea and larynx. In the larynx, the air flow can cause the vocal folds to vibrate, making the voicing sounds of vowels. The air then passes into the vocal tract, where the tongue, jaw, soft palate and lips are moved around to create different speech sounds.

Sometimes the tongue or lips completely close off the vocal tract or create narrow constrictions for the production of silent intervals and certain kinds of noises that correspond to consonants like "t" and "s."

Dozens of muscles that move several very different physiological structures -- the lungs, larynx and vocal tract -- are controlled by the brain with lightning speed to produce a single "Hey!"

"These systems that evolved to serve different functions -- breathing, swallowing, chewing -- are used in an elegant way to make these sounds come out right," Dr. Perkell said. "Having engineering knowledge and skills to apply to understanding this process has been extremely helpful."

Approaching the problem with expertise in experimental psychology as well as engineering, Dr. Perkell studies aspects of the motor control system that makes speech possible. This task is so complex that only a handful of researchers in the country are tackling it.

"Speech may be the most complicated motor act any creature performs," said Dr. Perkell, who admitted he doesn't expect to see an explanation in his lifetime of how billions of neurons work together to accomplish this enormously complex task.

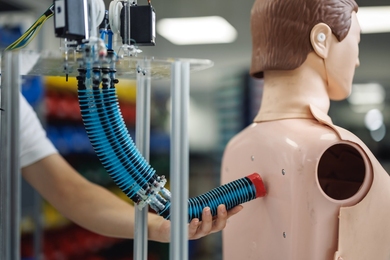

To help understand speech motor control, Dr. Perkell and his colleagues gather data with an Electro-Magnetic Midsagittal Articulometer (EMMA) system. The EMMA system developed at MIT uses small encased transducer coils that are glued to a subject's articulators -- the parts of the tongue, teeth and lips that help produce speech.

The data produced by the EMMA system when a subject is talking are analyzed on a computer and plotted on graphs to help researchers learn about the motor strategies that people use to produce intelligible sequences of speech sounds. About 20 such systems are used in laboratories around the world.

The data from these systems have made it clear that people can use different strategies to produce the same utterance. Even when the same person repeats the same thing a number of times, the sound is produced somewhat differently each time.

By studying such patterns of variation, researchers can begin to understand which parts of utterances are less variable and which parts are more variable. The parts that are less variable are likely to be most useful for transmitting the message reliably to the listener.

The research done by Dr. Perkell and his colleagues with the EMMA system shows that the resulting sounds may be somewhat less variable than the movements that produce them. Such findings point to the importance of the acoustic signal and the need to understand the role of hearing in controlling speech production.

HARD-WIRED FOR SPEECH?

Scientists agree that with the help of their ears, people establish and refine from infancy through puberty an "internal model" of how speech should sound. In effect, through learning, we become "hard-wired" for speech.

"Speech is really stable in the absence of feedback," Dr. Houde said. Dr. Houde and Dr. Perkell pointed out that adults who become deaf will retain intelligible speech for a couple of decades, but an individual who is born deaf has great difficulty learning to speak.

While the brain's parameters for controlling speech are very stable, they can be tinkered with, as Dr. Houde found in his research at MIT. He and his thesis adviser, Professor of Psychology Michael I. Jordan, wanted to find out how people adapt their speech to what they hear.

Dr. Houde built a sensorimotor adaptation (SA) apparatus that takes a sample of speech, figures out in real time its spectrum and formant pattern (the peaks in the frequency spectrum of human speech), alters the formant pattern of a vowel, resynthesizes the speech and sends it back to the subject with a virtually imperceptible delay.

If you try to say a word with one vowel sound -- "pep," for instance -- the SA apparatus makes it sound as though you just said a different vowel sound, like "peep."

"The easiest way to think about it is that you've suddenly been transported to a planet with a weird atmosphere that changes how your speech sounds," Dr. Houde said. This is all done in a whisper, because it's hard to block out a person's hearing of his own voice.

It turns out that "we end up doing whatever we have to do to make the correct sound come out of our mouths. When we hear our own words with an altered vowel sound, we automatically begin to 'correct' the way we say the vowel in the first place," he said.

In one version of the experiment, when subjects whispered "pep," the machine made them hear this as "peep." In response, the subjects altered their whispering until what they heard from the machine sounded once again like "pep," even though what they were actually whispering -- if they could hear it without the machine -- sounded more like "pop." If they heard altered feedback for long enough, they continued to say "pop" for "pep" even after the alteration was eliminated, as long as they couldn't hear themselves. Once they could hear themselves normally, they returned to normal speech.
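The compensation described above can be pictured as a simple error-correcting loop. The sketch below is only an illustration, not Dr. Houde's apparatus or analysis: it assumes a vowel can be stood in for by a single number, that the machine adds a constant shift to what the speaker hears, and that the speaker corrects a fixed fraction of the mismatch on each attempt. The function name, the learning rate and the numeric values are all hypothetical.

```python
def simulate_adaptation(target, shift, rate=0.2, trials=50):
    """Toy model of a speaker compensating for altered feedback.

    target: the sound the speaker intends to hear (arbitrary units).
    shift:  constant alteration the machine adds to the feedback (assumed).
    rate:   fraction of the heard error corrected per attempt (assumed).
    Returns the list of produced values, one per attempt.
    """
    produced = target  # the speaker starts out producing the intended sound
    history = []
    for _ in range(trials):
        heard = produced + shift   # what comes back through the machine
        error = heard - target     # mismatch with the internal model
        produced -= rate * error   # adjust production to cancel the mismatch
        history.append(produced)
    return history


if __name__ == "__main__":
    # Illustrative numbers only; they are not measurements from the study.
    history = simulate_adaptation(target=600.0, shift=-200.0)
    final = history[-1]
    print("produced:", round(final, 1), "heard:", round(final - 200.0, 1))
```

In this toy model the produced value drifts away from the target by the size of the shift, so that what the speaker hears settles back on the target, which mirrors subjects whispering something closer to "pop" in order to hear "pep."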

LINKING HEARING AND SPEECH

It was once thought that "hearing yourself has only an indirect influence on your speech -- a minor one about how to set some parameter about how to speak," Dr. Houde said. "It appears that the connection between hearing your own and others' speech and producing sounds is not that simple."

Dr. Perkell said that although the role of hearing in controlling speech may be complicated, "it is very unlikely that the motor control system uses auditory feedback moment-to-moment" to monitor speech production. "The neural processing times would probably be too long, and a significant portion of the movements for many vowels occurs during preceding consonant strings, when relatively little or no sound is being generated."

He and his colleagues have been studying the relation between speech and hearing in people who have been fitted with an auditory prosthesis called a cochlear implant, which uses sophisticated electronics to provide a form of artificial hearing. These patients learned to speak while they could hear and later lost their hearing. While their speech remains intelligible, it does not sound completely normal.

Dr. Perkell's group collaborates with surgeon Joseph Nadol and Donald Eddington (also an RLE principal research scientist) at the Massachusetts Eye and Ear Infirmary in studying changes that take place in the speech of cochlear implant patients. The changes indicate that in adults, hearing seems to have two roles: it monitors the acoustic environment, so that we speak louder in a noisy room; and it maintains our internal model to assure that we end up sounding the way we think we should.

Dr. Houde's work is supported by the National Institutes of Health. Dr. Perkell's work is funded by the NIH's National Institute on Deafness and Other Communication Disorders.

A version of this article appeared in MIT Tech Talk on April 1, 1998.