It can be a hassle to get to the doctor’s office. And the task can be especially challenging for parents of children with motor disorders such as cerebral palsy, as a clinician must evaluate the child in person on a regular basis, often for an hour at a time. Making it to these frequent evaluations can be expensive, time-consuming, and emotionally taxing.

MIT engineers hope to alleviate some of that stress with a new method that remotely evaluates patients’ motor function. By combining computer vision and machine-learning techniques, the method analyzes videos of patients in real time and computes a clinical score of motor function based on certain patterns of poses that it detects in video frames.

The researchers tested the method on videos of more than 1,000 children with cerebral palsy. They found that the method could process each video and assign a clinical score that agreed, with over 70 percent accuracy, with the score a clinician had previously assigned during an in-person visit.
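The article does not say exactly how agreement was measured. Assuming it is plain top-1 classification accuracy (the share of videos where the predicted GMFCS level equals the clinician's level), the arithmetic can be sketched as follows; the `accuracy` function and the ten labels are hypothetical illustrations, not study data.

```python
from typing import Sequence


def accuracy(predicted: Sequence[int], clinician: Sequence[int]) -> float:
    """Fraction of videos whose predicted GMFCS level equals the clinician's."""
    if len(predicted) != len(clinician) or len(predicted) == 0:
        raise ValueError("need two non-empty sequences of equal length")
    matches = sum(p == c for p, c in zip(predicted, clinician))
    return matches / len(predicted)


# Hypothetical labels for ten videos (GMFCS levels 1-5), NOT data from the study
clinician_levels = [1, 2, 2, 3, 1, 4, 2, 3, 1, 2]
model_levels = [1, 2, 3, 3, 1, 4, 2, 2, 1, 2]
print(accuracy(model_levels, clinician_levels))  # 0.8
```

Under this definition, "over 70 percent" would mean that the model assigned exactly the clinician's level in more than seven of every ten videos.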

The video analysis can be run on a range of mobile devices. The team envisions that patients can be evaluated on their progress simply by setting up their phone or tablet to take a video as they move about their own home. They could then load the video into a program that would quickly analyze the video frames and assign a clinical score, or level of progress. The video and the score could then be sent to a doctor for review.

The team is now tailoring the approach to evaluate children with metachromatic leukodystrophy, a rare genetic disorder that affects the central and peripheral nervous systems. They also hope to adapt the method to assess patients who have experienced a stroke.

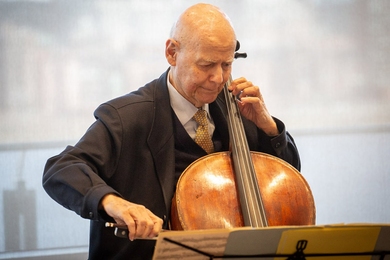

“We want to reduce a little of patients’ stress by not having to go to the hospital for every evaluation,” says Hermano Krebs, principal research scientist in MIT’s Department of Mechanical Engineering. “We think this technology could potentially be used to remotely evaluate any condition that affects motor behavior.”

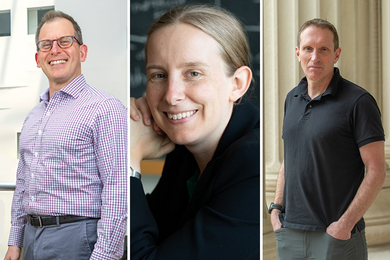

Krebs and his colleagues will present their new approach at the IEEE Conference on Body Sensor Networks in October. The study’s MIT authors are first author Peijun Zhao, co-principal investigator Moises Alencastre-Miranda, Zhan Shen, and Ciaran O’Neill, along with David Whiteman and Javier Gervas-Arruga of Takeda Development Center Americas, Inc.

Network training

At MIT, Krebs develops robotic systems that physically work with patients to help them regain or strengthen motor function. He has also adapted the systems to gauge patients’ progress and predict what therapies could work best for them. While these technologies have worked well, they are significantly limited in their accessibility: Patients have to travel to a hospital or facility where the robots are in place.

“We asked ourselves, how could we expand the good results we got with rehab robots to a ubiquitous device?” Krebs recalls. “As smartphones are everywhere, our goal was to take advantage of their capabilities to remotely assess people with motor disabilities, so that they could be evaluated anywhere.”

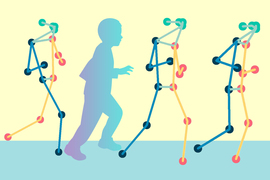

Image: Dataset created by Stanford Neuromuscular Biomechanics Laboratory in collaboration with Gillette Children’s Specialty Healthcare

The researchers looked first to computer vision and algorithms that estimate human movements. In recent years, scientists have developed pose estimation algorithms that are designed to take a video (for instance, of a girl kicking a soccer ball) and translate her movements into a corresponding series of skeleton poses in real time. The resulting sequence of lines and dots can be mapped to coordinates that scientists can further analyze.
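As a rough illustration of what "coordinates that scientists can further analyze" might look like, a pose sequence can be stored as one list of (x, y) keypoints per frame and then normalized so it no longer depends on where the person stands or how far away the camera is. This is a minimal sketch: the joint layout, the `normalize_sequence` helper, and the choice of mid-hip centering with torso-length scaling are illustrative assumptions, not the Stanford or MIT preprocessing.

```python
import math
from typing import List, Tuple

Joint = Tuple[float, float]   # (x, y) pixel coordinates of one keypoint
Frame = List[Joint]           # all keypoints detected in one video frame
PoseSequence = List[Frame]    # frames in playback order

# Hypothetical joint indices for illustration only; real pose estimators
# define their own keypoint order.
NECK, MID_HIP = 0, 1


def normalize_sequence(seq: PoseSequence) -> PoseSequence:
    """Center every frame on the mid-hip and divide by the neck-to-hip
    distance, removing the effect of position and camera distance."""
    out: PoseSequence = []
    for frame in seq:
        hx, hy = frame[MID_HIP]
        nx, ny = frame[NECK]
        torso = math.hypot(nx - hx, ny - hy) or 1.0  # guard against zero length
        out.append([((x - hx) / torso, (y - hy) / torso) for x, y in frame])
    return out


# Two frames of a toy three-joint skeleton: neck, mid-hip, knee
video = [
    [(100.0, 50.0), (100.0, 150.0), (110.0, 220.0)],
    [(104.0, 52.0), (104.0, 152.0), (120.0, 218.0)],
]
print(normalize_sequence(video)[0])  # [(0.0, -1.0), (0.0, 0.0), (0.1, 0.7)]
```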

Krebs and his colleagues aimed to develop a method to analyze skeleton pose data of patients with cerebral palsy, a disorder that has traditionally been evaluated using the Gross Motor Function Classification System (GMFCS), a five-level scale that represents a child’s general motor function. (The lower the number, the higher the child’s mobility.)

The team worked with a publicly available set of skeleton pose data produced by Stanford University’s Neuromuscular Biomechanics Laboratory. The data came from videos of more than 1,000 children with cerebral palsy. Each video showed a child performing a series of exercises in a clinical setting, and each was tagged with the GMFCS score that a clinician assigned the child after an in-person assessment. The Stanford group ran the videos through a pose estimation algorithm to generate skeleton pose data, which the MIT group then used as the starting point for their study.

The researchers then looked for ways to automatically decipher patterns in the cerebral palsy data that are characteristic of each clinical motor function level. They started with a Spatial-Temporal Graph Convolutional Neural Network, a machine-learning model that learns to process spatial data that change over time, such as a sequence of skeleton poses, and to assign the data a classification.
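The core idea of a spatial-temporal graph convolution can be sketched in plain Python. The skeleton is treated as a graph whose nodes are joints and whose edges are bones; a spatial step lets each joint mix features with its connected neighbors, and a temporal step combines each joint's features across adjacent frames. This is a deliberately simplified, single-partition layer using the symmetric normalization D^-1/2 (A + I) D^-1/2 and a fixed scalar temporal kernel shared across channels. The published ST-GCN architecture learns a full temporal convolution, partitions edges, adds batch normalization, and stacks many layers. All function names here are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def normalized_adjacency(edges: Sequence[Tuple[int, int]], num_joints: int) -> Matrix:
    """D^-1/2 (A + I) D^-1/2 for a skeleton graph, self-loops included."""
    a = [[1.0 if i == j else 0.0 for j in range(num_joints)] for i in range(num_joints)]
    for i, j in edges:
        a[i][j] = a[j][i] = 1.0
    deg = [sum(row) for row in a]
    return [[a[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(num_joints)]
            for i in range(num_joints)]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def st_gcn_layer(seq: List[Matrix], a_hat: Matrix, w: Matrix,
                 temporal_kernel: Sequence[float]) -> List[Matrix]:
    """seq holds one (joints x features) matrix per frame.

    Spatial step: A_hat @ X @ W mixes each joint's features with its neighbours.
    Temporal step: each joint's features are summed over nearby frames with a
    shared scalar kernel; zero padding keeps the number of frames unchanged.
    A ReLU is applied to the result.
    """
    spatial = [matmul(matmul(a_hat, frame), w) for frame in seq]
    num_joints, num_out = len(a_hat), len(w[0])
    half = len(temporal_kernel) // 2
    out = []
    for t in range(len(spatial)):
        acc = [[0.0] * num_out for _ in range(num_joints)]
        for k, coeff in enumerate(temporal_kernel):
            s = t + k - half
            if 0 <= s < len(spatial):
                for v in range(num_joints):
                    for c in range(num_out):
                        acc[v][c] += coeff * spatial[s][v][c]
        out.append([[max(0.0, x) for x in row] for row in acc])
    return out


# Toy example: a 3-joint chain (neck-hip-knee) with 2 input features (x, y)
a_hat = normalized_adjacency([(0, 1), (1, 2)], num_joints=3)
w = [[1.0, 0.0, 0.5], [0.0, 1.0, 0.5]]  # 2 -> 3 features; learned in practice
frames = [[[0.0, -1.0], [0.0, 0.0], [0.1, 0.7]] for _ in range(4)]
features = st_gcn_layer(frames, a_hat, w, temporal_kernel=[0.25, 0.5, 0.25])
print(len(features), len(features[0]), len(features[0][0]))  # 4 3 3
```

Stacking several such layers and pooling over joints and frames yields a fixed-length feature vector that a final classification layer can map to a motor function level.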

Before applying the neural network to cerebral palsy, the team used a model that had been pretrained on a more general dataset containing videos of healthy adults performing various daily activities, such as walking, running, sitting, and shaking hands. They took the backbone of this pretrained model and added a new classification layer specific to the clinical scores for cerebral palsy. They then fine-tuned the network to recognize distinctive patterns in the movements of children with cerebral palsy and to classify them accurately into the main clinical assessment levels.
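The transfer-learning step (keep a pretrained backbone, attach a new classification layer for the clinical scores, then train) can be sketched as follows. To stay short, this version freezes the backbone and trains only the new five-way head with softmax cross-entropy, which is a simpler variant than fine-tuning the whole network; the article does not say which layers were updated. `LinearHead`, `fine_tune_head`, and the identity backbone are hypothetical stand-ins.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

NUM_GMFCS_LEVELS = 5  # GMFCS levels I to V


def softmax(z: Sequence[float]) -> List[float]:
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    total = sum(e)
    return [v / total for v in e]


class LinearHead:
    """New classification layer mapping backbone features to GMFCS levels."""

    def __init__(self, in_dim: int, out_dim: int = NUM_GMFCS_LEVELS, seed: int = 0):
        rng = random.Random(seed)
        self.w = [[rng.gauss(0.0, 0.01) for _ in range(in_dim)] for _ in range(out_dim)]
        self.b = [0.0] * out_dim

    def logits(self, feats: Sequence[float]) -> List[float]:
        return [sum(wi * f for wi, f in zip(row, feats)) + b
                for row, b in zip(self.w, self.b)]

    def predict_level(self, feats: Sequence[float]) -> int:
        z = self.logits(feats)
        return z.index(max(z)) + 1  # classes are 0-based, GMFCS levels 1-based

    def sgd_step(self, feats: Sequence[float], label: int, lr: float) -> None:
        """One gradient step on softmax cross-entropy; label is 0-based."""
        p = softmax(self.logits(feats))
        for k in range(len(self.w)):
            g = p[k] - (1.0 if k == label else 0.0)  # dLoss/dlogit_k
            self.b[k] -= lr * g
            for i, f in enumerate(feats):
                self.w[k][i] -= lr * g * f


def fine_tune_head(backbone: Callable[[object], Sequence[float]], head: LinearHead,
                   data: Sequence[Tuple[object, int]], epochs: int, lr: float) -> None:
    """Train only the new head; the pretrained backbone stays frozen."""
    for _ in range(epochs):
        for sample, level in data:
            head.sgd_step(backbone(sample), level - 1, lr)


def identity_backbone(sample: Sequence[float]) -> Sequence[float]:
    # Stand-in for a pretrained feature extractor (hypothetical)
    return sample


head = LinearHead(in_dim=2)
data = [([1.0, 0.0], 1), ([0.0, 1.0], 3)]  # toy (features, GMFCS level) pairs
fine_tune_head(identity_backbone, head, data, epochs=200, lr=0.5)
print(head.predict_level([1.0, 0.0]), head.predict_level([0.0, 1.0]))  # 1 3
```

Reusing a backbone this way is what lets features learned from general human movement carry over, so the small clinical dataset only has to teach the final mapping to motor function levels.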

They found that the pretrained network learned to correctly classify children’s mobility levels, and that it did so more accurately than a network trained only on the cerebral palsy data.

“Because the network is trained on a very large dataset of more general movements, it has some ideas about how to extract features from a sequence of human poses,” Zhao explains. “While the larger dataset and the cerebral palsy dataset can be different, they share some common patterns of human actions and how those actions can be encoded.”

The team tested their method on a number of mobile devices, including various smartphones, tablets, and laptops, and found that most of them could run the program and generate a clinical score from videos in close to real time.
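"Close to real time" can be made concrete. A video recorded at 30 frames per second leaves about 33 milliseconds of processing per frame, so a device keeps up when it analyzes frames at least as fast as the video plays. The sketch below measures that ratio for any per-frame function. `realtime_factor` is a hypothetical helper, the 30 fps figure is an assumption, and the article does not report frame rates or per-device timings.

```python
import time
from typing import Any, Callable, Iterable


def realtime_factor(process_frame: Callable[[Any], Any], frames: Iterable[Any],
                    video_fps: float) -> float:
    """Ratio of video duration to processing time.

    A value >= 1.0 means frames are processed at least as fast as the video
    plays, i.e. the device keeps up in real time.
    """
    frames = list(frames)
    start = time.perf_counter()
    for frame in frames:
        process_frame(frame)
    elapsed = time.perf_counter() - start
    video_seconds = len(frames) / video_fps
    return video_seconds / elapsed if elapsed > 0 else float("inf")


# Per-frame budget at 30 fps: 1 / 30 s, roughly 33 ms
print(round(1000 / 30, 1))  # 33.3

# A stand-in "analysis" that takes at least 50 ms per frame falls behind 30 fps
slow = realtime_factor(lambda f: time.sleep(0.05), range(10), video_fps=30)
print(slow < 1.0)  # True
```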

The researchers are now developing an app, which they envision parents and patients could one day use to automatically analyze videos of patients, taken in the comfort of their own environment. The results could then be sent to a doctor for further evaluation. The team is also planning to adapt the method to evaluate other neurological disorders.

“This approach could be easily expandable to other disabilities such as stroke or Parkinson’s disease once it is tested in that population using appropriate metrics for adults,” says Alberto Esquenazi, chief medical officer at Moss Rehabilitation Hospital in Philadelphia, who was not involved in the study. “It could improve care and reduce the overall cost of health care and the need for families to lose productive work time, and it is my hope [that it could] increase compliance.”

“In the future, this might also help us predict how patients would respond to interventions sooner,” Krebs says. “Because we could evaluate them more often, to see if an intervention is having an impact.”

This research was supported by Takeda Development Center Americas, Inc.