The coronavirus pandemic has driven us apart physically while reminding us of the power of technology to connect. When MIT shut its doors in March, much of campus moved online, to virtual classes, labs, and chatrooms. Among those making the pivot were students engaged in independent research under MIT’s Undergraduate Research Opportunities Program (UROP).

With regular check-ins with their advisors via Slack and Zoom, many students succeeded in pushing through to the end. One even carried on his experiments from his bedroom, after schlepping his Sphero Bolt robots home in a backpack. “I’ve been so impressed by their resilience and dedication,” says Katherine Gallagher, one of three artificial intelligence engineers at MIT Quest for Intelligence who work with students each semester on intelligence-related applications. “There was that initial week of craziness and then they were right back to work.” Four projects from this spring are highlighted below.

Learning to explore the world with open eyes and ears

Robots rely heavily on images beamed through their built-in cameras, or surrogate “eyes,” to get around. MIT senior Alon Kosowsky-Sachs thinks they could do a lot more if they also used their microphone “ears.”

From his home in Sharon, Massachusetts, where he retreated after MIT closed in March, Kosowsky-Sachs is training four baseball-sized Sphero Bolt robots to roll around a homemade arena. His goal is to teach the robots to pair sights with sounds, and to exploit this information to build better representations of their environment. He’s working with Pulkit Agrawal, an assistant professor in MIT’s Department of Electrical Engineering and Computer Science, who is interested in designing algorithms with human-like curiosity.

While Kosowsky-Sachs sleeps, his robots putter away, gliding through an object-strewn rink he built for them from two-by-fours. Each burst of movement becomes a pair of one-second video and audio clips. By day, Kosowsky-Sachs trains a “curiosity” model aimed at pushing the robots to become bolder, and more skillful, at navigating their obstacle course.

“I want them to see something through their camera, and hear something from their microphone, and know that these two things happen together,” he says. “As humans, we combine a lot of sensory information to get added insight about the world. If we hear a thunder clap, we don’t need to see lightning to know that a storm has arrived. Our hypothesis is that robots with a better model of the world will be able to accomplish more difficult tasks.”

Training a robot agent to design a more efficient nuclear reactor

One important factor driving the cost of nuclear power is the layout of its reactor core. If fuel rods are arranged in an optimal fashion, reactions last longer, burn less fuel, and need less maintenance. As engineers look for ways to bring down the cost of nuclear energy, they are eying the redesign of the reactor core.

“Nuclear power emits very little carbon and is surprisingly safe compared to other energy sources, even solar or wind,” says third-year student Isaac Wolverton. “We wanted to see if we could use AI to make it more efficient.”

In a project with Josh Joseph, an AI engineer at the MIT Quest, and Koroush Shirvan, an assistant professor in MIT’s Department of Nuclear Science and Engineering, Wolverton spent the year training a reinforcement learning agent to find the best way to lay out fuel rods in a reactor core. To simulate the process, he turned the problem into a game, borrowing a machine learning technique for producing agents with superhuman abilities at chess and Go.

He started by training his agent on a simpler problem: arranging colored tiles on a grid so that as few tiles as possible of the same color would touch. As Wolverton increased the number of options, from two colors to five, and four tiles to 225, he grew excited as the agent continued to find the best strategy. “It gave us hope we could teach it to swap the cores into an optimal arrangement,” he says.
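The tile puzzle's objective can be made concrete with a short sketch. The Python below is illustrative only and is not Wolverton's code: the function names `count_same_color_contacts` and `swap_tiles`, the list-of-lists grid, and the choice to treat only edge-sharing tiles as "touching" are all assumptions. It counts same-color contacts, the quantity an agent would try to drive down, and shows how one swap changes that count.

```python
from typing import List, Tuple

Grid = List[List[int]]  # each cell holds a color index (hypothetical encoding)


def count_same_color_contacts(grid: Grid) -> int:
    """Count pairs of edge-adjacent tiles that share a color."""
    rows, cols = len(grid), len(grid[0])
    contacts = 0
    for r in range(rows):
        for c in range(cols):
            # Look only right and down so each pair is counted once.
            if c + 1 < cols and grid[r][c] == grid[r][c + 1]:
                contacts += 1
            if r + 1 < rows and grid[r][c] == grid[r + 1][c]:
                contacts += 1
    return contacts


def swap_tiles(grid: Grid, a: Tuple[int, int], b: Tuple[int, int]) -> Grid:
    """Return a copy of the grid with the tiles at positions a and b exchanged."""
    new_grid = [row[:] for row in grid]
    (ra, ca), (rb, cb) = a, b
    new_grid[ra][ca], new_grid[rb][cb] = new_grid[rb][cb], new_grid[ra][ca]
    return new_grid


# Four tiles, two colors: the smallest case mentioned above.
start = [[0, 0],
         [1, 1]]
better = swap_tiles(start, (0, 1), (1, 1))
```

In this toy case, a reinforcement learning agent would be rewarded for choosing swaps that lower the contact count; how the reward was actually defined in the project is not stated in the article.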

Eventually, Wolverton moved to an environment meant to simulate a 36-rod reactor core, with two enrichment levels and 2.1 million possible core configurations. With input from researchers in Shirvan’s lab, Wolverton trained an agent that arrived at the optimal solution.

The lab is now building on Wolverton’s code to try to train an agent in a life-sized 100-rod environment with 19 enrichment levels. “There’s no breakthrough at this point,” he says. “But we think it’s possible, if we can find enough compute resources.”

Making more livers available to patients who need them

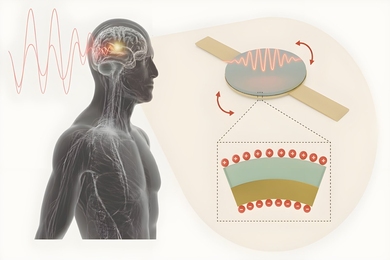

About 8,000 patients in the United States receive liver transplants each year, but that’s only half the number who need one. Many more livers might be made available if hospitals had a faster way to screen them, researchers say. In a collaboration with Massachusetts General Hospital, MIT Quest is evaluating whether automation could help to boost the nation’s supply of viable livers.

In approving a liver for transplant, pathologists estimate its fat content from a slice of tissue. If it’s low enough, the liver is deemed ready for transplant. But there are often not enough qualified doctors to review tissue samples on the tight timeline needed to match livers with recipients. A shortage of doctors, coupled with the subjective nature of analyzing tissue, means that viable livers are inevitably discarded.

This loss represents a huge opportunity for machine learning, says third-year student Kuan Wei Huang, who joined the project to explore AI applications in health care. The project involves training a deep neural network to pick out globules of fat on liver tissue slides to estimate the liver’s overall fat content.

One challenge, says Huang, has been figuring out how to handle differences in how pathologists classify fat globules. “This makes it harder to tell whether I’ve created the appropriate masks to feed into the neural net,” he says. “However, after meeting with experts in the field, I received clarifications and was able to continue working.”

Trained on images labeled by pathologists, the model will eventually learn to isolate fat globules in unlabeled images on its own. The final output will be a fat content estimate with pictures of highlighted fat globules showing how the model arrived at its final count. “That’s the easy part — we just count up the pixels in the highlighted globules as a percentage of the overall biopsy and we have our fat content estimate,” says the Quest’s Gallagher, who is leading the project.
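The pixel-counting step Gallagher describes can be sketched in a few lines of Python. This is a minimal illustration rather than the project's code: the function name `fat_content_percent` and the two boolean masks (one marking highlighted fat globules, one marking which pixels belong to the biopsy) are assumptions, since the article does not say how the biopsy area is delimited.

```python
from typing import List

Mask = List[List[bool]]  # True marks a pixel of interest (hypothetical encoding)


def fat_content_percent(fat_mask: Mask, tissue_mask: Mask) -> float:
    """Highlighted fat pixels as a percentage of all biopsy tissue pixels."""
    fat_pixels = 0
    tissue_pixels = 0
    for fat_row, tissue_row in zip(fat_mask, tissue_mask):
        for is_fat, is_tissue in zip(fat_row, tissue_row):
            if is_tissue:
                tissue_pixels += 1
                # Count fat only where the pixel belongs to the biopsy.
                if is_fat:
                    fat_pixels += 1
    if tissue_pixels == 0:
        raise ValueError("tissue mask is empty")
    return 100.0 * fat_pixels / tissue_pixels


# Toy 2x4 slide: every pixel is tissue, two pixels are highlighted as fat.
tissue = [[True, True, True, True],
          [True, True, True, True]]
fat = [[False, True, False, False],
       [False, False, True, False]]
estimate = fat_content_percent(fat, tissue)
```

Here two of eight tissue pixels are highlighted, so the estimate is 25 percent. The harder work, as the article notes, is producing the fat mask in the first place.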

Huang says he’s excited by the project’s potential to help people. “Using machine learning to address medical problems is one of the best ways that a computer scientist can impact the world.”

Exposing the hidden constraints of what we mean in what we say

Language shapes our understanding of the world in subtle ways, with slight variations in the words we use conveying sharply different meanings. The sentence, “Elephants live in Africa and Asia,” looks a lot like the sentence “Elephants eat twigs and leaves.” But most readers will conclude that the elephants in the first sentence are split into distinct groups living on separate continents, while not applying the same reasoning to the second sentence: eating twigs and eating leaves can both be true of the same elephant in a way that living on different continents cannot.

Karen Gu is a senior majoring in computer science and molecular biology, but instead of putting cells under a microscope for her SuperUROP project, she chose to look at sentences like the ones above. “I’m fascinated by the complex and subtle things that we do to constrain language understanding, almost all of it subconsciously,” she says.

Working with Roger Levy, a professor in MIT’s Department of Brain and Cognitive Sciences, and postdoc MH Tessler, Gu explored how prior knowledge guides our interpretation of syntax and, ultimately, meaning. In the sentences above, prior knowledge about geography and mutual exclusivity interacts with syntax to produce different meanings.

After steeping herself in linguistic theory, Gu built a model to explain how, word by word, a given sentence produces meaning. She then ran a set of online experiments to see how human subjects would interpret analogous sentences in a story. Her experiments, she says, largely validated intuitions from linguistic theory.

One challenge, she says, was having to reconcile two approaches for studying language. “I had to figure out how to combine formal linguistics, which applies an almost mathematical approach to understanding how words combine, and probabilistic semantics-pragmatics, which has focused more on how people interpret whole utterances.”

After MIT closed in March, she was able to finish the project from her parents’ home in East Hanover, New Jersey. “Regular meetings with my advisor have been really helpful in keeping me motivated and on track,” she says. She adds that she also improved her web-development skills, which will come in handy when she starts work at Benchling, a San Francisco-based software company, this summer.

Spring semester Quest UROP projects were funded, in part, by the MIT-IBM Watson AI Lab and Eric Schmidt, technical advisor to Alphabet Inc., and his wife, Wendy.