To demystify artificial intelligence (AI) and unlock its benefits, the MIT Quest for Intelligence created the Quest Bridge to bring new intelligence tools and ideas into classrooms, labs, and homes. This spring, more than a dozen Undergraduate Research Opportunities Program (UROP) students joined the project in its mission to make AI accessible to all. Undergraduates worked on applications designed to teach kids about AI, improve access to AI programs and infrastructure, and harness AI to improve literacy and mental health. Six projects are highlighted here.

Project Athena for cloud computing

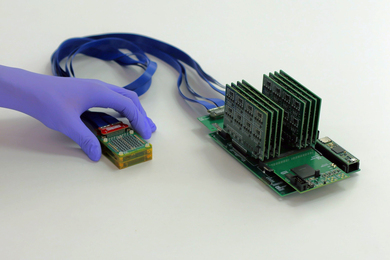

Training an AI model often requires remote servers to handle the heavy number-crunching, but getting projects to the cloud and back is no trivial matter. To simplify the process, an undergraduate club called the MIT Machine Intelligence Community (MIC) is building an interface modeled after MIT’s Project Athena, which brought desktop computing to campus in the 1980s.

Amanda Li stumbled on the MIC during orientation last fall. She was looking for computer power to train an AI language model she had built to identify the nationality of non-native English speakers. The club had a bank of cloud credits, she learned, but no practical system for giving them away. A plan to build such a system, tentatively named “Monkey,” quickly took shape.

The system would have to send a student’s training data and AI model to the cloud, put the project in a queue, train the model, and send the finished project back to MIT. It would also have to track individual usage to make sure cloud credits were evenly distributed.
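The queueing and usage-tracking requirements can be sketched in a few lines of Python. This is a minimal illustration only, not the students' actual design: the `Job` and `FairQueue` names, the per-job credit estimates, and the "serve whoever has used the fewest credits" rule are all assumptions made for the example.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass
class Job:
    user: str       # student who submitted the job
    name: str       # label for the training run
    credits: float  # assumed estimate of cloud credits the run will consume


class FairQueue:
    """Toy scheduler: the next job comes from the user with the lowest usage so far."""

    def __init__(self):
        self.pending = defaultdict(deque)  # user -> that user's jobs, oldest first
        self.usage = defaultdict(float)    # user -> credits charged so far

    def submit(self, job):
        self.pending[job.user].append(job)

    def next_job(self):
        # Only users who still have queued jobs are candidates.
        waiting = [user for user, jobs in self.pending.items() if jobs]
        if not waiting:
            return None
        # Pick the lowest-usage user; break ties alphabetically for determinism.
        user = min(waiting, key=lambda u: (self.usage[u], u))
        job = self.pending[user].popleft()
        self.usage[user] += job.credits  # charge the credits when the job is dispatched
        return job
```

In this sketch, a student who has already consumed many credits waits behind classmates who have used fewer, which is one simple way to keep the distribution even.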

This spring, Monkey became a UROP project, and Li and sophomore Sebastian Rodriguez continued to work on it under the guidance of the Quest Bridge. So far, the students have created four modules on GitHub that will eventually become the foundation for a distributed system.

“The coding isn’t the difficult part,” says Li. “It’s the exploring the server side of machine learning — Docker, Google Cloud, and the API. The most important thing I’ve learned is how to efficiently design and pipeline a project as big as this.”

A launch is expected sometime next year. “This is a huge project, with some timely problems that industry is also trying to address,” says Quest Bridge AI engineer Steven Shriver, who is supervising the project. “I have no doubt the students will figure it out: I’m here to help when they need it.”

An easy-to-use AI program for segmenting images

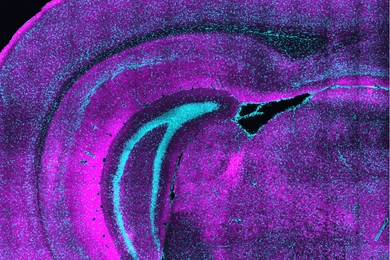

The ability to divide an image into its component parts underlies more complicated AI tasks like picking out proteins in pictures of microscopic cells, or stress fractures in shattered materials. Although fundamental, image segmentation programs are still hard for non-engineers to navigate. In a project with the Quest Bridge, first-year Marco Fleming helped to build a Jupyter notebook for image segmentation, part of the Quest Bridge’s broader mission to develop a set of AI building blocks that researchers can tailor for specific applications.

Fleming came to the project with self-taught coding skills, but no experience with machine learning, GitHub, or using a command-line interface. Working with Katherine Gallagher, an AI engineer with the Quest Bridge, and a more experienced classmate, Sule Kahraman, Fleming became fluent in convolutional neural networks, the workhorse for many machine vision tasks. “It’s kind of weird,” he explains. “You take a picture and do a lot of math to it, and the machine learns where the edges are.” Bound for an internship at Allstate this summer, Fleming says the project gave him a confidence boost.

His participation also benefitted the Quest Bridge, says Gallagher. “We’re developing these notebooks for people like Marco, a freshman with no machine learning experience. Seeing where Marco got tripped up was really valuable.”

An automated image classifier: no coding required

Anyone can build apps that impact the world. That’s the motto of the MIT AppInventor, a programming environment founded by Hal Abelson, the Class of 1922 Professor in MIT’s Department of Electrical Engineering and Computer Science. Working in Abelson’s lab over Independent Activity Period, sophomore Yuria Utsumi developed a web interface that lets anyone build a deep learning classifier to sort pictures of, say, happy faces and sad faces, or apples and oranges.

In four steps, the Image Classification Explorer lets users label and upload their images to the web, select a customizable model, add testing data, and see the results. Utsumi built the app with a pre-trained classifier that she restructured to learn from a set of new and unfamiliar images. Once users retrain the classifier on the new images, they can upload the model to AppInventor to view it on their smartphones.

In a recent test run of the Explorer app, students at Boston Latin Academy uploaded selfies shot on their laptop webcams and classified their facial expressions. For Utsumi, who picked the project hoping to gain practical web development and programming skills, it was a moment of triumph. “This is the first time I’m solving an algorithms problem in real life!” she says. “It was fun to see the students become more comfortable with machine learning,” she adds. “I’m excited to help expand the platform to teach more concepts.”

Introducing kids to machine-generated art

One of the hottest trends in AI is a new method for creating computer-generated art using generative adversarial networks, or GANs. A pair of neural networks work together to create a photorealistic image while letting the artist add their unique twist. One AI program called GANpaint, developed in the lab of MIT Quest for Intelligence Director Antonio Torralba, lets users add trees, clouds, and doors, among other features, to a set of pre-drawn images.

In a project with the Quest Bridge, sophomore Maya Nigrin is helping to adapt GANpaint to the popular coding platform for kids, Scratch. The work involves training a new GAN on pictures of castles and developing custom Scratch extensions to integrate GANpaint with Scratch. The students are also developing Jupyter notebooks to teach others how to think critically about GANs as the technology makes it easier to make and share doctored images.

A former babysitter and piano teacher who now tutors middle and high school students in computer science, Nigrin says she picked the project for its emphasis on K-12 education. Asked for the most important takeaway, she says: “If you can’t solve the problem, go around it.”

Learning to problem-solve is a key skill for any software engineer, says Gallagher, who supervised the project. “It can be challenging,” she says, “but that’s part of the fun. The students will hopefully come away with a realistic sense of what software development entails.”

A robot that lifts you up when you’re feeling blue

Anxiety and depression are on the rise as more of our time is spent staring at screens. But if technology is the problem, it might also be the answer, according to Cynthia Breazeal, an associate professor of media arts and sciences at the MIT Media Lab.

In a new project, Breazeal is rebooting her home robot Jibo as a personal wellness coach. (The MIT spinoff that commercialized Jibo closed last fall, but MIT has a license to use Jibo for applied research.) MIT junior Kika Arias spent the last semester helping to design interactions for Jibo to read and respond to people’s moods with personalized bits of advice. If Jibo senses you’re down, for example, it might suggest a “wellness” chat and some positive psychology exercises, like writing down something you feel grateful for.

Jibo the wellness coach will face its first test in a pilot study with MIT students this summer. To get it ready, Arias designed and assembled what she calls a “glorified robot chair,” a portable mount for Jibo and its suite of instruments: a camera, microphone, computer, and tablet. She has translated scripts written for Jibo by a human life coach into Jibo’s playful but laid-back voice. And she has made a widely used scale for self-reported emotions, which study participants will use to rate their mood, more engaging.

“I’m not a hardcore machine learning, cloud-computing type, but I’ve discovered I’m capable of a lot more than I thought,” she says. “I’ve always felt a strong desire to help people, so when I found this lab, I thought this is exactly where I’m supposed to be.”

A storytelling robot that helps kids learn to read

Kids who are read to aloud tend to pick up reading more easily, but not all parents know how to read themselves or have time to regularly read stories to their children. What if a home robot could fill in, or even promote higher-quality parent-child reading time?

In the first phase of a larger project, researchers in Breazeal’s lab are recording parents as they read aloud to their children, and are analyzing video, audio, and physiological data from the reading sessions. “These interactions play a big role in a child’s literacy later in life,” says first-year student Shreya Pandit, who worked on the project this semester. “There’s a sharing of emotion, and exchange of questions and answers during the telling of the story.”

These sidebar conversations are critical for learning, says Breazeal. Ideally, the robot is there to strengthen the parent-child bond and provide helpful prompts for both parent and child.

To understand how a robot can augment learning, Pandit has helped to develop parent surveys, run behavioral experiments, analyze data, and integrate multiple data streams. One surprise, she says, has been learning how much work is self-directed: She looks for a problem, researches solutions, and runs them by others in the lab before picking one — for example, an algorithm for splitting audio files based on who’s speaking, or a way of scoring the complexity of the stories being read aloud.
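As a rough illustration of what scoring a story's complexity could look like, the sketch below combines average sentence length with average word length. It is an assumption made for this example, not the measure used in Breazeal's lab; the `complexity_score` function and its formula are hypothetical.

```python
import re


def complexity_score(text):
    """Toy readability score: average words per sentence plus average letters per word."""
    # Split on runs of sentence-ending punctuation and drop empty fragments.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    # Treat runs of letters (and apostrophes) as words.
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        return 0.0
    avg_sentence_len = len(words) / len(sentences)
    avg_word_len = sum(len(w) for w in words) / len(words)
    return avg_sentence_len + avg_word_len
```

A story made of short sentences and short words scores low, while longer sentences and longer words push the score up. A real study would likely rely on a validated readability measure instead.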

“I try to set goals for myself and report something back after each session,” she says. “It’s cool to look at this data and try to figure out what it can tell us about improving literacy.”

These Quest for Intelligence UROP projects were funded by Eric Schmidt, technical adviser to Alphabet Inc., and his wife, Wendy.