For decades, researchers have envisioned a world where digital user interfaces are seamlessly integrated with the physical environment, until the two are virtually indistinguishable from one another.

This vision, though, is held back by a few obstacles. First, it’s difficult to integrate sensors and display elements into our tangible world due to various design constraints. Second, most methods for doing so are limited to small scales, bound by the size of the fabricating device.

Recently, a group of researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) developed SprayableTech, a system that lets users create room-sized interactive surfaces with sensors and displays. The system, which works by airbrushing functional inks, enables a range of applications, such as interactive sofas with embedded sensors that control your television, and sensors on your walls for adjusting lighting and temperature.

The Engineering and Physical Sciences Research Council partially funded this work.

SprayableTech lets users channel their inner Picassos: After a user designs their interactive artwork in the 3D editor, the system automatically generates stencils for airbrushing the layout onto a surface. Once they’ve cut the stencils from cardboard, the user can add sensors to the desired surface, whether it’s a sofa, a wall, or even a building, to control appliances like a lamp or television. (An alternative to cutting physical stencils is projecting them digitally.)

“Since SprayableTech is so flexible in its application, you can imagine using this type of system beyond walls and surfaces to power larger-scale entities like interactive smart cities and interactive architecture in public places,” says Michael Wessely, postdoc in CSAIL and lead author on a new paper about SprayableTech. “We view this as a tool that will allow humans to interact with and use their environment in newfound ways.”

The race for the smartest home has been under way for some time, with strong interest in sensor technology. It’s a big step beyond the enormous glass wall displays with quick-shifting images and screens we’ve seen in countless dystopian films.

The MIT researchers’ approach focuses on scale and creative expression. By using airbrush technology, they’re no longer limited by the size of the printer, the area of the screen-printing net, or the size of the hydrographic bath, and there are thousands of possible design options.

Let’s say a user wanted to design a tree symbol on their wall to control the ambient light in the room. To start the process, they would use a toolkit in a 3D editor to design their digital object and customize it with elements such as proximity sensors, touch buttons, sliders, and electroluminescent displays.

Then, the toolkit would output stencils in one of two forms: fabricated stencils cut from cardboard, which are great for high-precision spraying on simple, flat surfaces, or projected stencils, which are less precise but better for doubly curved surfaces.
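The trade-off between the two stencil types can be sketched as a simple decision rule. This is only an illustration of the choice described above, not the toolkit’s actual logic: the `Surface` class, its `doubly_curved` flag, and the `choose_stencil` function are hypothetical names, and the article gives no formal criterion for when a surface counts as doubly curved.

```python
from dataclasses import dataclass
from enum import Enum


class Stencil(Enum):
    FABRICATED = "fabricated"  # cut from cardboard: more precise, suits flat surfaces
    PROJECTED = "projected"    # projected digitally: less precise, suits curved surfaces


@dataclass
class Surface:
    name: str
    doubly_curved: bool  # hypothetical flag, set by the designer in this sketch


def choose_stencil(surface: Surface) -> Stencil:
    """Pick a stencil type using the precision-versus-curvature trade-off."""
    if surface.doubly_curved:
        return Stencil.PROJECTED
    return Stencil.FABRICATED


wall = Surface("living-room wall", doubly_curved=False)
armrest = Surface("sofa armrest", doubly_curved=True)
print(wall.name, "->", choose_stencil(wall).value)
print(armrest.name, "->", choose_stencil(armrest).value)
```

In practice a designer might weigh other factors as well, such as how much precision a given sensor layout needs.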

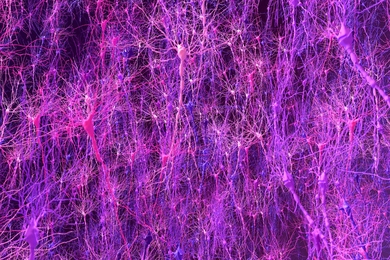

Designers can then use an airbrush to spray on the functional ink, which is ink containing electrically functional elements. As a final step to get the system running, a microcontroller is attached, connecting the interface to the board that runs the code for sensing and visual output.
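To give a rough sense of what sensing code for a sprayed touch button might involve, here is a minimal sketch. It assumes the board exposes one raw numeric reading per sprayed electrode and that the reading rises when a finger is present; the article does not describe the team’s firmware, so the `TouchDetector` class, the `calibrate` helper, the threshold values, and the sample readings are all made up for illustration.

```python
from dataclasses import dataclass


def calibrate(samples):
    """Estimate the untouched baseline as the mean of a few idle readings."""
    return sum(samples) / len(samples)


@dataclass
class TouchDetector:
    baseline: float             # reading with nothing touching the electrode
    press_delta: float = 30.0   # rise above baseline that counts as a press
    release_delta: float = 15.0 # rise at or below this counts as a release
    touched: bool = False

    def update(self, reading: float) -> bool:
        """Feed one raw reading and return the current touch state."""
        delta = reading - self.baseline
        if not self.touched and delta >= self.press_delta:
            self.touched = True
        elif self.touched and delta <= self.release_delta:
            self.touched = False
        return self.touched


idle = [100, 101, 99, 100]              # made-up idle readings
detector = TouchDetector(baseline=calibrate(idle))
stream = [101, 120, 135, 128, 118, 112, 100]  # made-up readings over time
states = [detector.update(r) for r in stream]
print(states)
```

Using separate press and release thresholds (hysteresis) keeps the button from flickering when a reading hovers near a single cutoff, which seems likely to matter for large hand-sprayed electrodes.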

The team tested the system on a variety of items, including:

- a musical interface on a concrete pillar;

- an interactive sofa that’s connected to a television;

- a wall display for controlling light; and

- a street post with a touchable display that provides audible information on subway stations and local attractions.

Because the stencils need to be created in advance in the digital editor, there is less opportunity for spontaneous exploration. Looking forward, the team wants to explore so-called “modular” stencils that create touch buttons of different sizes, as well as shape-changing stencils that adjust themselves to a desired user interface shape.

“In the future, we aim to collaborate with graffiti artists and architects to explore the future potential for large-scale user interfaces in enabling the internet of things for smart cities and interactive homes,” says Wessely.

Wessely wrote the paper alongside MIT PhD student Ticha Sethapakdi, MIT undergraduate students Carlos Castillo and Jackson C. Snowden, MIT postdoc Isabel P.S. Qamar, MIT Professor Stefanie Mueller, University of Bristol PhD student Ollie Hanton, University of Bristol Professor Mike Fraser, and University of Bristol Associate Professor Anne Roudaut.