A massive new survey developed by MIT researchers reveals some distinct global preferences concerning the ethics of autonomous vehicles, as well as some regional variations in those preferences.

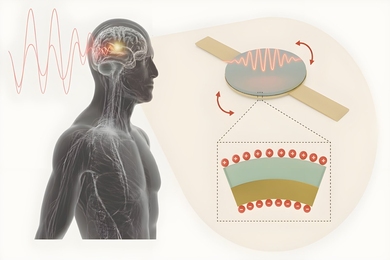

The survey has global reach and a unique scale, with over 2 million online participants from over 200 countries weighing in on versions of a classic ethical conundrum, the “Trolley Problem.” The problem involves scenarios in which an accident involving a vehicle is imminent, and the vehicle must opt for one of two potentially fatal options. In the case of driverless cars, that might mean swerving toward a couple of people, rather than a large group of bystanders.

“The study is basically trying to understand the kinds of moral decisions that driverless cars might have to resort to,” says Edmond Awad, a postdoc at the MIT Media Lab and lead author of a new paper outlining the results of the project. “We don’t know yet how they should do that.”

Still, Awad adds, “We found that there are three elements that people seem to approve of the most.”

Indeed, the most emphatic global preferences in the survey are for sparing the lives of humans over the lives of other animals; sparing the lives of many people rather than a few; and preserving the lives of the young, rather than older people.

“The main preferences were to some degree universally agreed upon,” Awad notes. “But the degree to which they agree with this or not varies among different groups or countries.” For instance, the researchers found a less pronounced tendency to favor younger people, rather than the elderly, in what they defined as an “eastern” cluster of countries, including many in Asia.

The paper, “The Moral Machine Experiment,” is being published today in Nature.

The authors are Awad; Sohan Dsouza, a doctoral student in the Media Lab; Richard Kim, a research assistant in the Media Lab; Jonathan Schulz, a postdoc at Harvard University; Joseph Henrich, a professor at Harvard; Azim Shariff, an associate professor at the University of British Columbia; Jean-François Bonnefon, a professor at the Toulouse School of Economics; and Iyad Rahwan, an associate professor of media arts and sciences at the Media Lab, and a faculty affiliate in the MIT Institute for Data, Systems, and Society.

Awad is a postdoc in the MIT Media Lab’s Scalable Cooperation group, which is led by Rahwan.

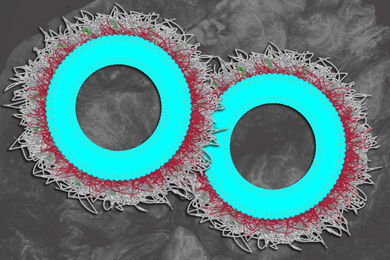

To conduct the survey, the researchers designed what they call “Moral Machine,” a multilingual online game in which participants could state their preferences concerning a series of dilemmas that autonomous vehicles might face. For instance: If it comes right down to it, should autonomous vehicles spare the lives of law-abiding bystanders, or, alternatively, law-breaking pedestrians who might be jaywalking? (Most people in the survey opted for the former.)

All told, “Moral Machine” compiled nearly 40 million individual decisions from respondents in 233 countries; the survey collected 100 or more responses from 130 countries. The researchers analyzed the data as a whole, while also breaking participants into subgroups defined by age, education, gender, income, and political and religious views. There were 491,921 respondents who offered demographic data.

The scholars did not find marked differences in moral preferences based on these demographic characteristics, but they did find larger “clusters” of moral preferences based on cultural and geographic affiliations. They defined “western,” “eastern,” and “southern” clusters of countries, and found some more pronounced variations along these lines. For instance: Respondents in southern countries had a relatively stronger tendency to favor sparing young people rather than the elderly, especially compared to the eastern cluster.

Awad suggests that acknowledgement of these types of preferences should be a basic part of informing public-sphere discussion of these issues. For instance, since there is a moderate preference in all regions for sparing law-abiding bystanders rather than jaywalkers, knowing these preferences could, in theory, inform the way software is written to control autonomous vehicles.

“The question is whether these differences in preferences will matter in terms of people’s adoption of the new technology when [vehicles] employ a specific rule,” he says.

Rahwan, for his part, notes that “public interest in the platform surpassed our wildest expectations,” allowing the researchers to conduct a survey that raised awareness about automation and ethics while also yielding specific public-opinion information.

“On the one hand, we wanted to provide a simple way for the public to engage in an important societal discussion,” Rahwan says. “On the other hand, we wanted to collect data to identify which factors people think are important for autonomous cars to use in resolving ethical tradeoffs.”

Beyond the results of the survey, Awad suggests, seeking public input about an issue of innovation and public safety should continue to become a larger part of the dialogue surrounding autonomous vehicles.

“What we have tried to do in this project, and what I would hope becomes more common, is to create public engagement in these sorts of decisions,” Awad says.