Most robots are programmed using one of two methods: learning from demonstration, in which they watch a task being done and then replicate it, or motion-planning techniques such as optimization or sampling, which require a programmer to explicitly specify a task’s goals and constraints.

Both methods have drawbacks. Robots that learn from demonstration can’t easily transfer a skill they’ve learned to a new situation and remain accurate. Motion-planning systems that use sampling or optimization, on the other hand, can adapt to such changes but are time-consuming to set up, since they usually have to be hand-coded by expert programmers.

Researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have recently developed a system that aims to bridge the two techniques: C-LEARN, which allows noncoders to teach robots a range of tasks simply by providing some information about how objects are typically manipulated and then showing the robot a single demo of the task.

Importantly, this enables users to teach robots skills that can be automatically transferred to other robots that have different ways of moving — a key time- and cost-saving measure for companies that want a range of robots to perform similar actions.

“By combining the intuitiveness of learning from demonstration with the precision of motion-planning algorithms, this approach can help robots do new types of tasks that they haven’t been able to learn before, like multistep assembly using both of their arms,” says Claudia Pérez-D’Arpino, a PhD student who wrote a paper on C-LEARN with MIT Professor Julie Shah.

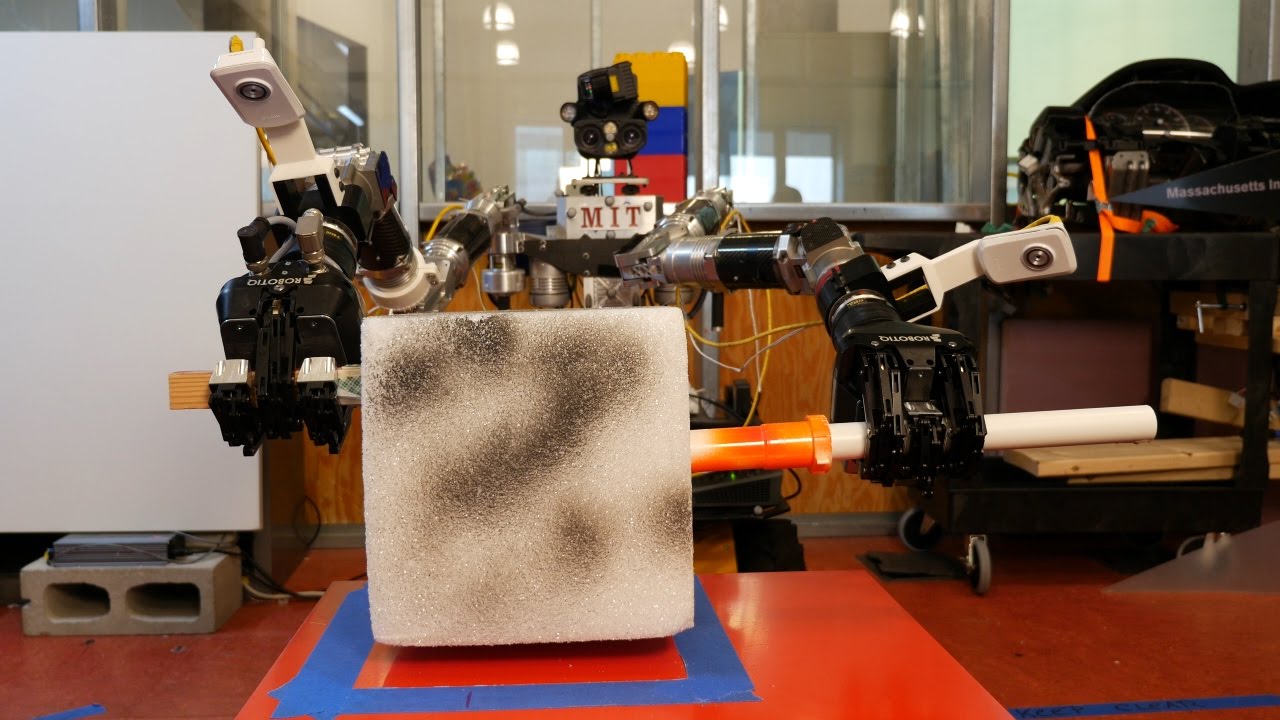

The team tested the system on Optimus, a new two-armed robot designed for bomb disposal that they programmed to perform tasks such as opening doors, transporting objects, and extracting objects from containers. In simulations they showed that Optimus’ learned skills could be seamlessly transferred to Atlas, CSAIL’s 6-foot-tall, 400-pound humanoid robot.

A paper describing C-LEARN was recently accepted to the IEEE International Conference on Robotics and Automation (ICRA), which takes place May 29 to June 3 in Singapore.

How it works

With C-LEARN the user first gives the robot a knowledge base of information on how to reach and grasp various objects that have different constraints. (The C in C-LEARN stands for “constraints.”) For example, a tire and a steering wheel have similar shapes, but to attach them to a car, the robot has to configure its arms differently to move them. The knowledge base contains the information needed for the robot to do that.

The operator then uses a 3-D interface to show the robot a single demonstration of the specific task, which is represented by a sequence of relevant moments known as “keyframes.” By matching these keyframes to the different situations in the knowledge base, the robot can automatically suggest motion plans for the operator to approve or edit as needed.
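
As a rough illustration of that matching step, the Python sketch below pairs each keyframe with a knowledge-base entry and flags the ones it cannot match so the operator can fill them in. It is not C-LEARN's actual implementation: the class names, the `suggest_plan` function, and the constraint labels are all hypothetical, and a plain dictionary lookup stands in for whatever matching the real system performs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KnowledgeEntry:
    """Hypothetical knowledge-base record: how to handle one kind of object."""
    object_type: str
    action: str          # e.g. "reach" or "grasp"
    constraints: tuple   # illustrative constraint labels, not C-LEARN's own


@dataclass(frozen=True)
class Keyframe:
    """Hypothetical keyframe: one relevant moment in the demonstration."""
    object_type: str
    action: str


def suggest_plan(keyframes, knowledge_base):
    """Pair each keyframe with the constraints of its matching entry.

    A keyframe with no matching entry is paired with None, which marks
    it as a step the operator needs to edit by hand.
    """
    index = {(e.object_type, e.action): e for e in knowledge_base}
    plan = []
    for kf in keyframes:
        entry = index.get((kf.object_type, kf.action))
        plan.append((kf, entry.constraints if entry else None))
    return plan


# Example data: similar shapes, different constraints (labels are made up).
KNOWLEDGE_BASE = [
    KnowledgeEntry("tire", "grasp", ("hands_parallel_to_axle",)),
    KnowledgeEntry("steering_wheel", "grasp", ("hands_perpendicular_to_column",)),
]

DEMONSTRATION = [
    Keyframe("tire", "grasp"),
    Keyframe("box", "grasp"),   # not in the knowledge base
]

if __name__ == "__main__":
    for keyframe, constraints in suggest_plan(DEMONSTRATION, KNOWLEDGE_BASE):
        status = constraints if constraints is not None else "needs operator input"
        print(keyframe.object_type, keyframe.action, status)
```

Returning `None` for an unmatched keyframe, rather than raising an error, mirrors the workflow described above, in which the robot proposes a plan and the operator approves or edits it.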

“This approach is actually very similar to how humans learn in terms of seeing how something’s done and connecting it to what we already know about the world,” says Pérez-D’Arpino. “We can’t magically learn from a single demonstration, so we take new information and match it to previous knowledge about our environment.”

One challenge was that existing constraints that could be learned from demonstrations weren’t accurate enough to enable robots to precisely manipulate objects. To overcome that, the researchers developed constraints inspired by computer-aided design (CAD) programs that can tell the robot if its hands should be parallel or perpendicular to the objects it is interacting with.
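
To make the idea of a parallel or perpendicular constraint concrete, here is a minimal Python sketch that tests the angle between two direction vectors, such as a hand axis and an object axis. It is an assumption-laden illustration rather than the researchers' formulation: the function names, the 3-D tuple representation, and the 5-degree tolerance are all invented for this example.

```python
import math


def _unit(v):
    """Return v scaled to length 1; reject the zero vector."""
    norm = math.sqrt(sum(c * c for c in v))
    if norm == 0:
        raise ValueError("direction vector must be non-zero")
    return tuple(c / norm for c in v)


def angle_between(a, b):
    """Angle between two direction vectors, in degrees (0 to 180)."""
    ua, ub = _unit(a), _unit(b)
    dot = sum(x * y for x, y in zip(ua, ub))
    dot = max(-1.0, min(1.0, dot))  # guard against rounding outside acos domain
    return math.degrees(math.acos(dot))


def is_parallel(a, b, tol_deg=5.0):
    """True if the axes line up, pointing the same or opposite way.

    The tolerance is an assumed value chosen for illustration.
    """
    angle = angle_between(a, b)
    return angle <= tol_deg or angle >= 180.0 - tol_deg


def is_perpendicular(a, b, tol_deg=5.0):
    """True if the axes are within tol_deg of a right angle."""
    return abs(angle_between(a, b) - 90.0) <= tol_deg
```

A planner could use checks like these to accept or reject candidate hand poses, for example requiring `is_parallel(hand_axis, object_axis)` before a grasp, where `hand_axis` and `object_axis` are hypothetical inputs from the robot's model of the scene.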

The team also showed that the robot performed even better when it collaborated with humans. While the robot successfully executed tasks 87.5 percent of the time on its own, it did so 100 percent of the time when it had an operator that could correct minor errors related to the robot’s occasional inaccurate sensor measurements.

“Having a knowledge base is fairly common, but what’s not common is integrating it with learning from demonstration,” says Dmitry Berenson, an assistant professor at the University of Michigan’s Electrical Engineering and Computer Science Department. “That’s very helpful, because if you are dealing with the same objects over and over again, you don’t want to then have to start from scratch to teach the robot every new task.”

Applications

The system is part of a larger wave of research focused on making learning-from-demonstration approaches more adaptive. If you’re a robot that has learned to take an object out of a tube from a demonstration, you might not be able to do it if there’s an obstacle in the way that requires you to move your arm differently. However, a robot trained with C-LEARN can do this, because it does not learn one specific way to perform the action.

“It’s good for the field that we’re moving away from directly imitating motion, toward actually trying to infer the principles behind the motion,” Berenson says. “By using these learned constraints in a motion planner, we can make systems that are far more flexible than those which just try to mimic what’s being demonstrated.”

Shah says that advanced learning-from-demonstration (LfD) methods could prove important in time-sensitive scenarios such as bomb disposal and disaster response, where robots are currently tele-operated at the level of individual joint movements.

“Something as simple as picking up a box could take 20-30 minutes, which is significant for an emergency situation,” says Pérez-D’Arpino.

C-LEARN can’t yet handle certain advanced tasks, such as avoiding collisions or planning for different step sequences for a given task. But the team is hopeful that incorporating more insights from human learning will give robots an even wider range of physical capabilities.

“Traditional programming of robots in real-world scenarios is difficult, tedious, and requires a lot of domain knowledge,” says Shah. “It would be much more effective if we could train them more like how we train people: by giving them some basic knowledge and a single demonstration. This is an exciting step toward teaching robots to perform complex multiarm and multistep tasks necessary for assembly manufacturing and ship or aircraft maintenance.”