Researchers at the MIT Media Lab have developed a new imaging device that consists of a loose bundle of optical fibers, with no need for lenses or a protective housing.

The fibers are connected to an array of photosensors at one end; the other ends can be left to wave free, so they could pass individually through micrometer-scale gaps in a porous membrane, to image whatever is on the other side.

Bundles of the fibers could be fed through pipes and immersed in fluids, to image oil fields, aquifers, or plumbing, without risking damage to watertight housings. And tight bundles of the fibers could yield endoscopes with narrower diameters, since they would require no additional electronics.

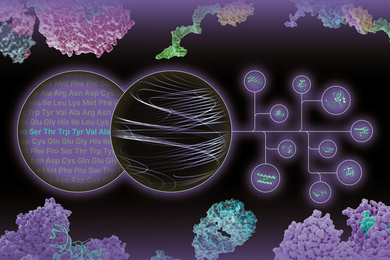

The positions of the fibers’ free ends don’t need to correspond to the positions of the photodetectors in the array. By measuring the differing times at which short bursts of light reach the photodetectors — a technique known as “time of flight” — the device can determine the fibers’ relative locations.
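As a rough illustration of the principle, the sketch below converts pulse arrival times into relative distances along the direction the pulse travels. It assumes a planar wavefront moving through air at roughly the speed of light and fibers of identical length, so any difference in arrival time comes only from where each tip sits. The function name `relative_offsets` is hypothetical and not taken from the researchers' software.

```python
# Speed of light in vacuum, in meters per second (close enough to air for a sketch).
C = 299_792_458.0


def relative_offsets(arrival_times_s):
    """Convert per-fiber pulse arrival times (seconds) into distances (meters).

    Assumes (hypothetically) that every fiber has the same length, so a later
    arrival means the tip sits farther along the pulse's direction of travel.
    Distances are measured relative to the tip the pulse reached first.
    """
    if not arrival_times_s:
        return []
    earliest = min(arrival_times_s)
    return [C * (t - earliest) for t in arrival_times_s]


# Three tips that the pulse reaches 0, 1, and 2 picoseconds apart.
offsets = relative_offsets([0.0, 1e-12, 2e-12])
print([f"{d * 1e6:.1f} um" for d in offsets])  # roughly 0, 300, and 600 micrometers
```

A picosecond of delay corresponds to about 0.3 millimeters of travel in air, which is why the measurement needs a camera with very fine timing resolution.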

In a commercial version of the device, the calibrating bursts of light would be delivered by the fibers themselves, but in experiments with their prototype system, the researchers used external lasers.

“Time of flight, which is a technique that is broadly used in our group, has never been used to do such things,” says Barmak Heshmat, a postdoc in the Camera Culture group at the Media Lab, who led the new work. “Previous works have used time of flight to extract depth information. But in this work, I was proposing to use time of flight to enable a new interface for imaging.”

The researchers reported their results today in Scientific Reports, a Nature Research journal. Heshmat is first author on the paper, and he’s joined by associate professor of media arts and sciences Ramesh Raskar, who leads the Media Lab’s Camera Culture group, and by Ik Hyun Lee, a fellow postdoc.

Travel time

In their experiments, the researchers used a bundle of 1,100 fibers that were waving free at one end and positioned opposite a screen on which symbols were projected. The other end of the bundle was attached to a beam splitter, which was in turn connected to both an ordinary camera and a high-speed camera that can distinguish optical pulses’ times of arrival.

At the bundle’s loose end, two ultrafast lasers were positioned perpendicular to the fiber tips and to each other. The lasers fired short bursts of light, and the high-speed camera recorded each burst’s time of arrival along each fiber.

Because the bursts of light came from two different directions, software could use the differences in arrival time to produce a two-dimensional map of the positions of the fibers’ tips. It then used that information to unscramble the jumbled image captured by the conventional camera.
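The article does not detail the reconstruction software, so the following is only a minimal sketch of the idea. It assumes planar wavefronts, fibers of equal length, and nearest-cell placement on a square grid; the names `fiber_positions` and `unscramble` are hypothetical. Each laser supplies one coordinate per fiber, and those coordinates decide where each fiber's brightness (as seen by the ordinary camera) belongs in the output image.

```python
def fiber_positions(times_x_s, times_y_s, c=299_792_458.0):
    """Map each fiber tip to an (x, y) position from two perpendicular pulses.

    times_x_s[i] is when the pulse traveling along x reached fiber i, and
    times_y_s[i] is the same for the pulse traveling along y. Assumes planar
    wavefronts and equal fiber lengths (hypothetical simplifications).
    """
    tx0, ty0 = min(times_x_s), min(times_y_s)
    return [(c * (tx - tx0), c * (ty - ty0)) for tx, ty in zip(times_x_s, times_y_s)]


def unscramble(positions, intensities, grid_size):
    """Place each fiber's intensity (from the ordinary camera) into a grid.

    Positions are scaled to grid cells; fibers landing in the same cell are
    averaged, and cells that no fiber reached are left as None.
    """
    max_x = max(p[0] for p in positions) or 1.0
    max_y = max(p[1] for p in positions) or 1.0
    sums = [[0.0] * grid_size for _ in range(grid_size)]
    counts = [[0] * grid_size for _ in range(grid_size)]
    for (x, y), value in zip(positions, intensities):
        col = round(x / max_x * (grid_size - 1))
        row = round(y / max_y * (grid_size - 1))
        sums[row][col] += value
        counts[row][col] += 1
    return [
        [sums[r][k] / counts[r][k] if counts[r][k] else None for k in range(grid_size)]
        for r in range(grid_size)
    ]


# Four fibers whose order at the camera is scrambled relative to the scene.
positions = fiber_positions([1e-12, 0.0, 1e-12, 0.0], [1e-12, 0.0, 0.0, 1e-12])
print(unscramble(positions, [4, 1, 2, 3], grid_size=2))  # [[1.0, 2.0], [3.0, 4.0]]
```

Snapping each tip to the nearest cell is the simplest possible choice; it also hints at why the real images are blurry, since timing noise can push a fiber into a neighboring cell.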

The resolution of the system is limited by the number of fibers; the 1,100-fiber prototype produces an image that’s roughly 33 by 33 pixels. Because there’s also some ambiguity in the image reconstruction process, the images produced in the researchers’ experiments were fairly blurry.

But the prototype sensor also used off-the-shelf optical fibers that were 300 micrometers in diameter. Fibers just a few micrometers in diameter have been commercially manufactured, so for industrial applications, the resolution could increase markedly without increasing the bundle size.
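The resolution figures follow from simple arithmetic: with one pixel per fiber, a square image has about the square root of the fiber count on each side, and the number of fibers that fit in a fixed cross-section grows with the square of the diameter ratio. The sketch below assumes packing efficiency stays the same, and the 3-micrometer diameter is a hypothetical stand-in for "a few micrometers."

```python
import math


def image_side(num_fibers):
    """Pixels per side of a square image with one pixel per fiber."""
    return math.isqrt(num_fibers)


def fibers_in_same_bundle(num_fibers, old_diameter_um, new_diameter_um):
    """How many fibers fit in the same cross-section if each fiber gets thinner.

    Fiber count scales with cross-sectional area, i.e. with the square of the
    diameter ratio; packing efficiency is assumed not to change.
    """
    return round(num_fibers * (old_diameter_um / new_diameter_um) ** 2)


print(image_side(1100))  # 33, matching the prototype's roughly 33-by-33 image
# Hypothetical 3-micrometer fibers in place of the 300-micrometer originals:
n = fibers_in_same_bundle(1100, 300, 3)
print(n, image_side(n))  # 11000000 3316
```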

In a commercial application, of course, the system wouldn’t have the luxury of two perpendicular lasers positioned at the fibers’ tips. Instead, bursts of light would be sent along individual fibers, and the system would gauge the time they took to reflect back. Many more pulses would be required to form an accurate picture of the fibers’ positions, but then, the pulses are so short that the calibration would still take just a fraction of a second.
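To see why many pulses could still fit in a fraction of a second, here is a back-of-the-envelope sketch. It models only the timing arithmetic, not how positions would be inferred from the echoes. The group index of 1.47 is a typical value for silica fiber, and the pulse count and 80 MHz repetition rate are hypothetical numbers chosen for illustration.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def round_trip_time_s(fiber_length_m, group_index=1.47):
    """Time for a pulse to travel down a fiber and reflect back to the sensor.

    group_index ~1.47 is a typical value for silica fiber (an assumption;
    real fibers vary with wavelength and design).
    """
    return 2.0 * fiber_length_m * group_index / C


def length_from_round_trip_m(round_trip_s, group_index=1.47):
    """Invert round_trip_time_s: estimate path length from a measured echo."""
    return round_trip_s * C / (2.0 * group_index)


def calibration_time_s(num_fibers, pulses_per_fiber, repetition_rate_hz):
    """Total time to fire the given number of pulses at a fixed repetition rate."""
    return num_fibers * pulses_per_fiber / repetition_rate_hz


t = round_trip_time_s(1.0)
print(f"{t * 1e9:.2f} ns")  # about 9.81 ns for a hypothetical 1 m fiber
print(length_from_round_trip_m(t))  # recovers approximately 1.0
# Hypothetical numbers: 1,100 fibers, 100 pulses each, an 80 MHz pulsed laser.
print(f"{calibration_time_s(1100, 100, 80e6) * 1e3:.3f} ms")  # 1.375 ms
```

Even with a hundred pulses per fiber, the total firing time in this example stays in the millisecond range.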

“Two is the minimum number of pulses you could use,” Heshmat says. “That was just proof of concept.”

Checking references

For medical applications, where the diameter of the bundle — and thus the number of fibers — needs to be low, the quality of the image could be improved through the use of so-called interferometric methods.

With such methods, an outgoing light signal is split in two, and half of it — the reference beam — is kept locally, while the other half — the sample beam — bounces off objects in the scene and returns. The two signals are then recombined, and the way in which they interfere with each other yields very detailed information about the sample beam’s trajectory. The researchers didn’t use this technique in their experiments, but they did perform a theoretical analysis showing that it should enable more accurate scene reconstructions.
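The sensitivity of interferometric methods comes from the textbook two-beam interference formula, sketched below for coherent, co-polarized beams. This is not the researchers' theoretical analysis; it only shows how the recombined intensity swings as the sample beam's extra path length changes by fractions of a wavelength. The 800 nm wavelength is a hypothetical choice.

```python
import math


def interference_intensity(i_ref, i_sample, path_difference_m, wavelength_m):
    """Intensity when a reference beam and a sample beam are recombined.

    Standard two-beam interference for coherent, co-polarized beams:
        I = I_r + I_s + 2 * sqrt(I_r * I_s) * cos(2 * pi * dL / lambda)
    so the output varies with the sample beam's extra path length dL.
    """
    phase = 2.0 * math.pi * path_difference_m / wavelength_m
    return i_ref + i_sample + 2.0 * math.sqrt(i_ref * i_sample) * math.cos(phase)


wavelength = 800e-9  # hypothetical 800 nm source
for dl in (0.0, 200e-9, 400e-9):
    value = interference_intensity(1.0, 1.0, dl, wavelength)
    print(f"dL = {dl * 1e9:.0f} nm -> I = {value:.3f}")  # 4.000, 2.000, 0.000
```

A path change of just 400 nanometers takes the output from its maximum to zero here, which is far finer than the roughly 0.3 millimeters per picosecond that direct timing resolves.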

“It is definitely interesting and very innovative to combine the knowledge we now have of time-of-flight measurements and computational imaging,” says Mona Jarrahi, an associate professor of electrical engineering at the University of California at Los Angeles. “And as the authors mention, they’re targeting the right problem, in the sense that a lot of applications for imaging have constraints in terms of environmental conditions or space.”

Relying on laser light piped down the fibers themselves “is harder than what they have shown in this experiment,” she cautions. “But the physical information is there. With the right arrangement, one can get it.”

“The primary advantage of this technology is that the end of the optical brush can change its form dynamically and flexibly,” adds Keisuke Goda, a professor of chemistry at the University of Tokyo. “I believe it can be useful for endoscopy of the small intestine, which is highly complex in structure.”