Computer scientists at MIT and the National University of Ireland (NUI) at Maynooth have developed a mapping algorithm that creates dense, highly detailed 3-D maps of indoor and outdoor environments in real time.

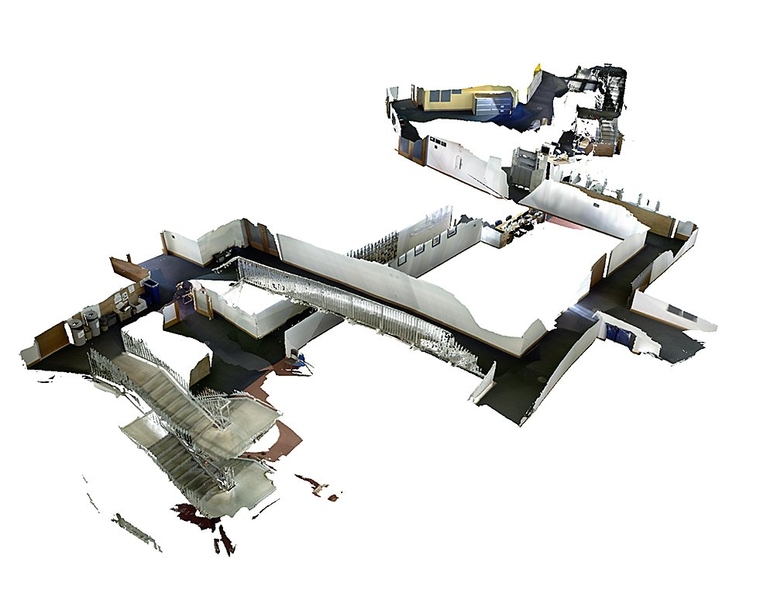

The researchers tested their algorithm on videos taken with a low-cost Kinect camera, including one that explores the serpentine halls and stairways of MIT’s Stata Center. Applying their mapping technique to these videos, the researchers created rich, three-dimensional maps as the camera explored its surroundings.

When the camera circled back to its starting point, the algorithm recognized the location as familiar and quickly stitched images together to effectively “close the loop,” creating a continuous, realistic 3-D map in real time.

The technique solves a major problem in the robotic mapping community that’s known as either “loop closure” or “drift”: As a camera pans across a room or travels down a corridor, it invariably introduces slight errors in the estimated path taken. A doorway may shift a bit to the right, or a wall may appear slightly taller than it is. Over relatively long distances, these errors can compound, resulting in a disjointed map, with walls and stairways that don’t exactly line up.
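To see how small per-step errors compound, consider a minimal sketch. It is not the researchers' method: it simply simulates a camera walking a square loop by dead reckoning, with a hypothetical constant heading error added at every step (`bias_deg` and the step length are assumed values chosen for illustration).

```python
import math

def dead_reckon(steps_per_side, step_len, bias_deg):
    """Integrate a square loop and return the estimated final (x, y).

    bias_deg is a hypothetical per-step heading error, in degrees.
    """
    x = y = 0.0
    heading = 0.0
    bias = math.radians(bias_deg)
    for _side in range(4):
        for _ in range(steps_per_side):
            heading += bias  # a small error creeps in at every step
            x += step_len * math.cos(heading)
            y += step_len * math.sin(heading)
        heading += math.pi / 2  # the intended 90-degree turn
    return x, y

def loop_gap(steps_per_side, step_len=0.1, bias_deg=0.01):
    """Distance between the true start and the estimated end of the loop."""
    x, y = dead_reckon(steps_per_side, step_len, bias_deg)
    return math.hypot(x, y)

short_loop = loop_gap(100)  # 10 m per side
long_loop = loop_gap(400)   # 40 m per side
```

With no bias the loop closes on itself; with the same tiny bias, the longer loop ends much farther from its starting point than the shorter one, which is the misalignment the article describes.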

In contrast, the new mapping technique determines how to connect a map by tracking a camera’s pose, its position and orientation in space, throughout its route. When a camera returns to a place where it’s already been, the algorithm determines which points within the 3-D map to adjust, based on the camera’s previous poses.

“Before the map has been corrected, it’s sort of all tangled up in itself,” says Thomas Whelan, a PhD student at NUI. “We use knowledge of where the camera’s been to untangle it. The technique we developed allows you to shift the map, so it warps and bends into place.”

The technique, he says, may be used to guide robots through potentially hazardous or unknown environments. Whelan’s colleague John Leonard, a professor of mechanical engineering at MIT, also envisions a more benign application.

“I have this dream of making a complete model of all of MIT,” says Leonard, who is also affiliated with MIT’s Computer Science and Artificial Intelligence Laboratory. “With this 3-D map, a potential applicant for the freshman class could sort of ‘swim’ through MIT like it’s a big aquarium. There’s still more work to do, but I think it’s doable.”

Leonard, Whelan and the other members of the team — Michael Kaess of MIT and John McDonald of NUI — will present their work at the 2013 International Conference on Intelligent Robots and Systems in Tokyo.

A visualization of the mapping process, producing dense maps at sub-centimeter resolution. Video: Thomas Whelan and John McDonald/NUI-Maynooth; Michael Kaess and John J. Leonard/MIT

The problem with a million points

The Kinect camera produces a color image, along with information on the spacing of every pixel in that image. A depth sensor in the camera translates the pixel spacing into a measurement of depth, recording the depth of every single pixel in an image. This data can be parsed by an application to generate a 3-D representation of the image.
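One common way an application can turn per-pixel depth into 3-D points is pinhole back-projection. The sketch below assumes that camera model. The function name is hypothetical, and the intrinsics (`FX`, `FY`, `CX`, `CY`) are placeholder values for a toy 3x3 image, not calibrated Kinect parameters.

```python
def backproject(depth, fx, fy, cx, cy):
    """Convert a depth image (rows of depths in meters) to 3-D points.

    Under the pinhole model, a pixel (u, v) with depth z maps to
    x = (u - cx) * z / fx and y = (v - cy) * z / fy.
    Assumption: a depth of 0 marks a pixel with no reading, so it is skipped.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points

# Illustrative intrinsics for a tiny 3x3 image (assumed values).
FX = FY = 2.0
CX = CY = 1.0
depth = [
    [2.0, 2.0, 2.0],
    [2.0, 0.0, 2.0],  # 0.0 marks a pixel with no depth reading
    [2.0, 2.0, 2.0],
]
cloud = backproject(depth, FX, FY, CX, CY)
```

Each valid pixel becomes one 3-D point, so a full-resolution depth frame yields a dense cloud of points per image.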

In 2011, a group from Imperial College London and Microsoft Research developed a 3-D mapping application called KinectFusion, which successfully produced 3-D models from Kinect data in real time. The technique generated very detailed models, at subcentimeter resolution, but was restricted to a fixed region in space.

Whelan, Leonard and their team expanded on that group’s work to develop a technique to create equally high-resolution 3-D maps, over hundreds of meters, in various environments and in real time. The goal, they note, was ambitious from a data perspective: An environment spanning hundreds of meters would consist of millions of 3-D points. To generate an accurate map, one would have to know which points among the millions to align. Previous groups have tackled this problem by running the data over and over — an impractical approach if you want to create maps in real time.

Mapping by slicing

Instead, Whelan and his colleagues came up with a much faster approach, which they describe in two stages: a front end and a back end.

In the front end, the researchers developed an algorithm to track a camera’s position at any given moment along its route. As the Kinect camera takes images at 30 frames per second, the algorithm measures how far and in what direction the camera has moved between consecutive frames. At the same time, the algorithm builds up a 3-D model consisting of small “cloud slices,” cross-sections of thousands of 3-D points in the immediate environment. Each cloud slice is linked to a particular camera pose.

As a camera moves down a corridor, cloud slices are integrated into a global 3-D map representing the larger, bird’s-eye perspective of the route thus far.
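The front-end bookkeeping can be sketched in a few lines. This is a simplified illustration, not the team's implementation: it uses 2-D poses `(x, y, theta)` instead of full 3-D poses, takes the frame-to-frame motions as given rather than estimating them from images, and all function names and data are hypothetical.

```python
import math

def compose(pose, delta):
    """Apply a frame-to-frame motion (dx, dy, dtheta), expressed in the
    camera's own frame, to a global pose (x, y, theta)."""
    x, y, th = pose
    dx, dy, dth = delta
    return (x + dx * math.cos(th) - dy * math.sin(th),
            y + dx * math.sin(th) + dy * math.cos(th),
            th + dth)

def to_global(pose, local_points):
    """Express a slice's camera-frame points in the global map frame."""
    x, y, th = pose
    c, s = math.cos(th), math.sin(th)
    return [(x + c * px - s * py, y + s * px + c * py)
            for px, py in local_points]

def track(deltas, slices):
    """Chain per-frame motions into poses and link each slice to its pose."""
    pose = (0.0, 0.0, 0.0)
    linked = []
    for delta, local_points in zip(deltas, slices):
        pose = compose(pose, delta)
        linked.append((pose, local_points))
    return linked

# Hypothetical data: move 1 m forward; then move 1 m more and turn 90 degrees left.
deltas = [(1.0, 0.0, 0.0), (1.0, 0.0, math.pi / 2)]
slices = [[(0.5, 0.0)], [(0.5, 0.0)]]  # one point 0.5 m ahead of the camera
linked = track(deltas, slices)
global_map = [p for pose, pts in linked for p in to_global(pose, pts)]
```

Because every slice stores its points relative to its own pose, the global map is just the slices re-expressed through their poses. Changing a pose later moves all of that slice's points at once.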

In the back end, the technique takes all the camera poses that have been tracked and lines them up in places that look familiar. The technique automatically adjusts the associated cloud slices, along with their thousands of points — a fast approach that avoids having to determine, point by point, which to move.
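The idea of correcting poses and letting the points follow can be illustrated with a deliberately simple stand-in. The sketch below spreads the end-of-loop position error linearly over the trajectory, which is an assumption made for brevity and not the optimization the researchers use. Poses are 2-D positions only, and the names and numbers are hypothetical.

```python
def close_loop(poses, target):
    """Spread the end-of-loop position error linearly over all poses.

    poses: list of (x, y) positions estimated by the front end (at least two).
    target: where the last pose should be, given that the place was
    recognized as already visited.
    """
    n = len(poses) - 1
    ex = target[0] - poses[-1][0]
    ey = target[1] - poses[-1][1]
    return [(x + ex * i / n, y + ey * i / n)
            for i, (x, y) in enumerate(poses)]

def rebuild_map(poses, slices):
    """Place each slice's camera-relative points at its (corrected) pose."""
    return [(px + x, py + y)
            for (x, y), pts in zip(poses, slices)
            for px, py in pts]

# Hypothetical drifted loop: it should end at (0, 0) but ends at (0.4, 0.2).
poses = [(0.0, 0.0), (1.0, 0.0), (1.1, 1.0), (0.2, 1.1), (0.4, 0.2)]
slices = [[(0.0, 0.5)] for _ in poses]  # one point per slice, for brevity
corrected = close_loop(poses, target=poses[0])
fixed_map = rebuild_map(corrected, slices)
```

The correction touches only one pose per slice. The points are never examined individually; they move because the pose they are attached to moved, which is why the first and last slices line up again after the loop is closed.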

The team has used its technique to create 3-D maps of MIT’s Stata Center, along with indoor and outdoor locations in London, Sydney, Germany and Ireland. In the future, the group envisions that the technique may be used to give robots much richer information about their surroundings. For example, a 3-D map would not only help a robot decide whether to turn left or right, but also present more detailed information.

“You can imagine a robot could look at one of these maps and say there’s a bin over here, or a fire extinguisher over here, and make more intelligent interpretations of the environment,” Whelan says. “It’s just a pick-up-and-go system, and we feel there’s a lot of potential for this kind of technique.”

Kostas Daniilidis, a professor of computer and information sciences at the University of Pennsylvania, sees the group’s technique as a useful method to guide robots in everyday tasks, as well as in building inspections.

“A system that minimizes drift in real time enables a lawnmower or a vacuum cleaner to come back to the same position without using any special markers, or a Mars rover to navigate efficiently,” says Daniilidis, who was not involved in the research. “It also makes the production of accurate 3-D models of the environment possible [for use] in architecture or infrastructure inspection. It would be interesting to see how the same methodology performs in challenging outdoor environments.”

This research received support from Science Foundation Ireland, the Irish Research Council and the Office of Naval Research.
