In recent years, robots have gained artificial vision, touch, and even smell. “Researchers have been giving robots human-like perception,” says MIT Associate Professor Fadel Adib. In a new paper, Adib’s team is pushing the technology a step further. “We’re trying to give robots superhuman perception,” he says.

The researchers have developed a robot that uses radio waves, which can pass through walls, to sense occluded objects. The robot, called RF-Grasp, combines this powerful sensing with more traditional computer vision to locate and grasp items that might otherwise be blocked from view. The advance could one day streamline e-commerce fulfillment in warehouses or help a machine pluck a screwdriver from a jumbled toolkit.

The research will be presented in May at the IEEE International Conference on Robotics and Automation. The paper’s lead author is Tara Boroushaki, a research assistant in the Signal Kinetics Group at the MIT Media Lab. Her MIT co-authors include Adib, who is the director of the Signal Kinetics Group; and Alberto Rodriguez, the Class of 1957 Associate Professor in the Department of Mechanical Engineering. Other co-authors include Junshan Leng, a research engineer at Harvard University, and Ian Clester, a PhD student at Georgia Tech.

As e-commerce continues to grow, warehouse work is still usually the domain of humans, not robots, despite sometimes-dangerous working conditions. That’s in part because robots struggle to locate and grasp objects in such a crowded environment. “Perception and picking are two roadblocks in the industry today,” says Rodriguez. Using optical vision alone, robots can’t perceive the presence of an item packed away in a box or hidden behind another object on the shelf — visible light waves, of course, don’t pass through walls.

But radio waves can.

For decades, radio frequency (RF) identification has been used to track everything from library books to pets. RF identification systems have two main components: a reader and a tag. The tag is a tiny computer chip that gets attached to — or, in the case of pets, implanted in — the item to be tracked. The reader then emits an RF signal, which gets modulated by the tag and reflected back to the reader.

The reflected signal provides information about the location and identity of the tagged item. The technology has gained popularity in retail supply chains — Japan aims to use RF tracking for nearly all retail purchases in a matter of years. The researchers realized this profusion of RF could be a boon for robots, giving them another mode of perception.

“RF is such a different sensing modality than vision,” says Rodriguez. “It would be a mistake not to explore what RF can do.”

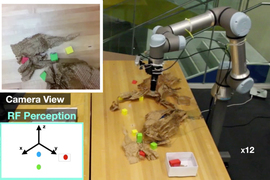

RF-Grasp uses both a camera and an RF reader to find and grab tagged objects, even when they’re fully blocked from the camera’s view. It consists of a robotic arm attached to a grasping hand. The camera sits on the robot’s wrist. The RF reader stands independent of the robot and relays tracking information to the robot’s control algorithm. As a result, the robot is constantly collecting both RF tracking data and a visual picture of its surroundings. Integrating these two data streams into the robot’s decision making was one of the biggest challenges the researchers faced.

“The robot has to decide, at each point in time, which of these streams is more important to think about,” says Boroushaki. “It’s not just eye-hand coordination, it’s RF-eye-hand coordination. So, the problem gets very complicated.”

The robot initiates the seek-and-pluck process by pinging the target object’s RF tag for a sense of its whereabouts. “It starts by using RF to focus the attention of vision,” says Adib. “Then you use vision to navigate fine maneuvers.” The sequence is akin to hearing a siren from behind, then turning to look and get a clearer picture of the siren’s source.

With its two complementary senses, RF-Grasp zeroes in on the target object. As it gets closer and even starts manipulating the item, vision, which provides much finer detail than RF, dominates the robot’s decision making.
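The handoff from RF to vision can be pictured with a small sketch. This is not the team’s published controller. It is a hypothetical illustration in which a vision weight grows smoothly as the gripper nears the RF-estimated target position. The function names, the 0.3-meter crossover distance, and the sharpness value are all assumptions made for the example.

```python
import math


def vision_weight(distance_m, crossover_m=0.3, sharpness=10.0):
    """Hypothetical weight in [0, 1] given to the vision estimate.

    Uses a logistic curve: far from the target the weight is near 0
    (RF dominates); close to the target it is near 1 (vision dominates).
    crossover_m and sharpness are illustrative values, not from the paper.
    """
    return 1.0 / (1.0 + math.exp(sharpness * (distance_m - crossover_m)))


def fuse_estimates(rf_xyz, vision_xyz, gripper_xyz):
    """Blend RF and vision estimates of the target position.

    vision_xyz may be None when the target is fully occluded, in which
    case the RF estimate is used on its own.
    """
    if vision_xyz is None:
        return tuple(rf_xyz)
    # Distance from the gripper to the RF-estimated target location.
    distance = math.dist(gripper_xyz, rf_xyz)
    w = vision_weight(distance)
    # Weighted average of the two estimates, coordinate by coordinate.
    return tuple(w * v + (1.0 - w) * r for v, r in zip(vision_xyz, rf_xyz))
```

In this toy version, a fully occluded target is located by RF alone, and the vision estimate takes over only once the camera can see the item and the gripper is within roughly the crossover distance.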

RF-Grasp proved its efficiency in a battery of tests. Compared to a similar robot equipped with only a camera, RF-Grasp was able to pinpoint and grab its target object with about half as much total movement. RF-Grasp also displayed the unique ability to “declutter” its environment, removing packing materials and other obstacles in its way to reach the target. Rodriguez says this demonstrates RF-Grasp’s “unfair advantage” over robots without penetrative RF sensing. “It has this guidance that other systems simply don’t have.”

RF-Grasp could one day perform fulfillment in packed e-commerce warehouses. Its RF sensing could even instantly verify an item’s identity without the need to manipulate the item, expose its barcode, then scan it. “RF has the potential to improve some of those limitations in industry, especially in perception and localization,” says Rodriguez.

Adib also envisions potential home applications for the robot, like locating the right Allen wrench to assemble your Ikea chair. “Or you could imagine the robot finding lost items. It’s like a super-Roomba that goes and retrieves my keys, wherever the heck I put them.”

The research is sponsored by the National Science Foundation, NTT DATA, Toppan, Toppan Forms, and the Abdul Latif Jameel Water and Food Systems Lab (J-WAFS).