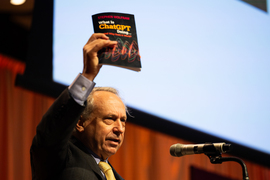

Speaking at the “Generative AI: Shaping the Future” symposium on Nov. 28, the kickoff event of MIT’s Generative AI Week, keynote speaker and iRobot co-founder Rodney Brooks warned attendees against uncritically overestimating the capabilities of this emerging technology, which underpins increasingly powerful tools like OpenAI’s ChatGPT and Google’s Bard.

“Hype leads to hubris, and hubris leads to conceit, and conceit leads to failure,” cautioned Brooks, who is also a professor emeritus at MIT, a former director of the Computer Science and Artificial Intelligence Laboratory (CSAIL), and founder of Robust.AI.

“No one technology has ever surpassed everything else,” he added.

The symposium, which drew hundreds of attendees from academia and industry to the Institute’s Kresge Auditorium, was laced with messages of hope about the opportunities generative AI offers for making the world a better place, including through art and creativity, interspersed with cautionary tales about what could go wrong if these AI tools are not developed responsibly.

Generative AI is a term that describes machine-learning models that learn to generate new material resembling the data they were trained on. These models have exhibited some incredible capabilities, such as the ability to produce human-like creative writing, translate languages, generate functional computer code, or craft realistic images from text prompts.

In her opening remarks to launch the symposium, MIT President Sally Kornbluth highlighted several projects faculty and students have undertaken to use generative AI to make a positive impact in the world. For example, the work of the Axim Collaborative, an online education initiative launched by MIT and Harvard, includes exploring the educational aspects of generative AI to help underserved students.

The Institute also recently announced seed grants for 27 interdisciplinary faculty research projects centered on how AI will transform people’s lives across society.

In hosting Generative AI Week, MIT hopes to not only showcase this type of innovation, but also generate “collaborative collisions” among attendees, Kornbluth said.

Collaboration involving academics, policymakers, and industry will be critical if we are to safely integrate a rapidly evolving technology like generative AI in ways that are humane and help humans solve problems, she told the audience.

“I honestly cannot think of a challenge more closely aligned with MIT’s mission. It is a profound responsibility, but I have every confidence that we can face it, if we face it head on and if we face it as a community,” she said.

While generative AI holds the potential to help solve some of the planet’s most pressing problems, the emergence of these powerful machine-learning models has blurred the distinction between science fiction and reality, said CSAIL Director Daniela Rus in her opening remarks. It is no longer a question of whether we can make machines that produce new content, she said, but how we can use these tools to enhance businesses and ensure sustainability.

“Today, we will discuss the possibility of a future where generative AI does not just exist as a technological marvel, but stands as a source of hope and a force for good,” said Rus, who is also the Andrew and Erna Viterbi Professor in the Department of Electrical Engineering and Computer Science.

But before the discussion dove deeply into the capabilities of generative AI, attendees were first asked to ponder their humanity, as MIT Professor Joshua Bennett read an original poem.

Bennett, a professor in the MIT Literature Section and Distinguished Chair of the Humanities, was asked to write a poem about what it means to be human, and drew inspiration from his daughter, who had been born three weeks earlier.

The poem told of his experiences as a boy watching Star Trek with his father and touched on the importance of passing traditions down to the next generation.

In his keynote remarks, Brooks set out to unpack some of the deep, scientific questions surrounding generative AI, as well as explore what the technology can tell us about ourselves.

To begin, he sought to dispel some of the mystery swirling around generative AI tools like ChatGPT by explaining the basics of how this large language model works. ChatGPT, for instance, generates text one word at a time by determining what the next word should be in the context of what it has already written. While a human might write a story by thinking about entire phrases, ChatGPT only focuses on the next word, Brooks explained.

ChatGPT 3.5 is built on a machine-learning model that has 175 billion parameters and has been exposed to billions of pages of text on the web during training. (The newest iteration, ChatGPT 4, is even larger.) It learns correlations between words in this massive corpus of text and uses this knowledge to propose what word might come next when given a prompt.
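The idea of proposing one word at a time from learned word correlations can be illustrated with a toy example. The Python sketch below is not how ChatGPT is implemented; it is a deliberately tiny stand-in that only counts which word follows which in a short, made-up corpus, then samples each next word from those counts. The function names, the corpus, and the fixed random seed are all assumptions made for illustration.

```python
import random
from collections import Counter, defaultdict


def train_bigram_counts(text):
    """Count, for each word, how often each other word directly follows it."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def generate(counts, start, max_words=10, seed=0):
    """Build a sentence one word at a time, looking only at the previous word."""
    rng = random.Random(seed)  # fixed seed so repeated runs give the same text
    output = [start]
    for _ in range(max_words - 1):
        followers = counts.get(output[-1])
        if not followers:
            break  # no word was ever seen after this one, so stop
        candidates = list(followers.keys())
        weights = list(followers.values())
        # Sample the next word in proportion to how often it followed the last one.
        output.append(rng.choices(candidates, weights=weights, k=1)[0])
    return " ".join(output)


# Hypothetical miniature "training corpus", invented for this example.
corpus = "the robot reads the poem and the robot writes the sonnet"
counts = train_bigram_counts(corpus)
print(generate(counts, "the"))
```

A large language model conditions on far more context than the single previous word and learns its statistics with billions of parameters rather than a lookup table, but the generation loop has the same shape: pick a next word, append it, repeat.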

The model has demonstrated some incredible capabilities, such as the ability to write a sonnet about robots in the style of Shakespeare’s famous Sonnet 18. During his talk, Brooks showcased the sonnet he asked ChatGPT to write side-by-side with his own sonnet.

But while researchers still don’t fully understand exactly how these models work, Brooks assured the audience that generative AI’s seemingly incredible capabilities are not magic, and that they do not mean these models can do anything.

His biggest fears about generative AI don’t revolve around models that could someday surpass human intelligence. Rather, he is most worried about researchers who may throw away decades of excellent work that was nearing a breakthrough, just to jump on shiny new advancements in generative AI; venture capital firms that blindly swarm toward technologies that can yield the highest margins; or the possibility that a whole generation of engineers will forget about other forms of software and AI.

At the end of the day, those who believe generative AI can solve the world’s problems and those who believe it will only generate new problems have at least one thing in common: Both groups tend to overestimate the technology, he said.

“What is the conceit with generative AI? The conceit is that it is somehow going to lead to artificial general intelligence. By itself, it is not,” Brooks said.

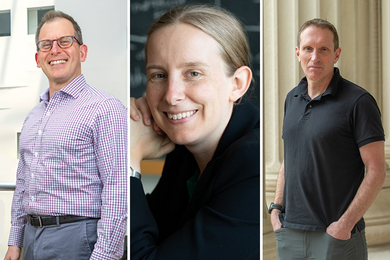

Following Brooks’ presentation, a group of MIT faculty spoke about their work using generative AI and participated in a panel discussion about future advances, important but underexplored research topics, and the challenges of AI regulation and policy.

The panel consisted of Jacob Andreas, an associate professor in the MIT Department of Electrical Engineering and Computer Science (EECS) and a member of CSAIL; Antonio Torralba, the Delta Electronics Professor of EECS and a member of CSAIL; Ev Fedorenko, an associate professor of brain and cognitive sciences and an investigator at the McGovern Institute for Brain Research at MIT; and Armando Solar-Lezama, a Distinguished Professor of Computing and associate director of CSAIL. It was moderated by William T. Freeman, the Thomas and Gerd Perkins Professor of EECS and a member of CSAIL.

The panelists discussed several potential future research directions around generative AI, including the possibility of integrating perceptual systems, drawing on human senses like touch and smell, rather than focusing primarily on language and images. The researchers also spoke about the importance of engaging with policymakers and the public to ensure generative AI tools are produced and deployed responsibly.

“One of the big risks with generative AI today is the risk of digital snake oil. There is a big risk of a lot of products going out that claim to do miraculous things but in the long run could be very harmful,” Solar-Lezama said.

The morning session concluded with an excerpt from the 1925 science fiction novel “Metropolis,” read by senior Joy Ma, a physics and theater arts major, followed by a roundtable discussion on the future of generative AI. The discussion included Joshua Tenenbaum, a professor in the Department of Brain and Cognitive Sciences and a member of CSAIL; Dina Katabi, the Thuan and Nicole Pham Professor in EECS and a principal investigator in CSAIL and the MIT Jameel Clinic; and Max Tegmark, professor of physics. It was moderated by Daniela Rus.

One focus of the discussion was the possibility of developing generative AI models that can go beyond what we can do as humans, such as tools that can sense someone’s emotions by using electromagnetic signals to understand how a person’s breathing and heart rate are changing.

But one key to integrating AI like this into the real world safely is to ensure that we can trust it, Tegmark said. If we know an AI tool will meet the specifications we insist on, then “we no longer have to be afraid of building really powerful systems that go out and do things for us in the world,” he said.