Light lets us see the things that surround us, but what if we could also use it to see things hidden around corners?

It sounds like science fiction, but that’s the idea behind a new algorithm out of MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) — and its discovery has implications for everything from emergency response to self-driving cars.

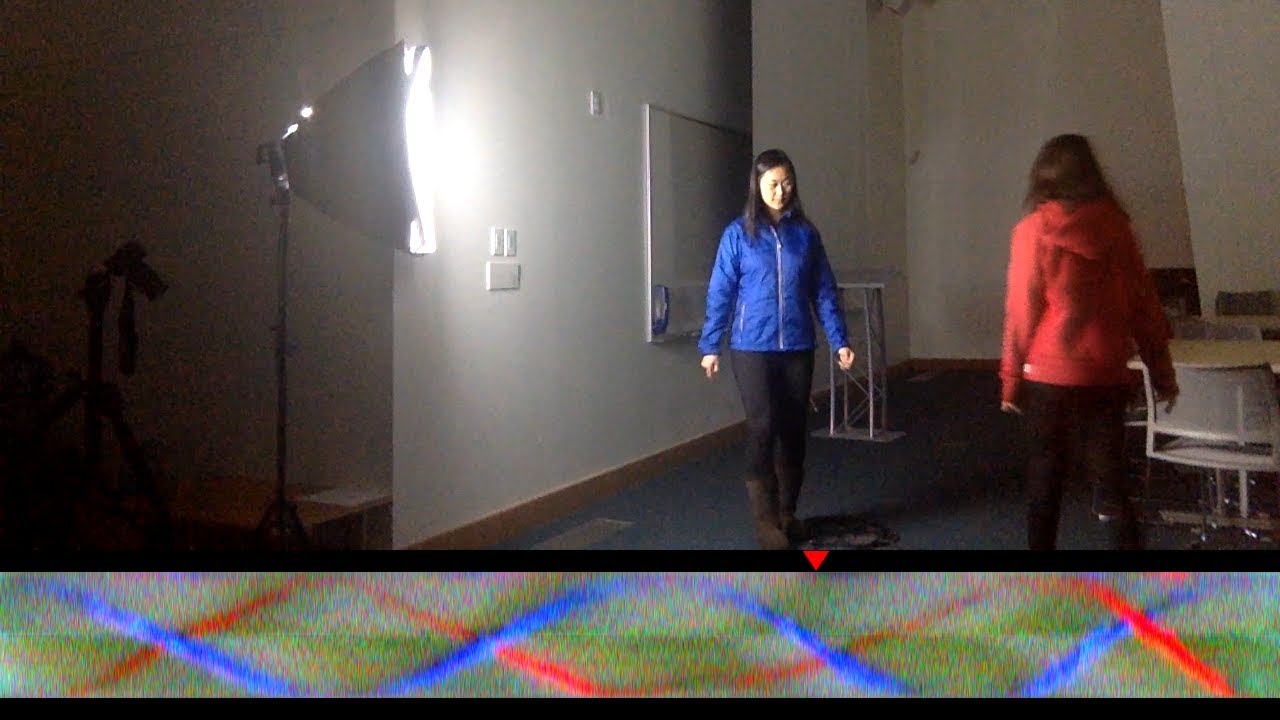

The CSAIL team’s imaging system, which can work with smartphone cameras, uses information about light reflections to detect objects or people in a hidden scene and measure their speed and trajectory — all in real-time.

To explain, imagine that you’re walking down an L-shaped hallway and have a wall between you and some objects around the corner. Those objects reflect a small amount of light on the ground in your line of sight, creating a fuzzy shadow that is referred to as the “penumbra.”

Using video of the penumbra, the system — which the team has dubbed “CornerCameras” — can stitch together a series of one-dimensional images that reveal information about the objects around the corner.

“Even though those objects aren’t actually visible to the camera, we can look at how their movements affect the penumbra to determine where they are and where they’re going,” says Katherine Bouman SM '13, PhD '17, who is lead author on a new paper about the system. “In this way, we show that walls and other obstructions with edges can be exploited as naturally-occurring ‘cameras’ that reveal the hidden scenes beyond them.”

Bouman says that the ability to see around obstructions would be useful for many tasks, from firefighters finding people in burning buildings to drivers detecting pedestrians in their blind spots.

She co-wrote the paper with MIT professors Bill Freeman, Antonio Torralba, Greg Wornell, and Fredo Durand; master’s student Vickie Ye; and PhD student Adam Yedidia. She will present the work later this month at the International Conference on Computer Vision in Venice, Italy.

How it works

Most approaches for seeing around obstacles involve special lasers. Researchers shine lasers at points that are visible from both the observable and the hidden scene, and then measure how long it takes for the light to return.

However, these so-called “time-of-flight cameras” are expensive and can easily get thrown off by ambient light, especially outdoors.

In contrast, the CSAIL team’s technique doesn’t require actively projecting light into the space, and works in a wider range of indoor and outdoor environments and with off-the-shelf consumer cameras.

By analyzing video of the penumbra, CornerCameras generates one-dimensional images of the hidden scene. A single image isn’t particularly useful, since it contains a fair amount of “noisy” data. But by observing the scene over several seconds and stitching together dozens of these images, the system can tell separate moving objects apart and determine their speed and trajectory.
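To make the idea concrete, here is a minimal sketch in Python of how a stack of one-dimensional images might be built from video of the floor. It is an illustration under stated assumptions, not the CSAIL team’s estimator: the function names `angular_profile` and `space_time_image`, the wedge count, and the radius limit are all hypothetical. The sketch assumes that each angular wedge of floor around the corner collects light from a slightly larger slice of the hidden scene than its neighbor, so the difference between neighboring wedges roughly isolates one slice.

```python
import math


def angular_profile(frame, corner, n_bins=16, max_radius=40.0):
    """Average brightness of floor pixels in angular wedges around the corner.

    frame is a 2-D list of floats; corner is the (row, col) of the wall edge.
    Only the quarter-plane below and to the right of the corner is used.
    """
    sums = [0.0] * n_bins
    counts = [0] * n_bins
    for r, row in enumerate(frame):
        for c, value in enumerate(row):
            dy, dx = r - corner[0], c - corner[1]
            radius = math.hypot(dx, dy)
            if dy < 0 or dx < 0 or radius <= 1.0 or radius > max_radius:
                continue
            angle = math.atan2(dy, dx)  # between 0 and pi/2 here
            b = min(int(angle / (math.pi / 2) * n_bins), n_bins - 1)
            sums[b] += value
            counts[b] += 1
    return [s / n if n else 0.0 for s, n in zip(sums, counts)]


def space_time_image(frames, corner, n_bins=16):
    """Return one 1-D signal per frame, stacked as rows (time by angle)."""
    n = len(frames)
    height, width = len(frames[0]), len(frames[0][0])
    # The temporal mean stands in for the static background
    # (floor texture and steady ambient light).
    background = [[sum(f[r][c] for f in frames) / n for c in range(width)]
                  for r in range(height)]
    image = []
    for f in frames:
        residual = [[f[r][c] - background[r][c] for c in range(width)]
                    for r in range(height)]
        profile = angular_profile(residual, corner, n_bins)
        # Assumption: neighboring wedges differ by the light from one
        # thin slice of the hidden scene, so take adjacent differences.
        image.append([b - a for a, b in zip(profile, profile[1:])])
    return image
```

Each row of the result is one noisy one-dimensional image. A moving object would show up as a streak that drifts across the angle axis from row to row, and the slope of that streak is what carries the speed and direction information.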

“The notion to even try to achieve this is innovative in and of itself, but getting it to work in practice shows both creativity and adeptness,” says Professor Marc Christensen, who serves as dean of the Lyle School of Engineering at Southern Methodist University and was not involved in the research. “This work is a significant step in the broader attempt to develop revolutionary imaging capabilities that are not limited to line-of-sight observation.”

The team was surprised to find that CornerCameras worked in a range of challenging situations, including weather conditions like rain.

“Given that the rain was literally changing the color of the ground, I figured that there was no way we’d be able to see subtle differences in light on the order of a tenth of a percent,” says Bouman. “But because the system integrates so much information across dozens of images, the effect of the raindrops averages out, and so you can see the movement of the objects even in the middle of all that activity.”
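The averaging effect Bouman describes can be illustrated with a small simulation. This is a sketch with made-up numbers, not a model of the actual system: it assumes a signal of a tenth of a percent, per-frame noise ten times larger, and noise that is independent and Gaussian from frame to frame, which real raindrops need not be. The helper names `averaged_reading` and `spread` are hypothetical.

```python
import random
import statistics


def averaged_reading(signal, noise_std, n_frames, rng):
    """Mean of n_frames noisy measurements of the same underlying signal."""
    total = sum(signal + rng.gauss(0.0, noise_std) for _ in range(n_frames))
    return total / n_frames


def spread(signal, noise_std, n_frames, trials=2000, seed=0):
    """Empirical standard deviation of the averaged reading over many trials."""
    rng = random.Random(seed)
    readings = [averaged_reading(signal, noise_std, n_frames, rng)
                for _ in range(trials)]
    return statistics.pstdev(readings)


# Illustrative values only: a 0.1% signal under noise ten times as large.
single = spread(signal=0.001, noise_std=0.01, n_frames=1)
stacked = spread(signal=0.001, noise_std=0.01, n_frames=64)
print(f"noise with 1 frame:   {single:.5f}")
print(f"noise with 64 frames: {stacked:.5f}")
```

Under these assumptions the noise in the averaged reading shrinks roughly with the square root of the number of frames, so 64 frames cut it by about a factor of eight, enough to bring it close to the size of the signal itself.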

The system still has some limitations. For obvious reasons, it doesn’t work if there’s no light in the scene, and it can have issues if there’s low light in the hidden scene itself. It can also be tripped up if lighting conditions change, for example when the scene is outdoors and clouds are constantly moving across the sun. With smartphone-quality cameras, the signal also gets weaker as you get farther away from the corner.

The researchers plan to address some of these challenges in future papers, and will also try to get the system to work while the camera itself is in motion. The team will soon be testing it on a wheelchair, with the goal of eventually adapting it for cars and other vehicles.

“If a little kid darts into the street, a driver might not be able to react in time,” says Bouman. “While we’re not there yet, a technology like this could one day be used to give drivers a few seconds of warning time and help in a lot of life-or-death situations.”

This work was supported, in part, by the DARPA REVEAL Program, the National Science Foundation, Shell Research, and a National Defense Science and Engineering Graduate Fellowship.