MIT scientists are reporting new insights into how the human brain recognizes objects, especially faces, in work that could lead to improved machine vision systems, diagnostics for certain neurological conditions and more.

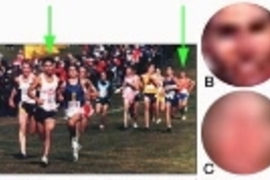

Look at a photo of people running a marathon. The lead runners' faces are quite distinct, but we can also make out the faces of those farther in the distance.

Zoom in on one of those distant runners, however, "and you'll see that there's very little intrinsic face-related information, such as eyes and a nose. It's just a diffuse blob. Yet somehow we can classify that blob as a face," said Pawan Sinha, an assistant professor in the Department of Brain and Cognitive Sciences (BCS). In contrast, performing this task reliably is beyond even the most advanced computer-recognition systems.

In the April 2 issue of Science, Sinha and colleagues show that a specific brain region known to be activated by clear images of faces is also strongly activated by very blurred images, as long as surrounding contextual cues (such as a body) are present. "In other words, the neural circuitry in the human brain can use context to compensate for extreme levels of image degradations," Sinha said.

Past studies of human behavior and the work of many artists have suggested that context plays a role in recognition. "What is novel about this work is that it provides direct evidence of contextual cues eliciting object-specific neural responses in the brain," Sinha said.

The team used functional magnetic resonance imaging to map neuronal responses of the brain's fusiform face area (FFA) to a variety of images. These included clear faces, blurred faces attached to bodies, blurred faces alone, bodies alone, and a blurred face placed in the wrong context (below the torso, for example).

Only the clear faces and blurred faces with proper contextual cues elicited strong FFA responses. "These data support the idea that facial representations underlying FFA activity are based not only on intrinsic facial cues, but rather incorporate contextual information as well," wrote BCS graduate student David Cox, BCS technical assistant Ethan Meyers, and Sinha.

"One of the reasons that reports of such contextual influences on object-specific neural responses have been lacking in the literature so far is that researchers have tended to 'simplify' images by presenting objects in isolation. Using such images precludes consideration of contextual influences," Cox said.

The findings not only add to scientists' understanding of the brain and vision, but also "open up some very interesting issues from the perspective of developmental neuroscience," Sinha said. For example, how does the brain acquire the ability to use contextual cues? Are we born with this ability, or is it learned over time? Sinha is exploring these questions through Project Prakash, a scientific and humanitarian effort to study how people who are born blind but later gain some vision perceive objects and faces.

Potential applications

Computer recognition systems work reasonably well when images are clear, but they break down catastrophically when images are degraded. "A human's ability is so far beyond what the computer can do," Meyers emphasized. "The new work could aid the development of better systems by changing our concept of the kind of image information useful for determining what an object is."

There could also be clinical applications. For example, said Sinha, "contextually evoked neural activity, or the lack of it, could potentially be used as an early diagnostic marker for specific neurological conditions like autism, which are believed to be correlated with impairments in information integration. We hope to address such questions as part of the Brain Development and Disorders Project, a collaboration between MIT and Children's Hospital" in Boston.

This work was supported by the Athinoula A. Martinos Center for Biomedical Imaging, the National Center for Research Resources and the Mental Illness and Neuroscience Discovery (MIND) Institute, as well as the National Defense Science and Engineering Graduate Fellowship, the Alfred P. Sloan Foundation and the John Merck Scholars Award.